You can now access OpenAI's newest model, GPT 5.3 Codex, via Vercel's AI Gateway with no other provider accounts required.

Chat SDK is now open source and available in public beta. It's a TypeScript library for building chat bots that work across Slack, Microsoft Teams, Google Chat, Discord, GitHub, and Linear — from a single codebase.

Sandbox network policies now support HTTP header injection to prevent secrets exfiltration by untrusted code

Generate photorealistic AI videos with the Veo models via Vercel AI Gateway. Text-to-video and image-to-video with native audio generation. Up to 4K resolution with fast mode options.

Generate AI videos with Kling models via the AI Gateway. Text-to-video with audio, image-to-video with first/last frame control, and motion transfer. 7 models including v3.0, v2.6, and turbo variants.

Generate stylized AI videos with Alibaba Wan models via the Vercel AI Gateway. Text-to-video, image-to-video, and unique style transfer (R2V) to transform existing footage into anime, watercolor, and more.

Generate AI videos with xAI Grok Imagine via the AI SDK. Text-to-video, image-to-video, and video editing with natural audio and dialogue.

Build video generation into your apps with AI Gateway. Create product videos, dynamic content, and marketing assets at scale.

You can now access Google's newest model, Gemini 3.1 Pro Preview, via Vercel's AI Gateway with no other provider accounts required.

You can now access Anthropic's newest model, Claude Sonnet 4.6, via Vercel's AI Gateway with no other provider accounts required.

You can now access the Recraft V4 image model via Vercel's AI Gateway with no other provider accounts required.

You can now access Alibaba's latest model, Qwen 3.5 Plus, via Vercel's AI Gateway with no other provider accounts required.

You can now access MiniMax M2.5 through Vercel's AI Gateway with no other provider accounts required.

You can now access Z.AI's latest model, GLM 5, via Vercel's AI Gateway with no other provider accounts required.

Runtime logs are now available via Vercel's MCP server, enabling AI agents to analyze performance, fix errors, and more.

Learn how we built an AI Engine Optimization system to track coding agents using Vercel Sandbox, AI Gateway, and Workflows for isolated execution.

Why competitive advantage in AI comes from the platform you deploy agents on, not the agents themselves.

A six-week program to help you scale your AI company, offering over $6M in credits from Vercel, v0, AWS, and leading AI platforms.

You can now access Anthropic's latest model, Claude Opus 4.6, via Vercel's AI Gateway with no other provider accounts required.

How Stably, a 6-person team, ships AI testing agents faster with Vercel, moving from weeks to hours. Their shift highlights how Vercel's platform eliminates infrastructure anxiety, boosting autonomous testing and enabling quick enterprise growth.

Parallel is now available on the Vercel Marketplace with a native integration for Vercel projects. Developers can add Parallel’s web tools and AI agents to their apps in minutes, including Search, Extract, Tasks, FindAll, and Monitoring

Parallel's Web Search and other tools are now available on Vercel AI Gateway, AI SDK, and Marketplace. Add web search to any model with domain filtering, date constraints, and agentic mode support.

TRAE now integrates with Vercel for one-click deployments and access to hundreds of AI models through a single API key. Available in both SOLO and IDE modes.

AI agents need secure, isolated environments that spin up instantly. Vercel Sandbox is now generally available with filesystem snapshots, container support, and production reliability.

Cubic joins the Vercel Agents Marketplace, offering teams an AI code reviewer with full codebase context, unified billing, and automated fixes.

AssistLoop is now available in the Vercel Marketplace, making it easy to add AI-powered customer support to Next.js apps deployed on Vercel.

Sensay went from zero to an MVP launch in six weeks for Web Summit. With Vercel preview deployments, feature flags, and rollbacks, the team shipped fast without a DevOps team.

You can now access Arcee AI's Trinity Large Preview model via AI Gateway with no other provider accounts required.

You can now access Qwen 3 Max Thinking via Vercel's AI Gateway with no other provider accounts required.

You can now access Moonshot AI's Kimi K2.5 model via Vercel's AI Gateway with no other provider accounts required.

A plainspoken Skills FAQ with a ready-to-use guide: what skill packages are, how agents load them, what skills-ai.dev is, how Skills compare to MCP, plus security and alternatives.

You can use your Claude Code Max subscription through Vercel's AI Gateway. This lets you leverage your existing subscription while gaining centralized observability, usage tracking, and monitoring capabilities for all your Claude Code requests.

You can now view live model performance metrics for latency and throughput on Vercel AI Gateway on the website and via REST API.

You can use Vercel AI Gateway with Clawdbot and access hundreds of models with no additional API keys required.

Skills support is now available in bash-tool, so your AI SDK agents can use the skills pattern with filesystem context, Bash execution, and sandboxed runtime access.

A brand new set of components designed to help you build the next generation of IDEs, coding apps and background agents.

Introducing skills, a CLI for installing and managing agent “skill packages.” Add a skill package with npx skills add <package>, with more commands planned.

You can now access image models from Recraft in Vercel AI Gateway with no other provider accounts required.

Users can opt in to an experimental build mode for backend frameworks like Hono or Express. The new behavior allows logs to be filtered by route, similar to Next.js and other frameworks. It also improves the build process in several ways.

SSH into running Sandboxes using the Vercel Sandbox CLI. Open secure, interactive shell sessions, with timeouts automatically extended in 5-minute increments for up to 5 hours.

Use the OpenResponses API on Vercel AI Gateway with no other API keys required and support for multiple providers.

We've shipped optimizations that reduce build overhead, particularly for projects with many input files, large node_modules, or extensive build outputs.

You can use Perplexity web search with any model and any provider on Vercel AI Gateway to access real-time results and up-to-date information.

Node.js runtime in Vercel Sandbox now defaults to Node.js 24 for newer features and performance improvements.

Today we're releasing a brand new set of components for AI Elements designed to work with the Transcription and Speech functions of the AI SDK, helping you build voice agents.

You can now access the GPT 5.2 Codex model on Vercel's AI Gateway with no other provider accounts required.

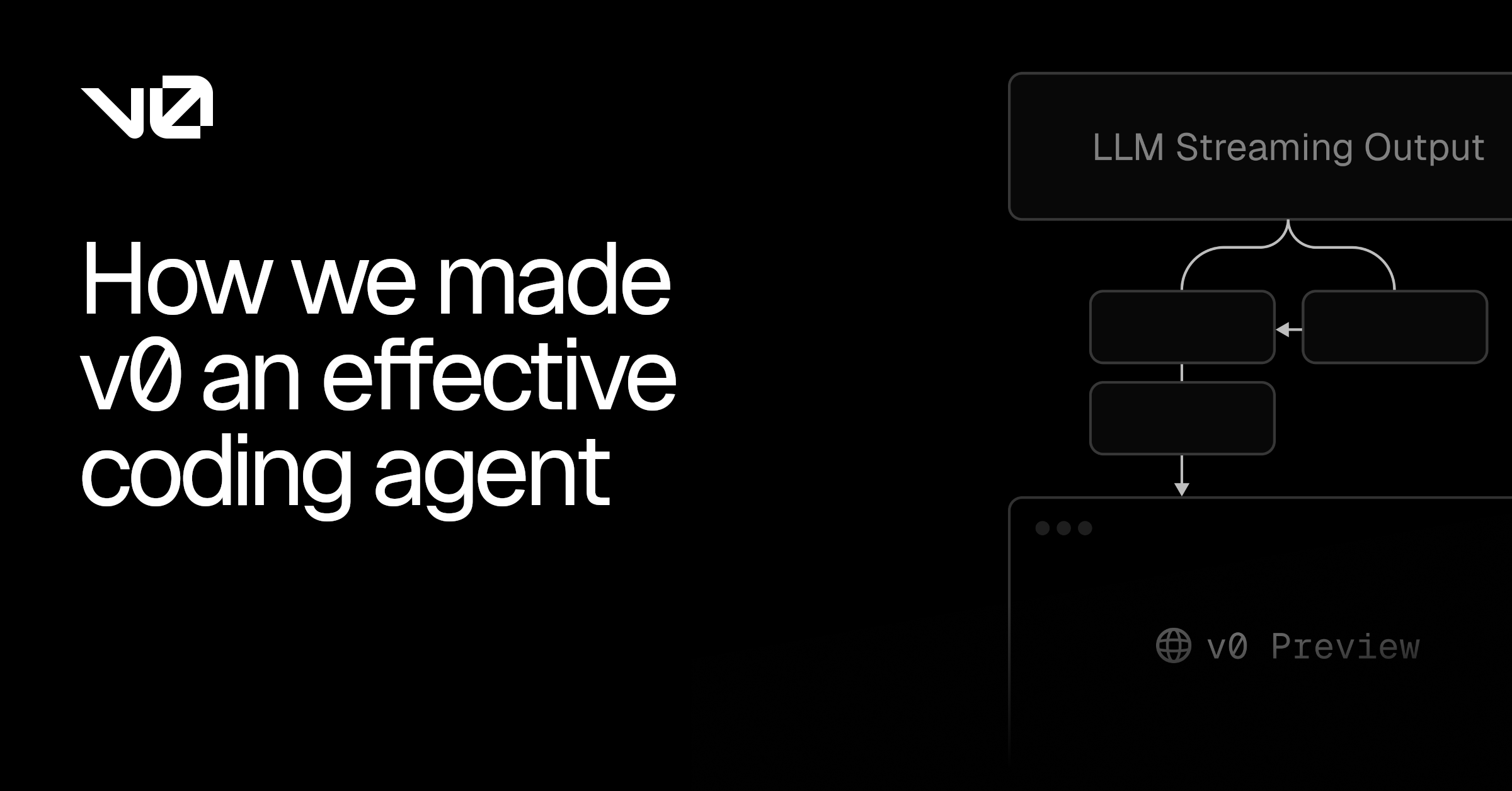

v0’s composite AI pipeline boosts reliability by fixing errors in real time. Learn how dynamic system prompts, LLM Suspense, and autofixers work together to deliver stable, working web app generations at scale.

Use Vercel AI Gateway from Claude Code via the Anthropic-compatible endpoint, with a URL change and AI Gateway usage and cost tracking.

How Vercel built AI-generated pixel trading cards for Next.js Conf and Ship AI, then turned the same pipeline into a v0 template and festive holiday experiment.

You can now access the Z.ai GLM-4.7 model on Vercel's AI Gateway with no other provider accounts required.

Introducing agents, tool execution approval, DevTools, full MCP support, reranking, image editing, and more.

You can now access the Gemini 3 Flash model on Vercel's AI Gateway with no other provider accounts required.

Cline scales its open source coding agent with Vercel AI Gateway, delivering global performance, transparent pricing, and enterprise reliability.

A database of tutorials, videos, and best practices for building on Vercel, written by engineers from across the company.

You can now access the GPT 5.2 models on Vercel's AI Gateway with no other provider accounts required.

You can now access the GPT 5.1 Codex Max model with Vercel's AI Gateway with no other provider accounts required.

You can now access Amazon's latest model Nova 2 Lite on Vercel AI Gateway with no other provider accounts required.

You can now access Mistral's latest model, Mistral Large 3, on Vercel AI Gateway with no other provider accounts required.

You can now access the newest DeepSeek models, V3.2 and V3.2 Speciale, in Vercel AI Gateway with no other provider accounts required.

You can now access the newest Arcee AI model Trinity Mini in Vercel AI Gateway with no other provider accounts required.

You can now access Prime Intellect AI's Intellect-3 model in Vercel AI Gateway with no other provider accounts required.

You can now access the newest image model FLUX.2 Pro from Black Forest Labs in Vercel AI Gateway with no other provider accounts required.

You can now access Anthropic's latest model Claude Opus 4.5 in Vercel AI Gateway with no other provider accounts required.

At Vercel, we’re building self-driving infrastructure, a system that autonomously manages production operations, improves application code using real-world insights, and learns from the unpredictable nature of production itself.

You can now access xAI's Grok 4.1 models in Vercel AI Gateway with no other provider accounts required.

You can now access Google's latest model Nano Banana Pro (Gemini 3 Pro Image) in Vercel AI Gateway with no other provider accounts required.

You can now access Google's latest model Gemini 3 Pro in Vercel AI Gateway with no other provider accounts required.

The Gemini 3 Pro Preview model, released today, is now available through AI Gateway and in production on v0.app.

You can now access the two GPT 5.1 Codex models with Vercel's AI Gateway with no other provider accounts required.

You can now access the two GPT 5.1 models with Vercel's AI Gateway with no other provider accounts required.

Model fallbacks are now supported in Vercel AI Gateway in addition to provider routing, giving you failover options when models fail or are unavailable.
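
As a rough illustration of what model-level failover involves, the TypeScript sketch below tries a list of models in order and returns the first successful response. It is not the AI Gateway API: `ModelCall`, `FallbackResult`, and `generateWithFallbacks` are hypothetical names invented for this sketch, and the caller supplies whatever function actually talks to a model.

```typescript
// Hypothetical type: sends a prompt to one model and resolves with its text.
type ModelCall = (modelId: string, prompt: string) => Promise<string>;

interface FallbackResult {
  modelId: string; // the model that finally answered
  text: string;
  failures: string[]; // models that were tried and failed, in order
}

// Tries each model in order and returns the first successful response.
async function generateWithFallbacks(
  call: ModelCall,
  modelIds: string[],
  prompt: string,
): Promise<FallbackResult> {
  const failures: string[] = [];
  for (const modelId of modelIds) {
    try {
      const text = await call(modelId, prompt);
      return { modelId, text, failures };
    } catch {
      // This model failed or is unavailable: remember it and try the next one.
      failures.push(modelId);
    }
  }
  throw new Error(`All models failed: ${failures.join(", ")}`);
}
```

Recording the failed models alongside the answer keeps the failover visible to the caller, which is useful for logging which model actually served a request.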

You can now search for domains on Vercel using AI. Results are fast and creative, and you have full control via the search bar.

Vercel BotID Deep Analysis protected Nous Research by blocking advanced automated abuse targeting their application.

A low-severity input validation bypass vulnerability in the Vercel AI SDK has been mitigated and fixed.

You can now access Moonshot AI's Kimi K2 Thinking and Kimi K2 Thinking Turbo with Vercel's AI Gateway with no other provider accounts required.

The AI Gateway is a simple application deployed on Vercel, but it achieves scale, efficiency, and resilience by running on Fluid compute and leveraging Vercel’s global infrastructure.

Improved observability of redirects and rewrites is now generally available under the Observability tab on Vercel's Dashboard. Redirect and rewrite request logs are now drainable to any configured Drain.

Microfrontends are now generally available, enabling you to split large applications into smaller units that render as one cohesive experience for users.

Vercel now detects and deploys Fastify, a fast and low overhead web framework, with zero configuration.

BotID Deep Analysis is a sophisticated, invisible bot detection product. This article is about how BotID Deep Analysis adapted to a novel attack in real time, and successfully classified sessions that would have slipped through other services.

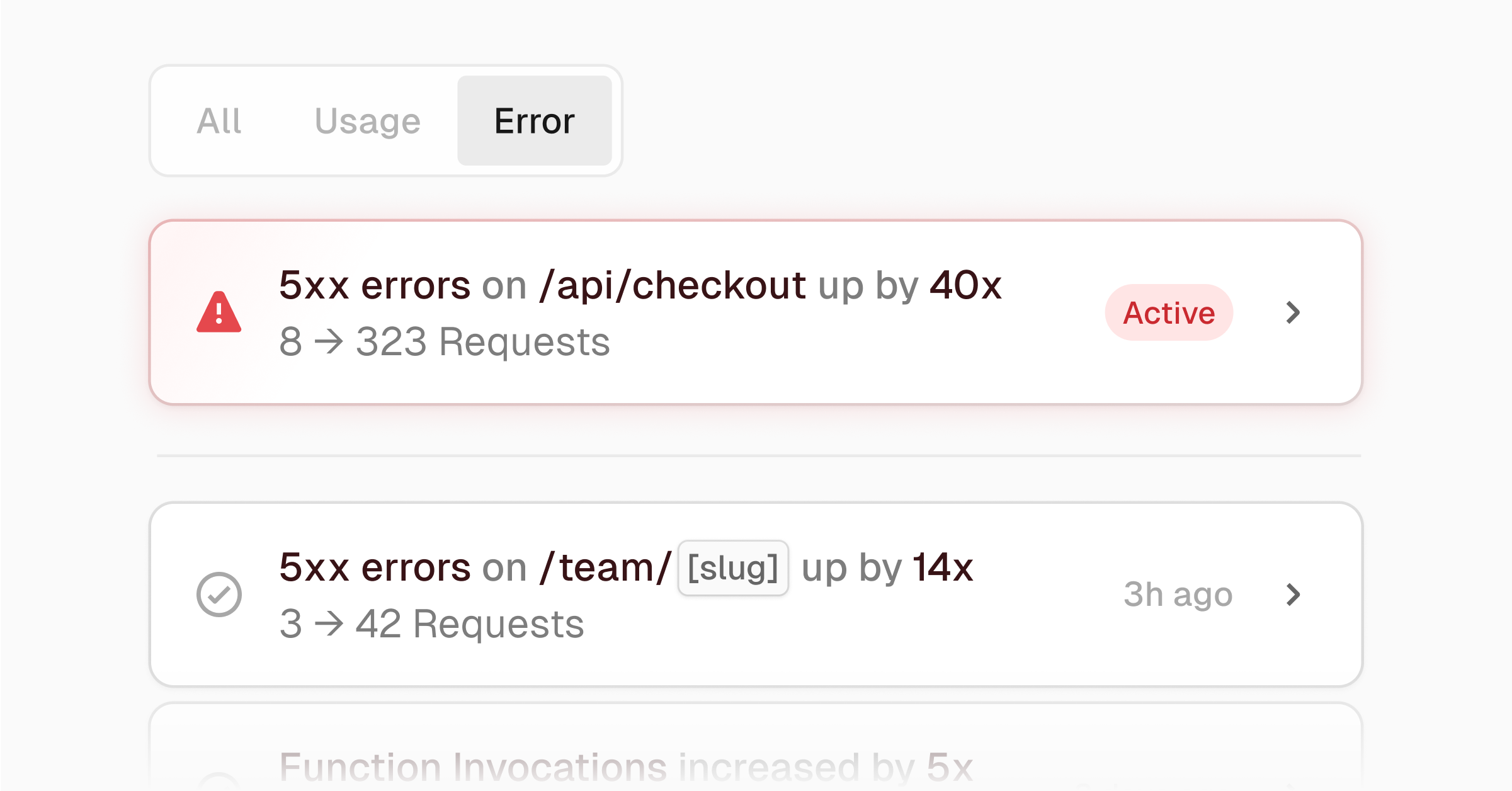

Vercel Agent Investigation intelligently conducts incident response investigations to alert, analyze, and suggest remediation steps

Details on ISR cache keys, cache tags, and cache revalidation reasons are now available in Runtime Logs for all customers

You can now access OpenAI's GPT-OSS-Safeguard-20B with Vercel's AI Gateway with no other provider accounts required.

Vercel has achieved the TISAX Assessment Level 2 security standard used in the automotive and manufacturing industries.

Vercel has achieved the TISAX Assessment Level 2 security standard to align with the automotive and manufacturing industries.

You can now access MiniMax M2 with Vercel's AI Gateway for free with no other provider accounts required.

The Bun runtime is now available in Public Beta for Vercel Functions. Benchmarks show Bun reduced average latency by 28% for CPU-bound Next.js rendering compared to Node.js.

Vercel Functions now supports the Bun runtime, giving developers faster performance options and greater flexibility for optimizing JavaScript workloads.

David Totten joins Vercel as VP of Global Field Engineering from Databricks to oversee Sales Engineering, Developer Success, Professional Services, and Customer Support Engineering under one integrated organization

Earlier this year we introduced the foundations of the AI Cloud: a platform for building intelligent systems that think, plan, and act. At Ship AI, we showed what comes next: what to build with the AI Cloud, and how.

AI Chat is now live on Vercel docs. Ask questions, load docs as context for page-aware answers, and copy chats as Markdown for easy sharing.

Vercel Firewall and Observability Plus can now configure Custom Rules targeting specific server actions

Discover AI Agents & Services on the Vercel Marketplace. Integrate agentic tools, automate workflows, and build with unified billing and observability.

Vercel Agent can now automatically run AI investigations on anomalous events for faster incident response.

Build, scale, and orchestrate AI backends on Vercel. Deploy Python or Node frameworks with zero config and optimized compute for agents and workflows.

Agents and Tools are available in the Vercel Marketplace, enabling AI-powered workflows in your projects with native integrations, unified billing, and built-in observability.

Vercel AI Cloud combines unified model routing and failover, elastic cost-efficient compute that only bills for active CPU time, isolated execution for untrusted code, and workflow durability that survives restarts, deploys, and long pauses.

Braintrust joins the Vercel Marketplace with native support for the Vercel AI SDK and AI Gateway, enabling developers to monitor, evaluate, and improve AI application performance in real time.

You can now access Claude Haiku 4.5 with Vercel's AI Gateway with no other provider accounts required.

Vercel and Salesforce are partnering to help teams build, ship, and scale AI agents across the Salesforce ecosystem, starting with Slack.

Use the Apps SDK, Next.js, and mcp-handler to build and deploy ChatGPT apps on Vercel, complete with custom UI and app-specific functionality.

Vercel now uses uv, a fast Python package manager written in Rust, as the default package manager during the installation step for all Python builds.

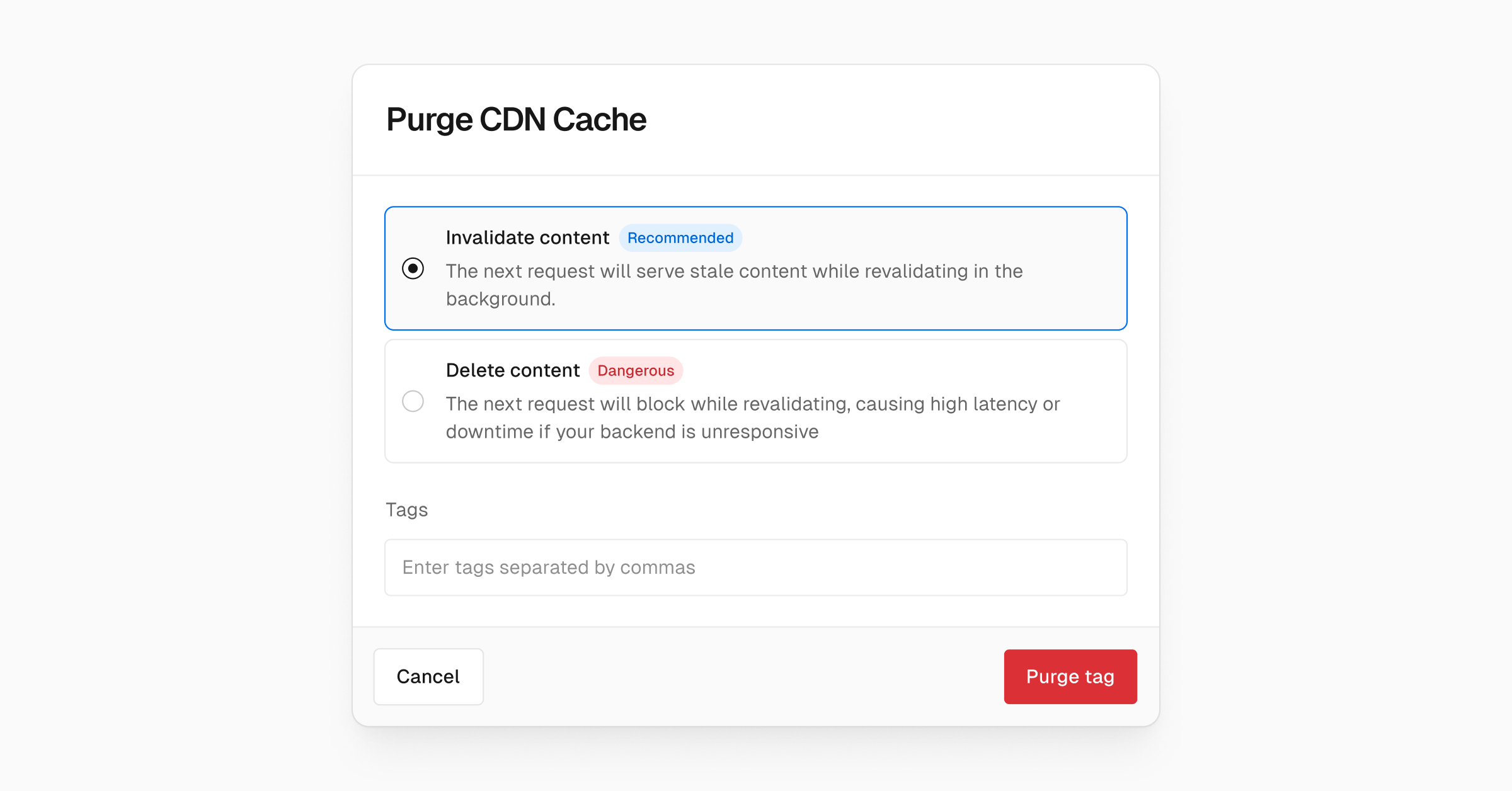

You can now invalidate CDN cache contents by tag, providing a way to revalidate content without increasing latency for your users.
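
One way to picture tag-based invalidation that does not add latency is a cache that marks tagged entries as stale instead of deleting them, so the old value can still be served while a refresh runs in the background. The TypeScript sketch below models that idea in memory. It rests on that assumption rather than on the CDN's documented behavior, and `TaggedCache` and its methods are hypothetical names for this sketch.

```typescript
interface Entry {
  value: string;
  tags: string[];
  stale: boolean;
}

// In-memory model of a cache whose entries can be invalidated by tag.
class TaggedCache {
  private readonly entries = new Map<string, Entry>();

  set(key: string, value: string, tags: string[]): void {
    this.entries.set(key, { value, tags, stale: false });
  }

  // Returns the cached value plus a flag telling the caller to refresh it in the background.
  get(key: string): { value: string; stale: boolean } | undefined {
    const entry = this.entries.get(key);
    return entry ? { value: entry.value, stale: entry.stale } : undefined;
  }

  // Marks every fresh entry carrying the tag as stale and returns how many were affected.
  invalidateTag(tag: string): number {
    let count = 0;
    this.entries.forEach((entry) => {
      if (entry.tags.indexOf(tag) !== -1 && !entry.stale) {
        entry.stale = true;
        count++;
      }
    });
    return count;
  }
}
```

Because invalidated entries stay readable, a request that arrives right after `invalidateTag` still gets an immediate response instead of waiting for regeneration.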

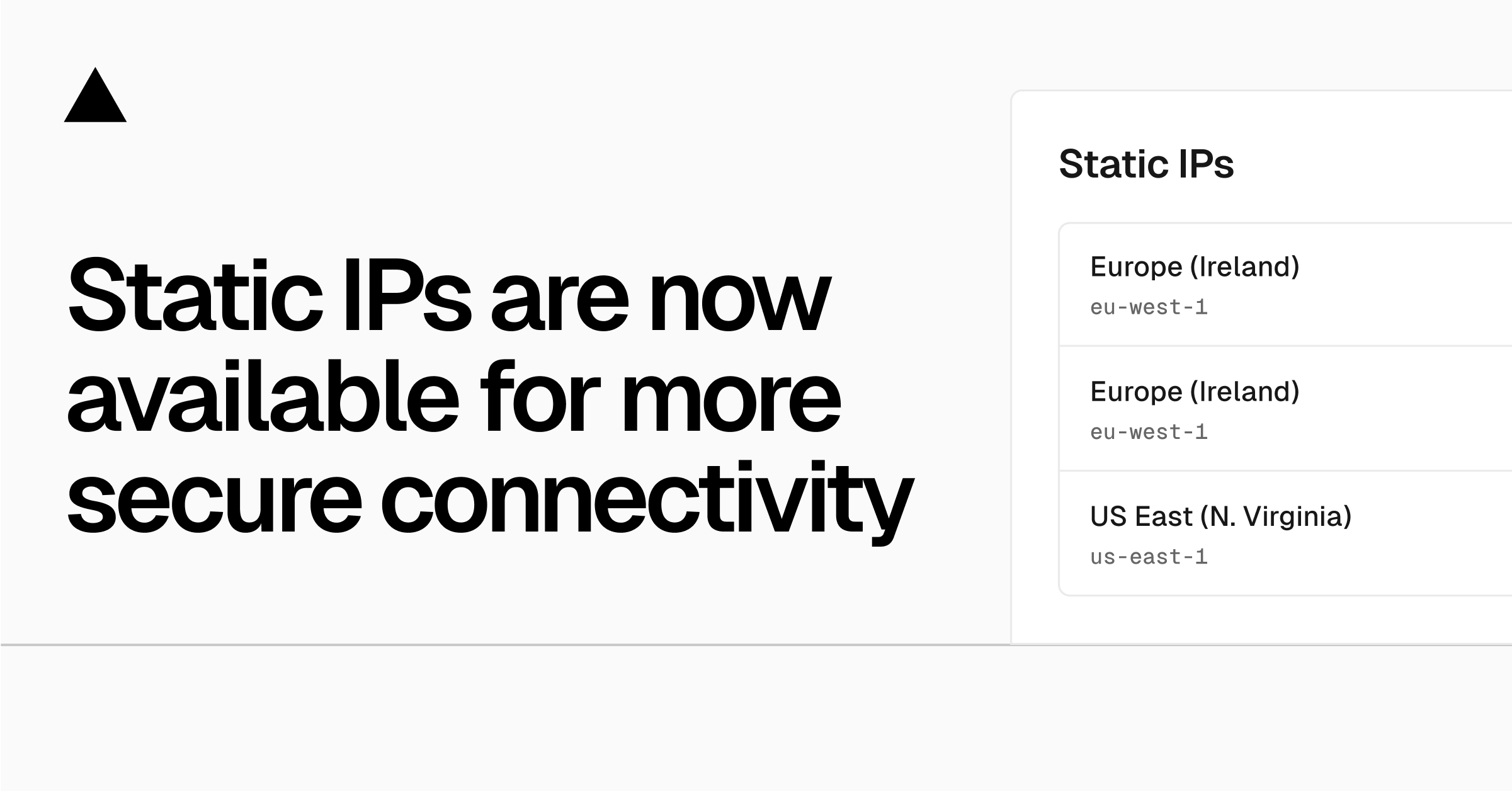

Teams on Pro and Enterprise can now access Static IPs to connect to services that require IP allowlisting. Static IPs give your projects consistent outbound IPs without needing the full networking stack of Secure Compute.

You can now enable Fluid compute on a per-deployment basis by setting "fluid": true in your vercel.json.

Publishing v0 apps on Vercel got 1.1 seconds faster on average, thanks to optimizations in how source files are sent during deployment creation.

Today, Vercel announced an important milestone: a Series F funding round valuing our company at $9.3 billion.

A Stripe claimable sandbox is now available on the Vercel Marketplace and v0. You can test your flow fully in test mode and claim the sandbox when you're ready to go live.

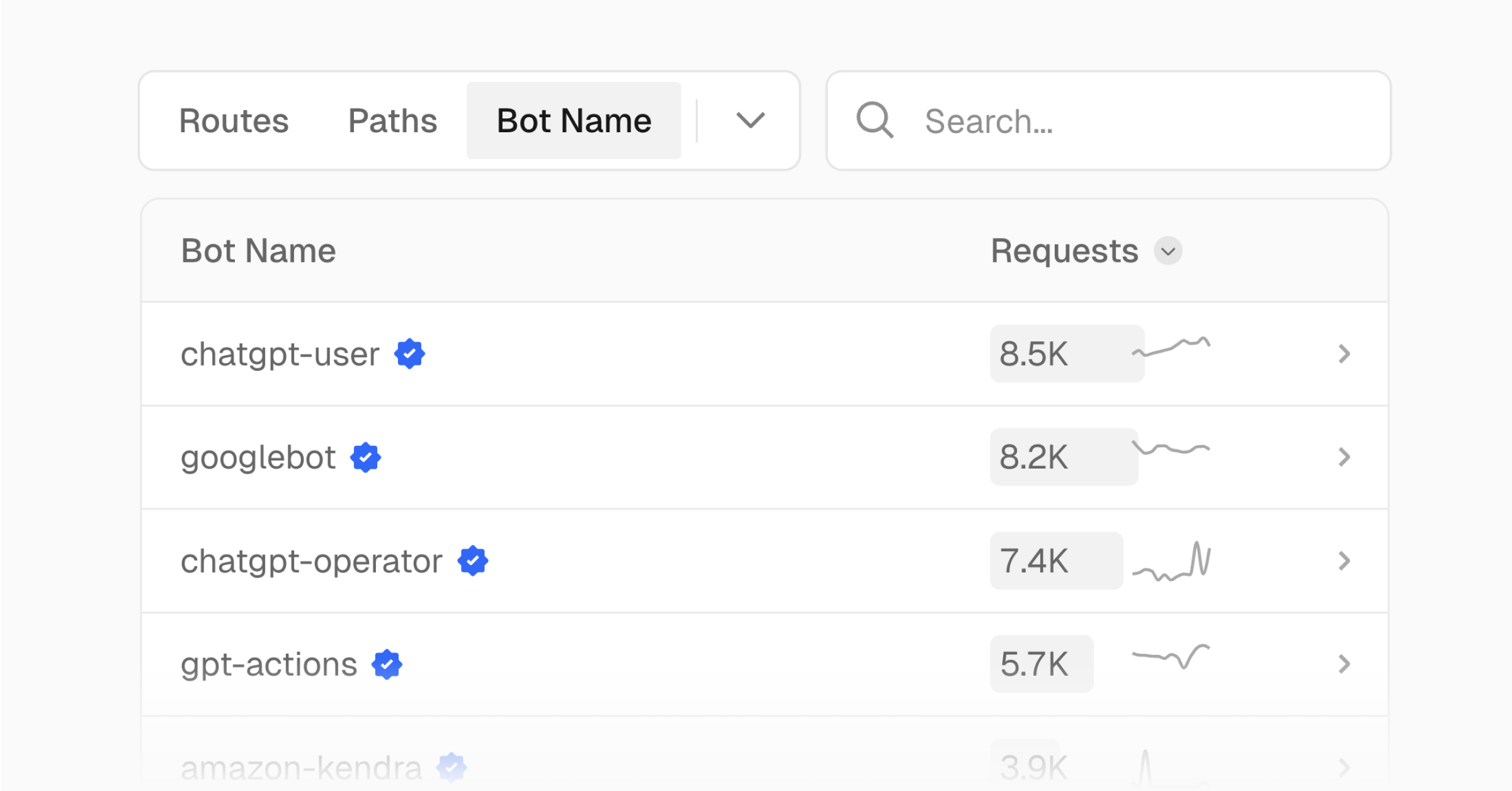

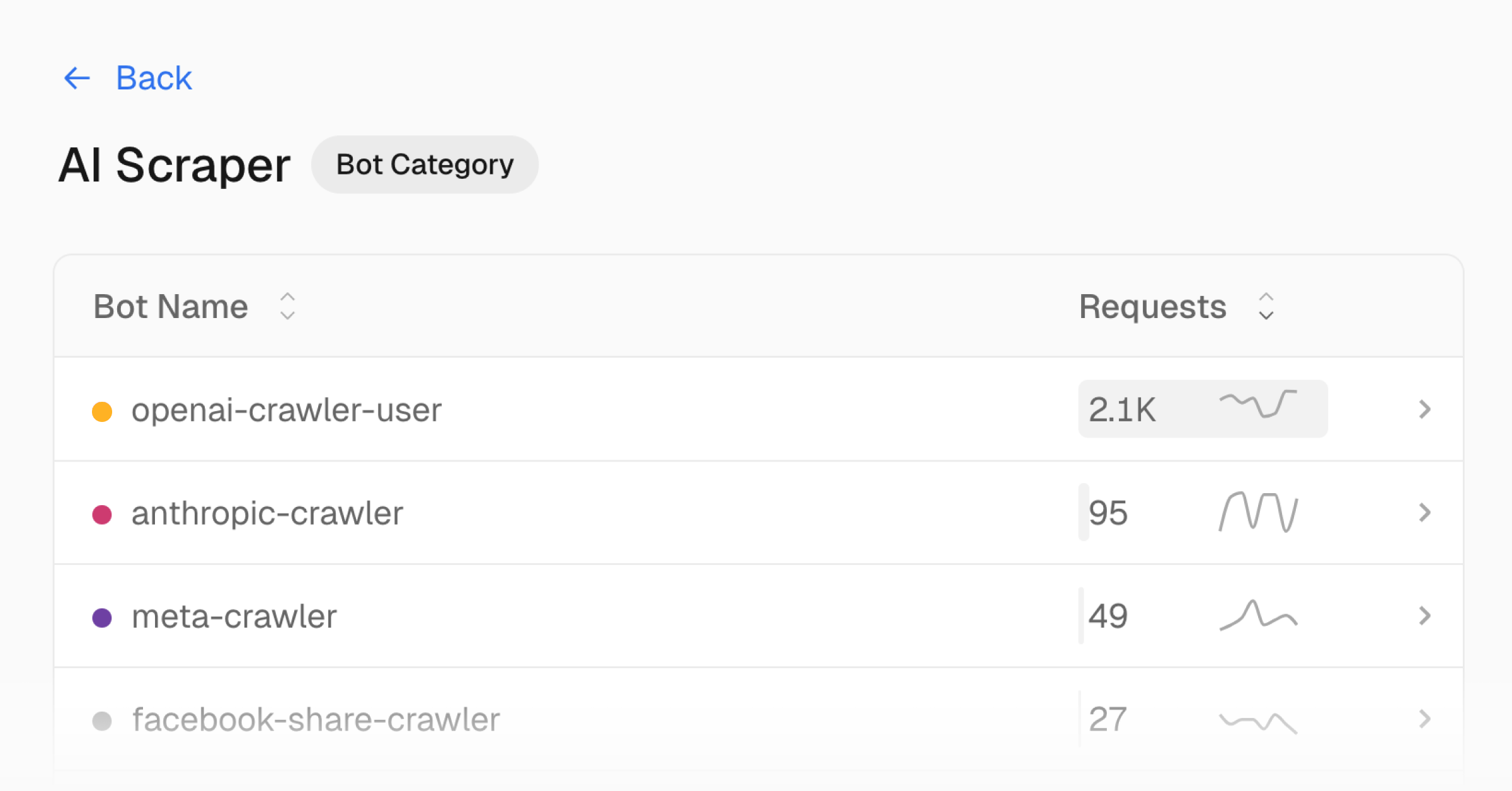

Analyze traffic to your Vercel projects by bot name, bot category, and bot verification status in Vercel Observability

Claude Sonnet 4.5 is now available on Vercel AI Gateway and across the Vercel AI Cloud. Also introducing a new coding agent platform template.

Vercel Functions using Node.js can now detect when a request is cancelled and stop execution before completion. This includes actions like navigating away, closing a tab, or hitting stop on an AI chat to terminate compute processing early.
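
A common pattern for this kind of early termination is cooperative cancellation: long-running work checks a cancellation flag between steps and stops once it is set. The TypeScript sketch below shows the idea with a minimal `CancelSignal` interface that the standard `AbortSignal` also satisfies through its `aborted` property. How Vercel Functions surface the cancellation is not described here, so `CancelSignal` and `streamTokens` are hypothetical names for this sketch.

```typescript
// Structural stand-in for the part of the standard AbortSignal this sketch reads.
interface CancelSignal {
  readonly aborted: boolean;
}

// Emits tokens one at a time and stops as soon as the signal reports cancellation.
// Returns how many tokens were actually produced.
function streamTokens(
  tokens: string[],
  signal: CancelSignal,
  emit: (token: string) => void,
): number {
  let produced = 0;
  for (const token of tokens) {
    if (signal.aborted) break; // the client went away: skip the remaining work
    emit(token);
    produced++;
  }
  return produced;
}
```

Checking the flag once per step bounds the wasted compute to at most one step after the user hits stop.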

FastAPI, a modern, high-performance web framework for building APIs with Python based on standard Python type hints, is now supported with zero configuration.

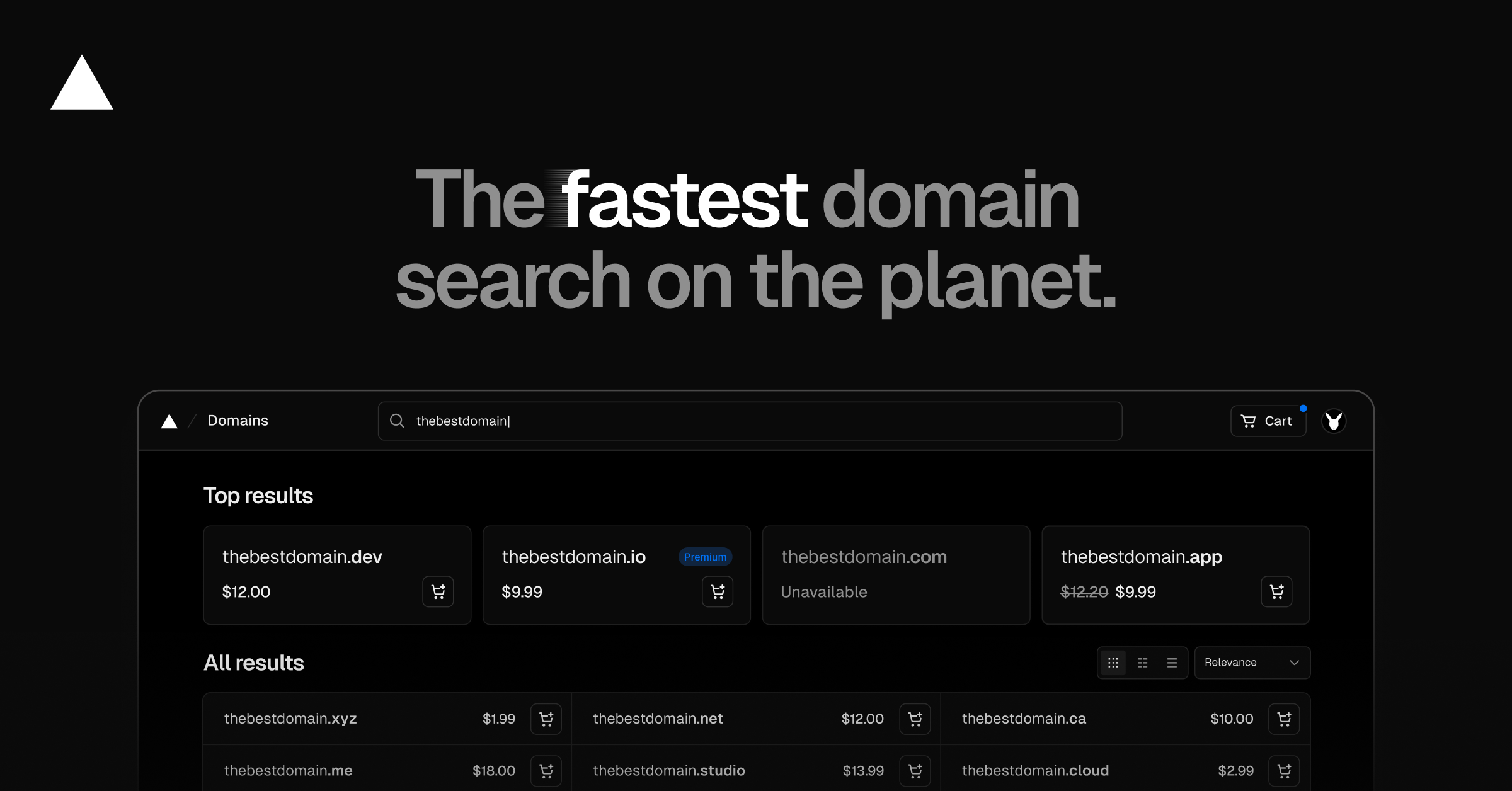

We rebuilt the Vercel Domains experience to make search and checkout significantly faster and more reliable.

Vercel now does regional request collapsing on cache miss for Incremental Static Regeneration (ISR).

The Vercel CDN now supports request collapsing for ISR routes. For a given path, only one function invocation per region runs at once, no matter how many concurrent requests arrive.
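
Request collapsing can be sketched as a map of in-flight work keyed by path: the first request starts the render, and concurrent requests for the same path join the pending promise instead of invoking the function again. The TypeScript below is a single-process illustration only, not the CDN's implementation, and `collapsedRender` and the `render` callback are hypothetical names.

```typescript
// In-flight renders keyed by path; an entry exists only while its render is running.
const inFlight = new Map<string, Promise<string>>();

function collapsedRender(
  path: string,
  render: (path: string) => Promise<string>,
): Promise<string> {
  const pending = inFlight.get(path);
  if (pending) return pending; // join the render that is already running

  const started = render(path).then(
    (html) => {
      inFlight.delete(path); // later requests trigger a fresh render
      return html;
    },
    (error) => {
      inFlight.delete(path); // do not cache failures
      throw error;
    },
  );
  inFlight.set(path, started);
  return started;
}
```

Two concurrent calls for the same path receive the same promise, so `render` runs once for that path no matter how many callers are waiting, while a different path gets its own render.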

It's now possible to run custom queries against all external API requests that were made from Vercel Functions.

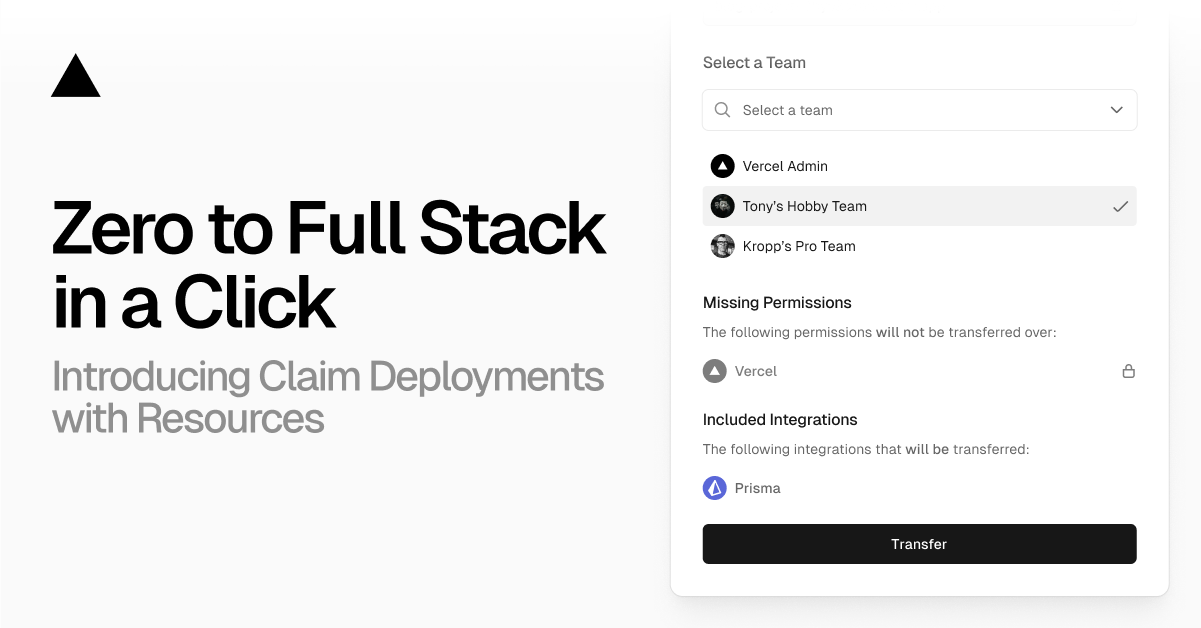

Vercel now supports transferring resources like databases between teams as part of the Claim Deployments flow. Developers building AI agents, no-code tools, and workflow apps can instantly deploy projects and resources

Enterprise customers with Observability Plus can now receive anomaly alerts for errors as part of a limited beta.

A financial institution's suspicious bot traffic turned out to be Google bots crawling SEO-poisoned URLs from years ago. Here's how BotID revealed the real problem.

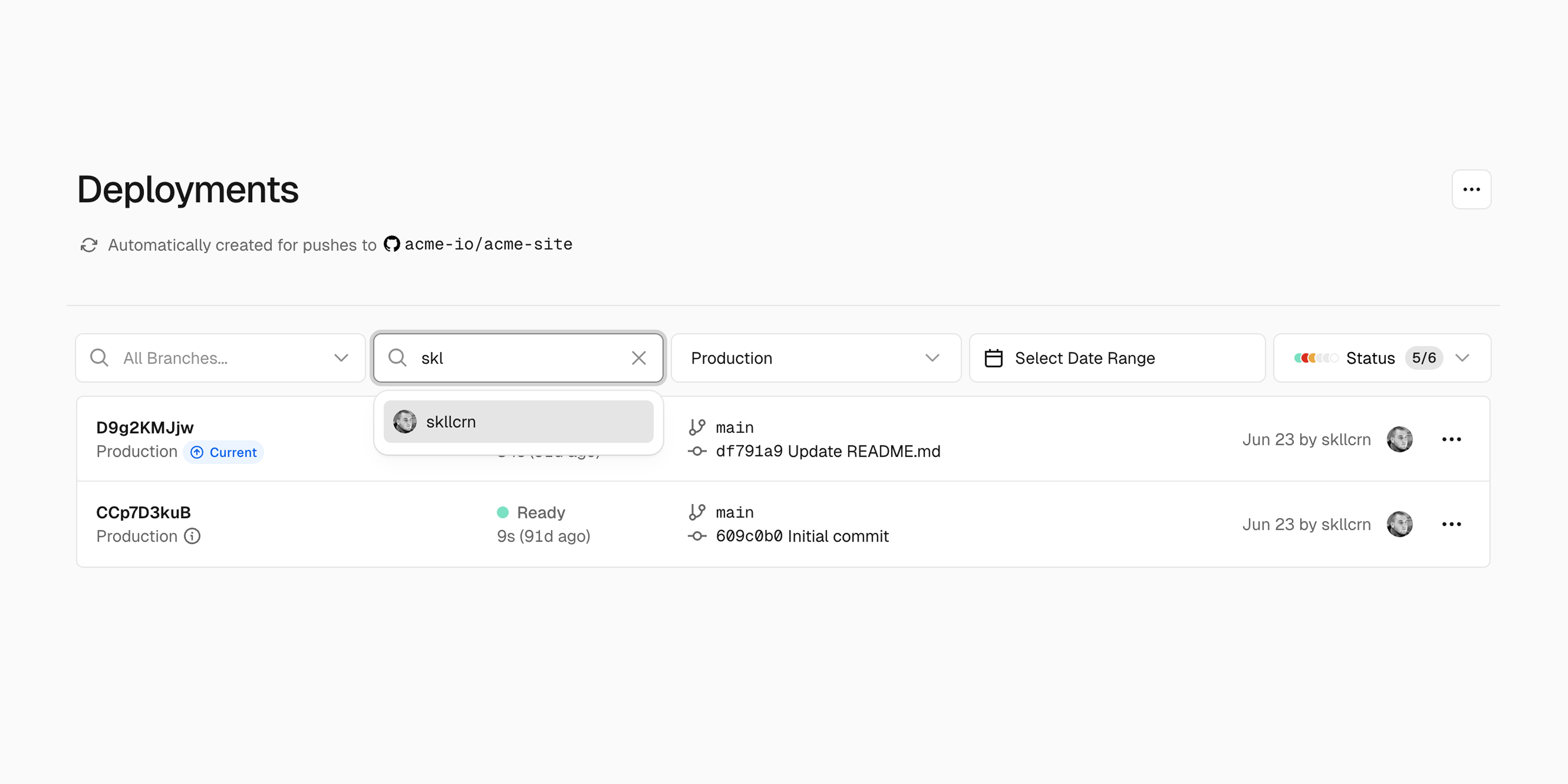

Easily filter deployments on the Vercel dashboard by the Vercel username or email, or git username (if applicable).

We replaced slow JSON path lookups with Bloom filters in our global routing service, cutting memory usage by 15% and reducing 99th percentile lookup times from hundreds of milliseconds to under 1 ms. Here’s how we did it.
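
For readers unfamiliar with the data structure, the TypeScript sketch below shows how a Bloom filter answers "might this path exist?" with a fixed-size bit array. It is not the routing service's implementation: the `BloomFilter` class, the FNV-1a-style hash, and the sizes used are assumptions made for illustration.

```typescript
class BloomFilter {
  private readonly bits: Uint8Array;
  private readonly bitCount: number;
  private readonly hashCount: number;

  constructor(bitCount: number, hashCount: number) {
    this.bitCount = bitCount;
    this.hashCount = hashCount;
    this.bits = new Uint8Array(Math.ceil(bitCount / 8));
  }

  // FNV-1a-style 32-bit hash; the seed varies the starting state per hash function.
  private hash(value: string, seed: number): number {
    let h = (2166136261 ^ seed) >>> 0;
    for (let i = 0; i < value.length; i++) {
      h ^= value.charCodeAt(i);
      h = Math.imul(h, 16777619) >>> 0;
    }
    return h % this.bitCount;
  }

  add(value: string): void {
    for (let seed = 0; seed < this.hashCount; seed++) {
      const bit = this.hash(value, seed);
      this.bits[bit >> 3] |= 1 << (bit & 7);
    }
  }

  // false means "definitely absent"; true means "possibly present".
  mightContain(value: string): boolean {
    for (let seed = 0; seed < this.hashCount; seed++) {
      const bit = this.hash(value, seed);
      if ((this.bits[bit >> 3] & (1 << (bit & 7))) === 0) return false;
    }
    return true;
  }
}
```

A filter never returns a false negative, so a `false` result can skip the expensive lookup entirely. A `true` result may be a false positive and still needs confirmation against the real data.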

Observability Plus will replace the legacy Monitoring subscription. Pro customers using Monitoring should migrate to Observability Plus to author custom queries on their Vercel data.

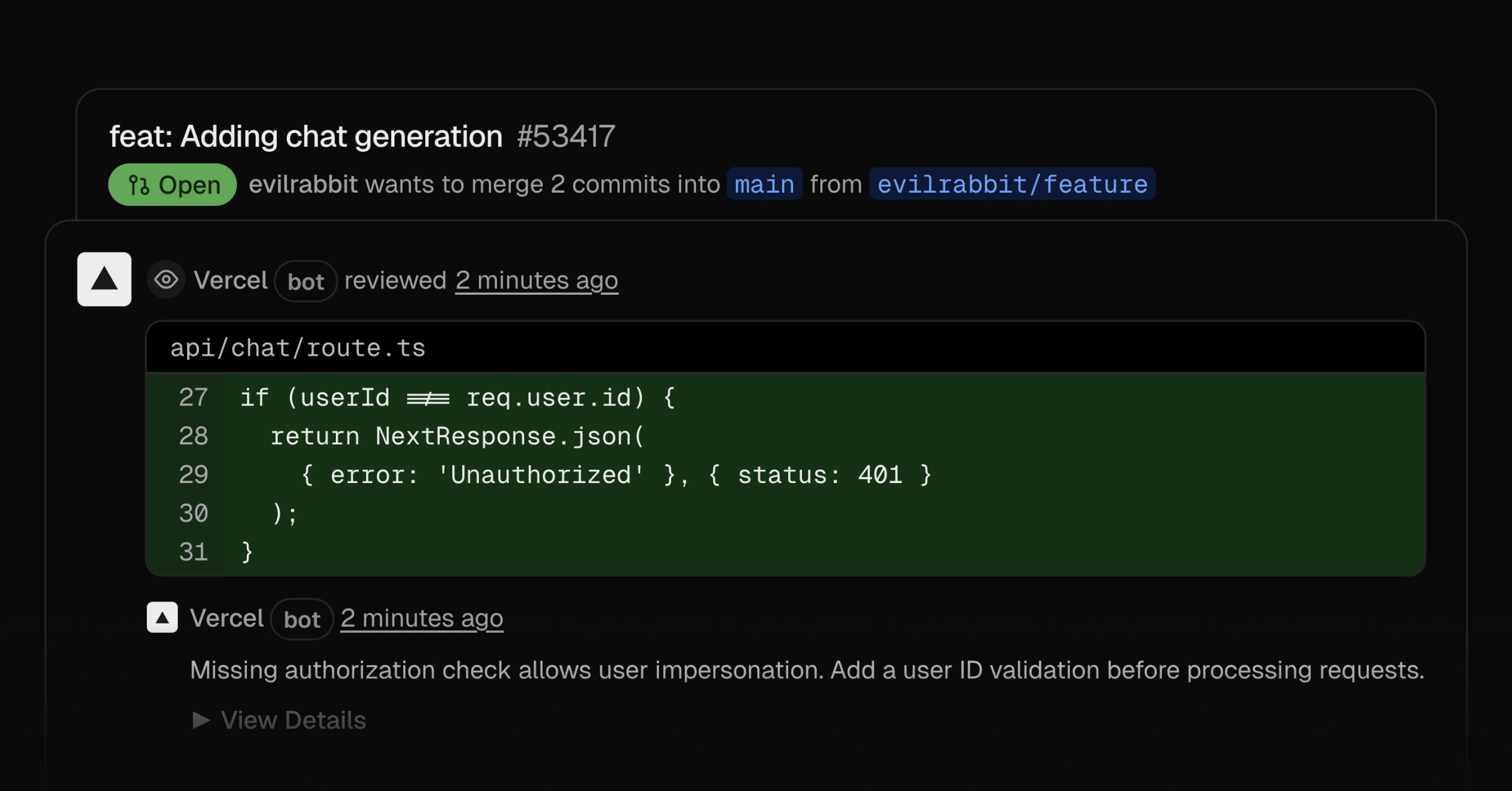

Vercel Agent now provides high-signal AI code reviews and fix suggestions to speed up your development process.

Vibe coding is revolutionizing how we work. English is now the fastest-growing programming language. Our State of Vibe Coding report outlines what you need to know.

Use mcp-to-ai-sdk to generate MCP tools directly into your project. Gain security, reliability, and prompt-tuned control while avoiding dynamic MCP risks.

Ongoing Shai-Hulud npm supply chain attacks affected popular packages. Vercel responded swiftly, secured builds, and notified impacted users.

Learn more about how Rox runs global, AI-driven sales ops on fast, reliable infrastructure thanks to Vercel

The 150-year-old Norwegian brand leveraged Next.js and Vercel to achieve 154% Black Friday growth and 30%+ conversion lift while competing against industry titans in a crowded space

You can now access Qwen3 Next, two models from QwenLM, designed to be ultra-efficient, using Vercel's AI Gateway with no other provider accounts required.

Introducing x402-mcp, a library that integrates with the AI SDK to bring x402 paywalls to Model Context Protocol (MCP) servers, letting agents discover, call, and pay for MCP tools easily and securely.

We built x402-mcp to integrate x402 payments with Model Context Protocol (MCP) servers and the Vercel AI SDK.

You can now access LongCat-Flash Chat from Meituan using Vercel AI Gateway, with no Meituan account required.

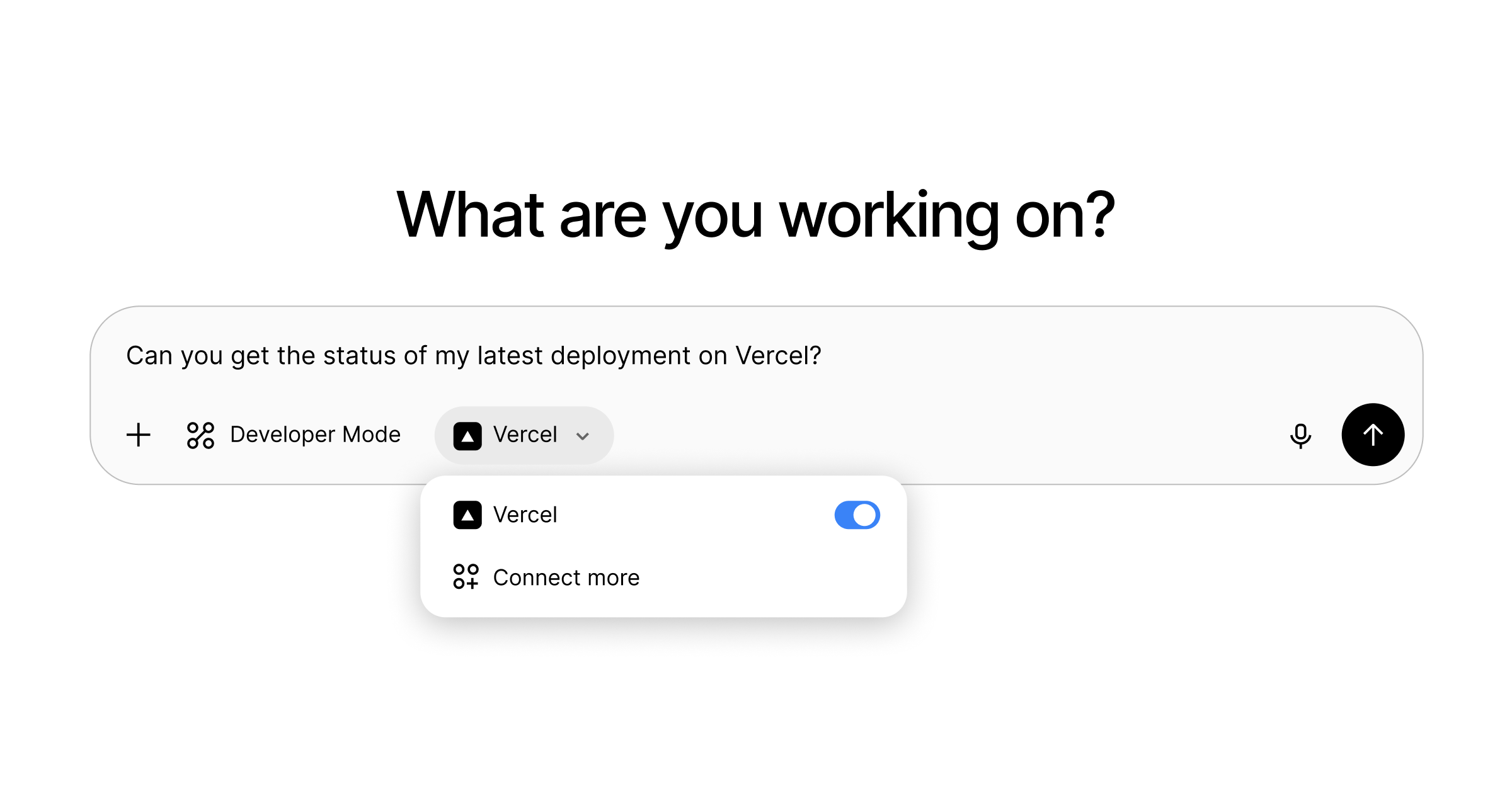

Use Vercel MCP with ChatGPT to explore projects, view logs, share access to protected deployments, and more.

The second wave of MCP: building for LLMs, not developers. Explore the evolution of MCP as it shifts from developer-focused tools to LLM-native integrations. Discover the future of AI connectivity.

You can now access the AI SDK and AI Gateway with the vercel/ai-action@v2 GitHub Action. Use it to generate text or structured JSON directly in your workflows by configuring a prompt, model, and api-key. Learn more in the docs.

NPM packages installation got faster for v0 builds. It went from 5 seconds to 1.5 seconds on average, which is a 70% reduction.

Deploy your Slack agent to Vercel's AI Cloud using @vercel/slack-bolt to take advantage of AI Gateway, Fluid compute, and more.

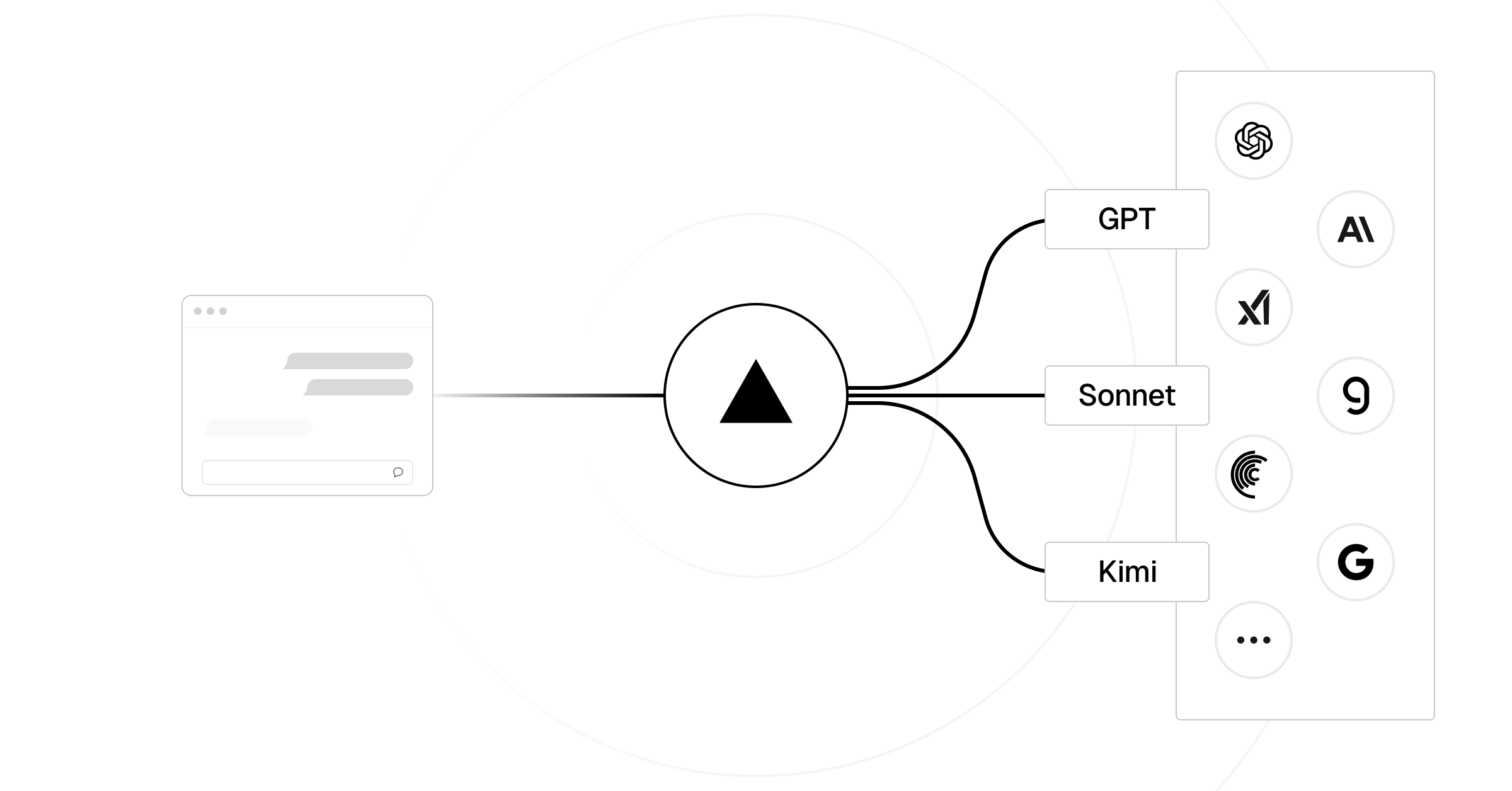

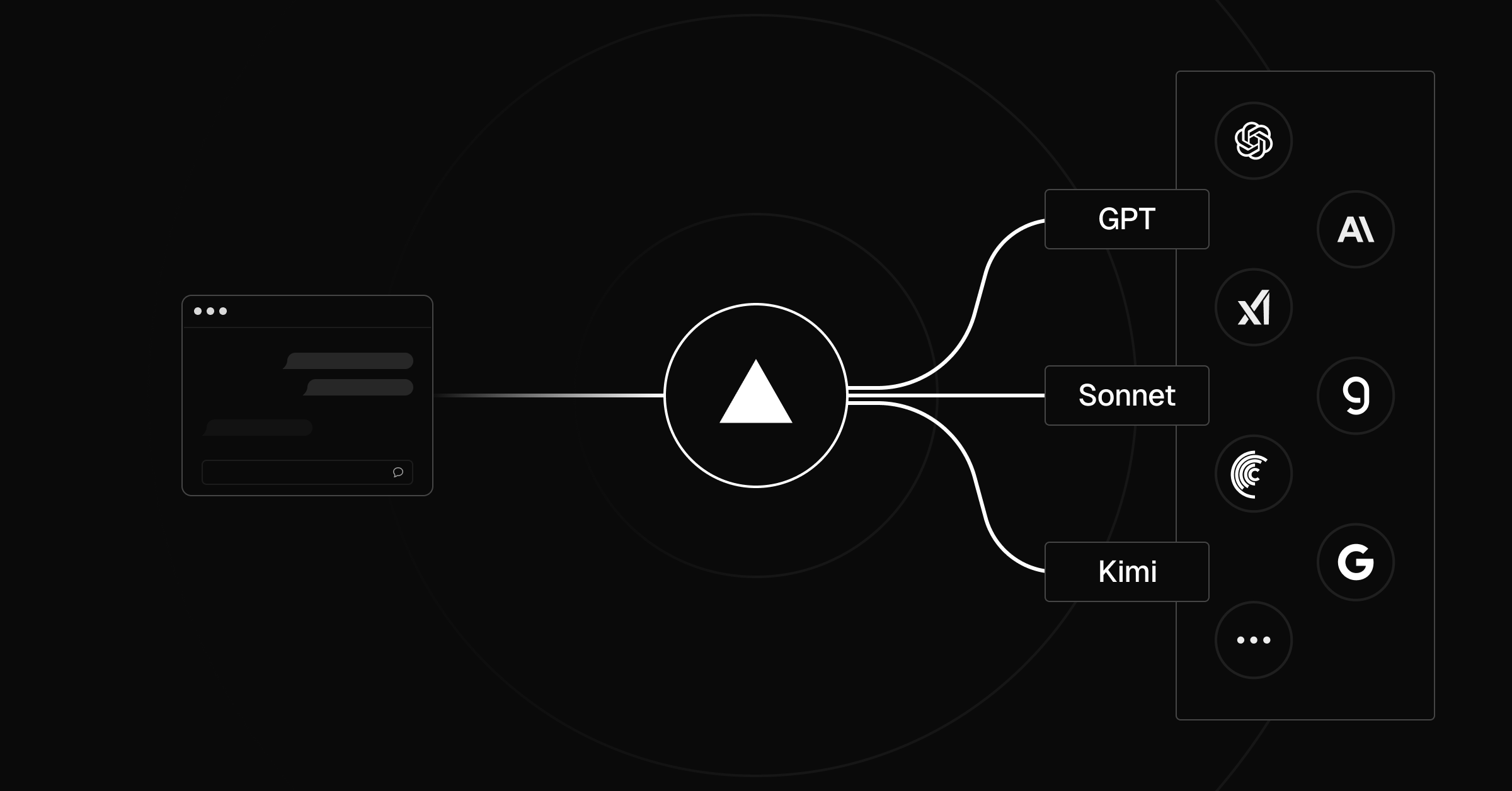

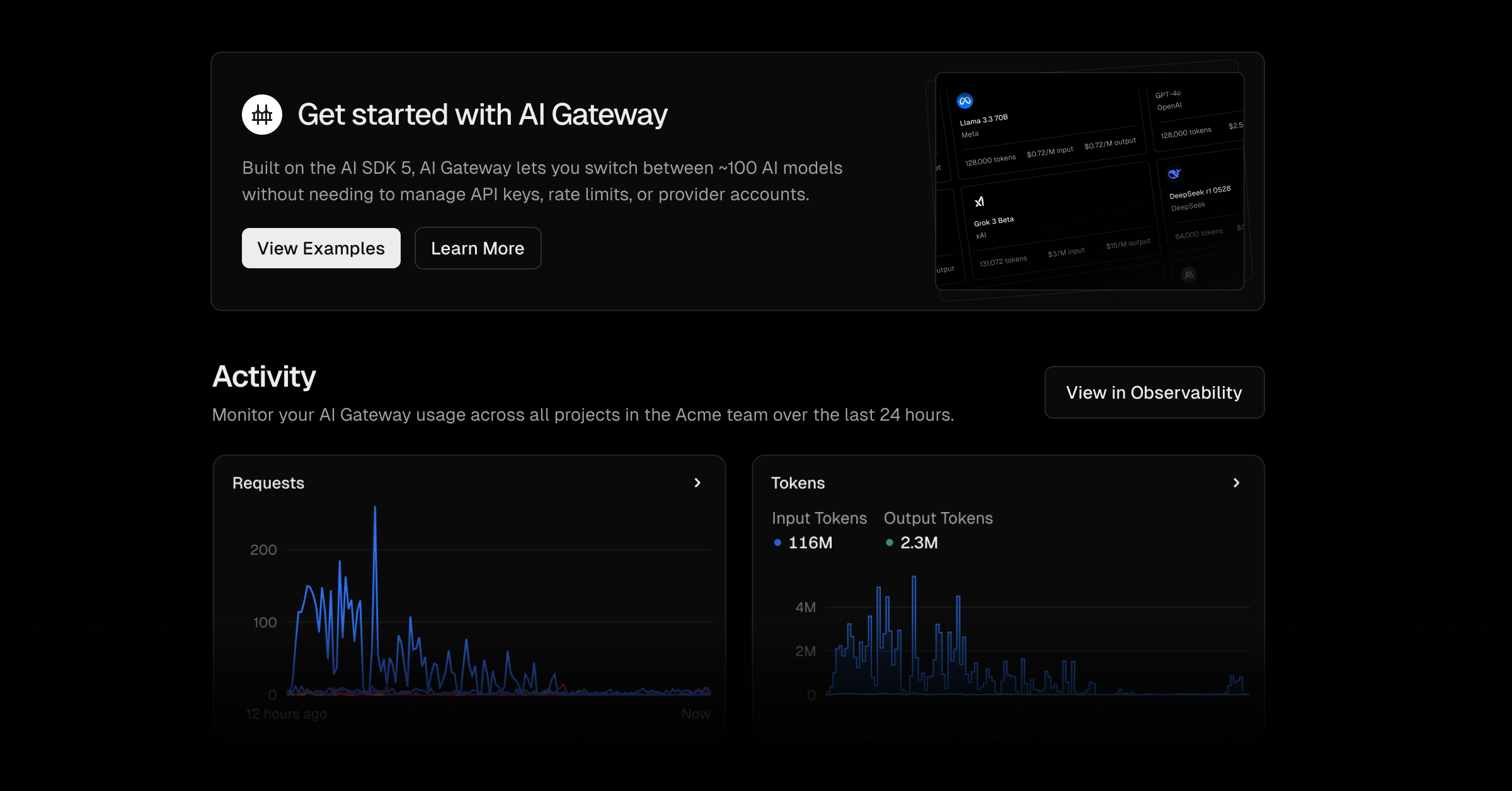

AI Gateway is now generally available, providing a single interface to access hundreds of AI models with transparent pricing and built-in observability.

AI Gateway, now generally available, ensures availability when a provider fails, avoiding low rate limits and providing consistent reliability for AI workloads.

llms.txt is an emerging standard for making content such as docs available for direct consumption by AIs. We’re proposing a convention to include such content directly in HTML responses.

Coxwave's journey to cutting deployment times by 85% and building AI-native products faster with Vercel

Connect Cursor to Vercel MCP to manage projects and deployments, analyze logs, search docs, and more

The GPT-5 family of models, released today, is now available through AI Gateway and is in production on our own v0.dev applications. Thanks to OpenAI, Vercel has been testing these models for a few weeks in v0, Next.js, AI SDK, and Vercel Sandbox.

Focus on your AI’s intelligence, not the UI scaffolding. AI Elements is now available as a new Vercel product to help frontend engineers build AI-driven interfaces in a fraction of the time.

Vercel now has an official hosted MCP server (aka Vercel MCP), which you can use to connect your favorite AI tools, such as Claude or VS Code, directly to Vercel.

You can now access Claude Opus 4.1, a new model released by Anthropic today, using Vercel's AI Gateway with no other provider accounts required.

You can now access gpt-oss by OpenAI, an open-weight reasoning model designed to push the open model frontier, using Vercel's AI Gateway with no other provider accounts required.

Vibe coding makes it possible for anyone to ship a viral app. But every line of AI-generated code is a potential vulnerability. Security cannot be an afterthought; it must be the foundation. Turn ideas into secure apps with v0.

Introducing type-safe chat, agentic loop control, new specification, tool enhancements, speech generation, and more.

You can now access GLM-4.5 and GLM-4.5 Air, new flagship models from Z.ai designed to unify frontier reasoning, coding, and agentic capabilities, using Vercel's AI Gateway with no other provider accounts required.

Model Context Protocol (MCP) is a new spec that helps standardize the way large language models (LLMs) access data and systems, extending what they can do beyond their training data.

You can now access Kimi K2 from Moonshot AI using Vercel's AI Gateway, with no Moonshot AI account required.

Learn how to build, extend, and automate AI-generated apps like BI tools and website builders with v0 Platform API

OpenAI-compatible API endpoints are now supported in AI Gateway, giving you access to hundreds of models with no code rewrites required.

Copy Vercel documentation pages as markdown, or open them in AI providers, such as v0, Claude, or ChatGPT.

Search 1M+ GitHub repositories from your AI agent using Grep's MCP server. Your agent can now reference coding patterns and solutions used in open source projects to solve problems.

We made it simple to build, preview, and ship any frontend, from marketing pages to dynamic apps, without managing infrastructure. Now we’re introducing the next layer: the Vercel AI Cloud.

Build background jobs and AI workflows with Inngest, now on the Vercel Marketplace. Native support for Next.js, preview environments, and branching.

Vercel Ship 2025 added new building blocks for an AI era: Fast, flexible, and secure by default. Lower costs with Fluid's Active CPU pricing, Rolling Releases for safer deployments, invisible CAPTCHA with BotID. See these and more in our recap.

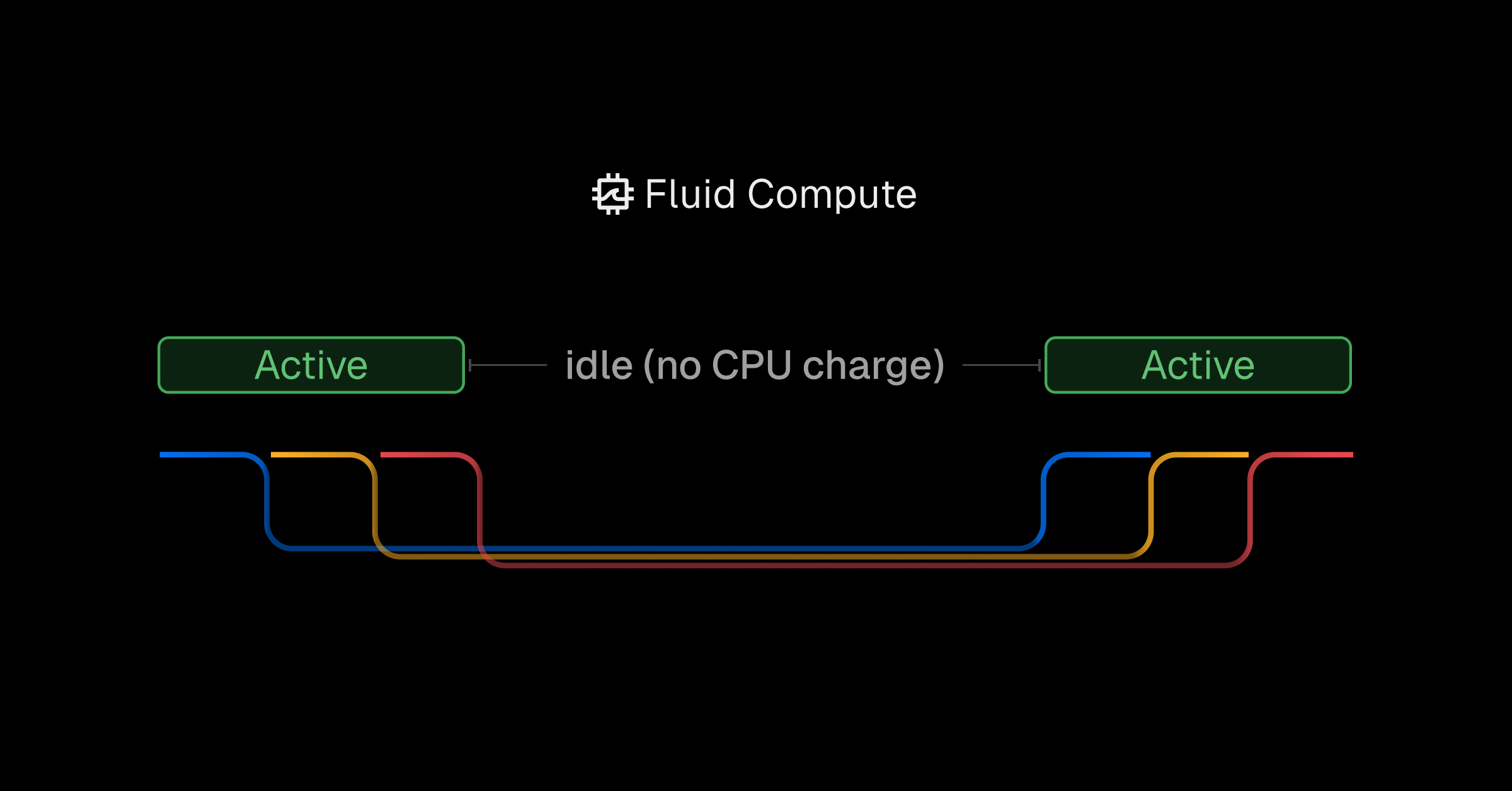

Pricing for Vercel Functions on Fluid compute has been reduced. All Fluid-based compute now uses an Active CPU pricing model, offering up to 90% savings in addition to the cost efficiency already delivered by Fluid's concurrency model.

AI Gateway is now in Beta, giving you a single endpoint to access a wide range of AI models across providers, with better uptime, faster responses, and no lock-in.

We’re welcoming Keith Messick as Chief Marketing Officer to support our growth, engage on more channels, and (as always) amplify the voice of the developer. Keith is a longtime enterprise CMO and comes to Vercel from database leader Redis.

MCP is becoming the standard for building AI model integrations. See how you can use Vercel's open-source MCP adapter to quickly build your own MCP server, like the teams at Zapier, Composio, and Solana.

AI is changing how content gets discovered. Now, SEO ranking ≠ LLM visibility. No one has all the answers, but here's how we're adapting our approach to SEO for LLMs and AI search.

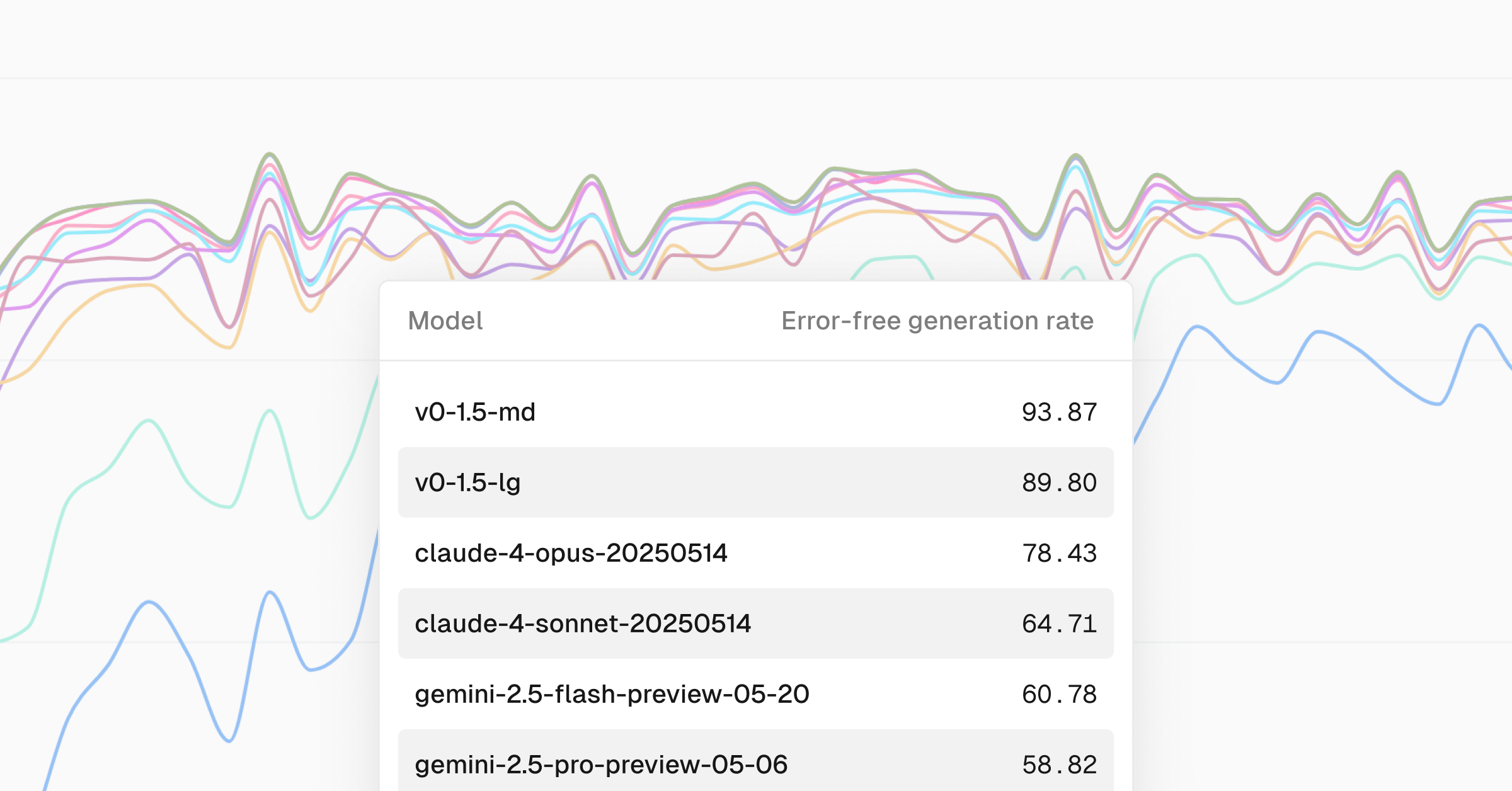

Try v0-1.5-md and v0-1.5-lg in beta on the v0 Models API, now offering two new model sizes for more flexible performance and accuracy. Ideal for everything from quick responses to deep analysis.

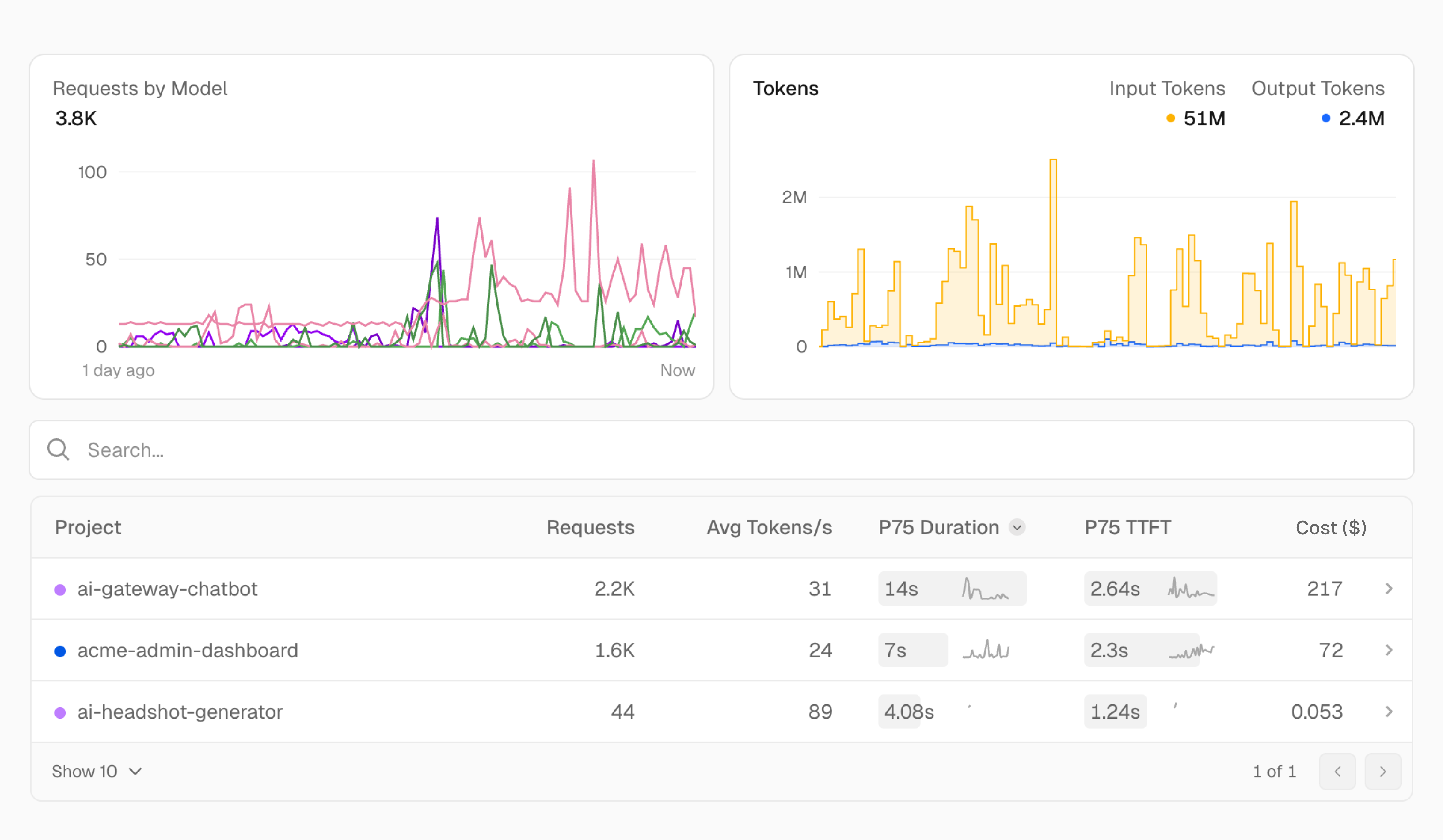

Vercel Observability now includes a dedicated AI section to surface metrics related to the AI Gateway.

Learn how to design secure AI agents that resist prompt injection attacks. Understand tool scoping, input validation, and output sanitization strategies to protect LLM-powered systems.

Learn how to build reliable, domain-specific AI agents by simulating tasks manually, structuring logic with code, and optimizing with real-world feedback. A clear, hands-on approach to practical automation.

Learn how v0's composite AI models combine RAG, frontier LLMs, and AutoFix to build accurate, up-to-date web app code with fewer errors and faster output.

Fluid, our newly announced compute model, eliminates wasted compute by maximizing resource efficiency. Instead of launching a new function for every request, it intelligently reuses available capacity, ensuring that compute isn’t sitting idle.

With the AI Gateway, build with any model instantly. No API keys, no configuration, no vendor lock-in.

Customers on all plans can now benefit from faster build cache restoration times. We've made architectural improvements to builds to help customers build faster.

Fern used Vercel and Next.js to achieve efficient multi-tenancy, faster development cycles, and 50-80% faster load times

Learn how Consensys modernized MetaMask.io using Vercel and Next.js—cutting deployment times, improving collaboration across teams, and unlocking dynamic content with serverless architecture.

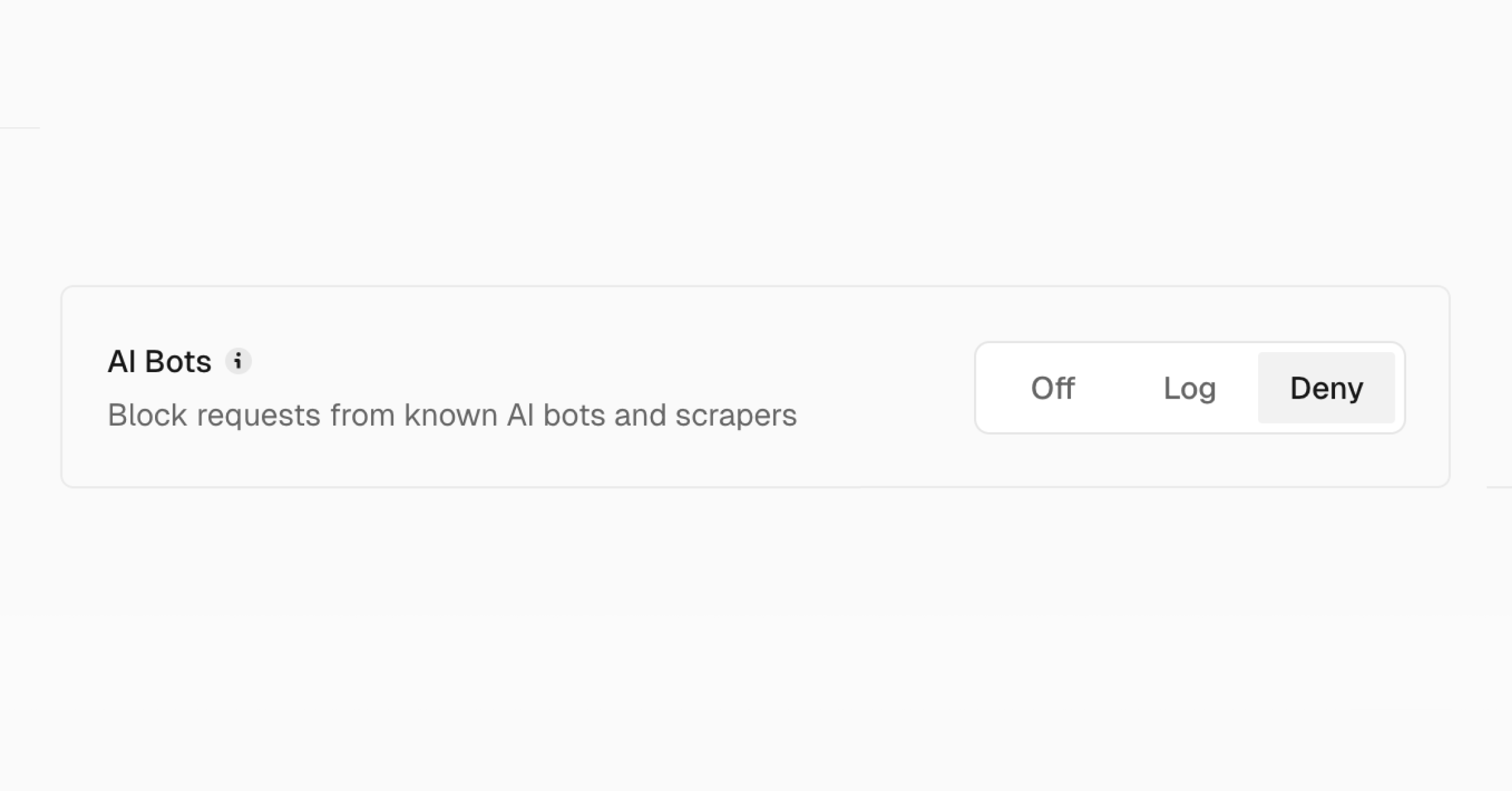

Protect your content from unauthorized AI crawlers with Vercel's new AI bot managed ruleset, offering one-click protection against known AI bots while automatically updating to catch new crawlers without any maintenance.

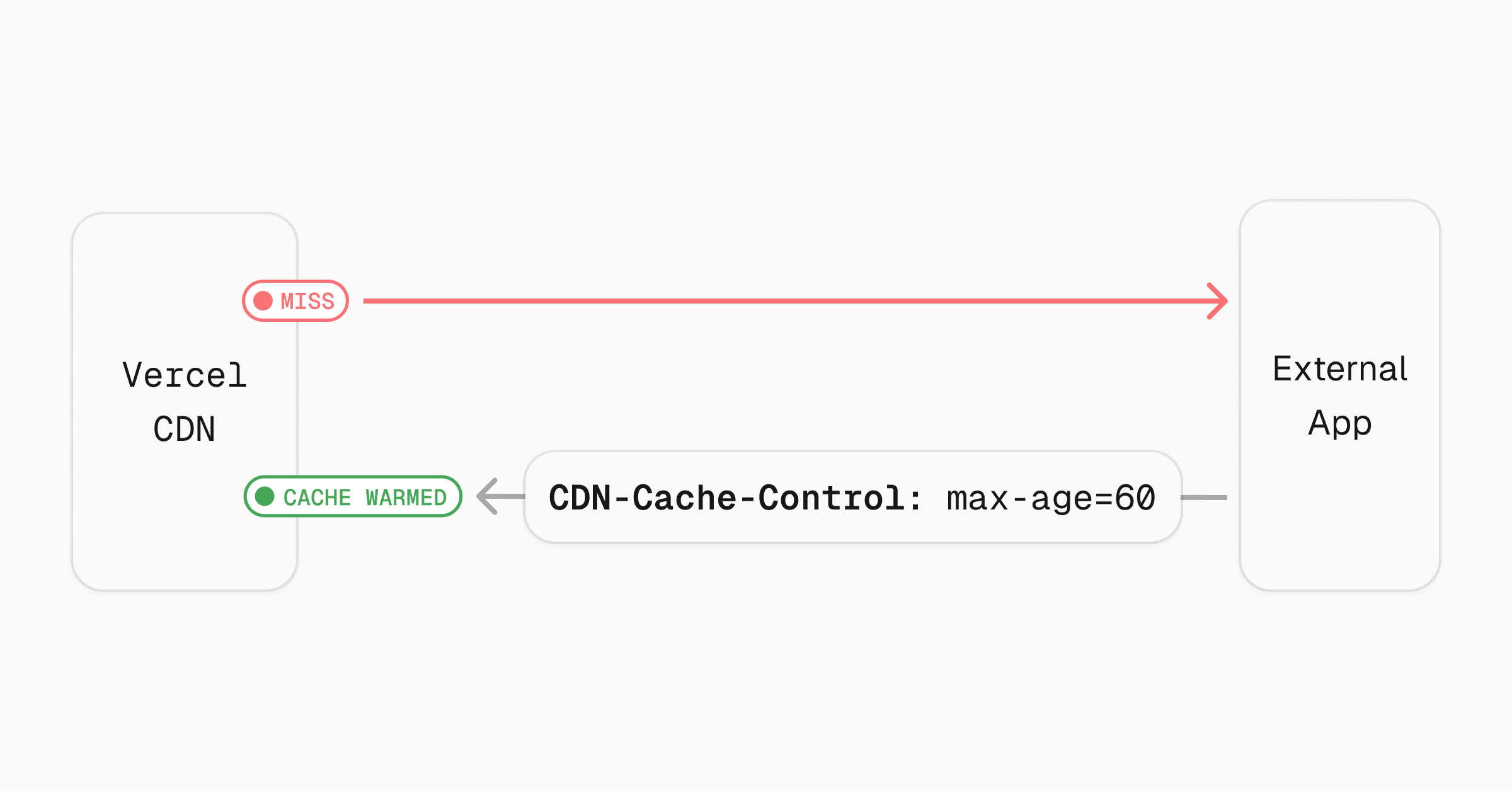

Vercel's CDN now supports CDN-Cache-Control headers for external backends, giving you simple, powerful caching control without any configuration changes.

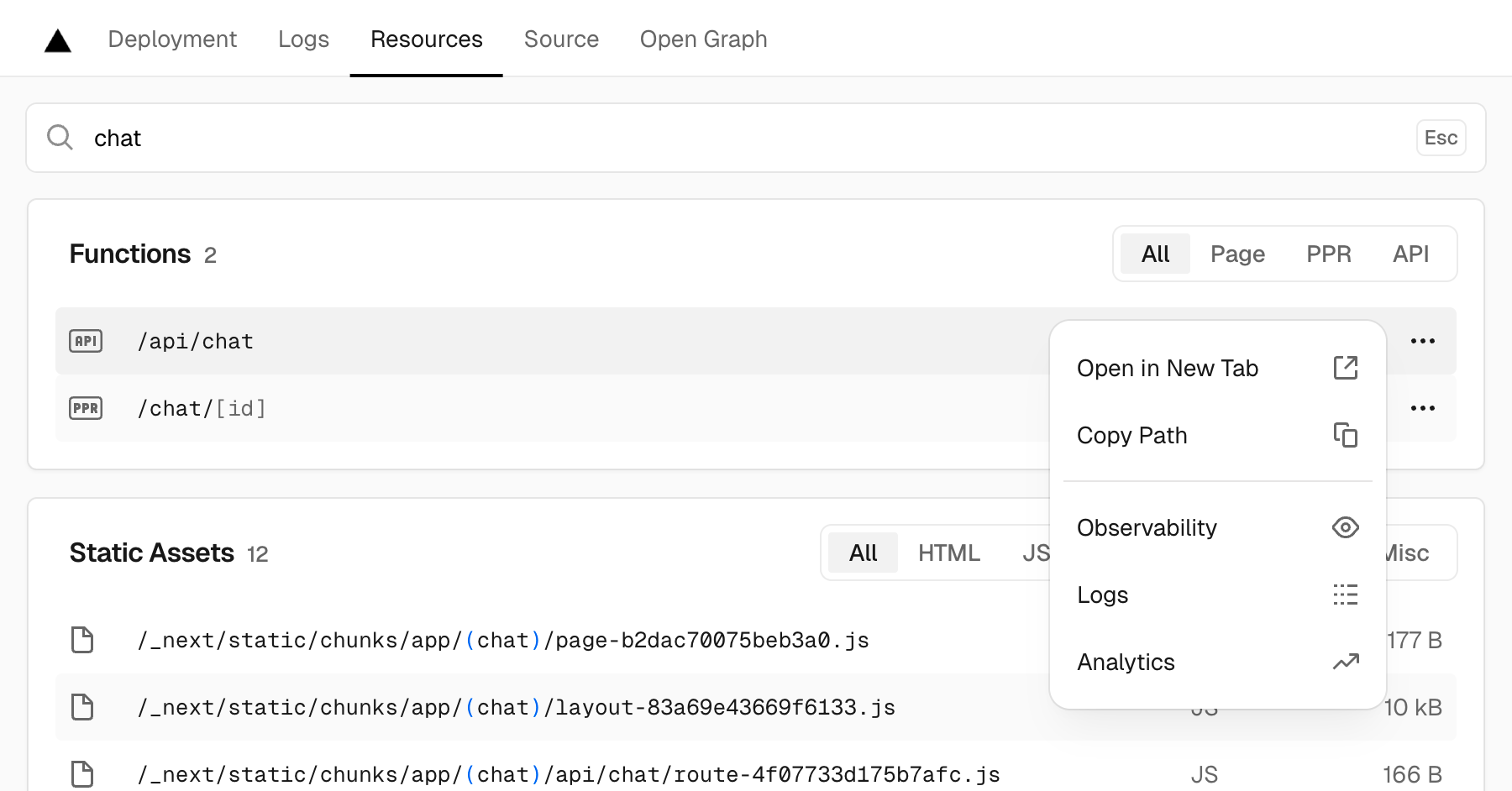

The Resources tab is replacing the Functions tab for deployments in the Vercel Dashboard. It displays and allows you to search and filter middleware, static assets, and functions.

More flexible pricing for v0 that scales with your usage and lets you pay on-demand through credits.

Announcing the spring 2025 cohort of Vercel's Open Source Program. Open source community frameworks, libraries, and tools we rely on every day to build the web,

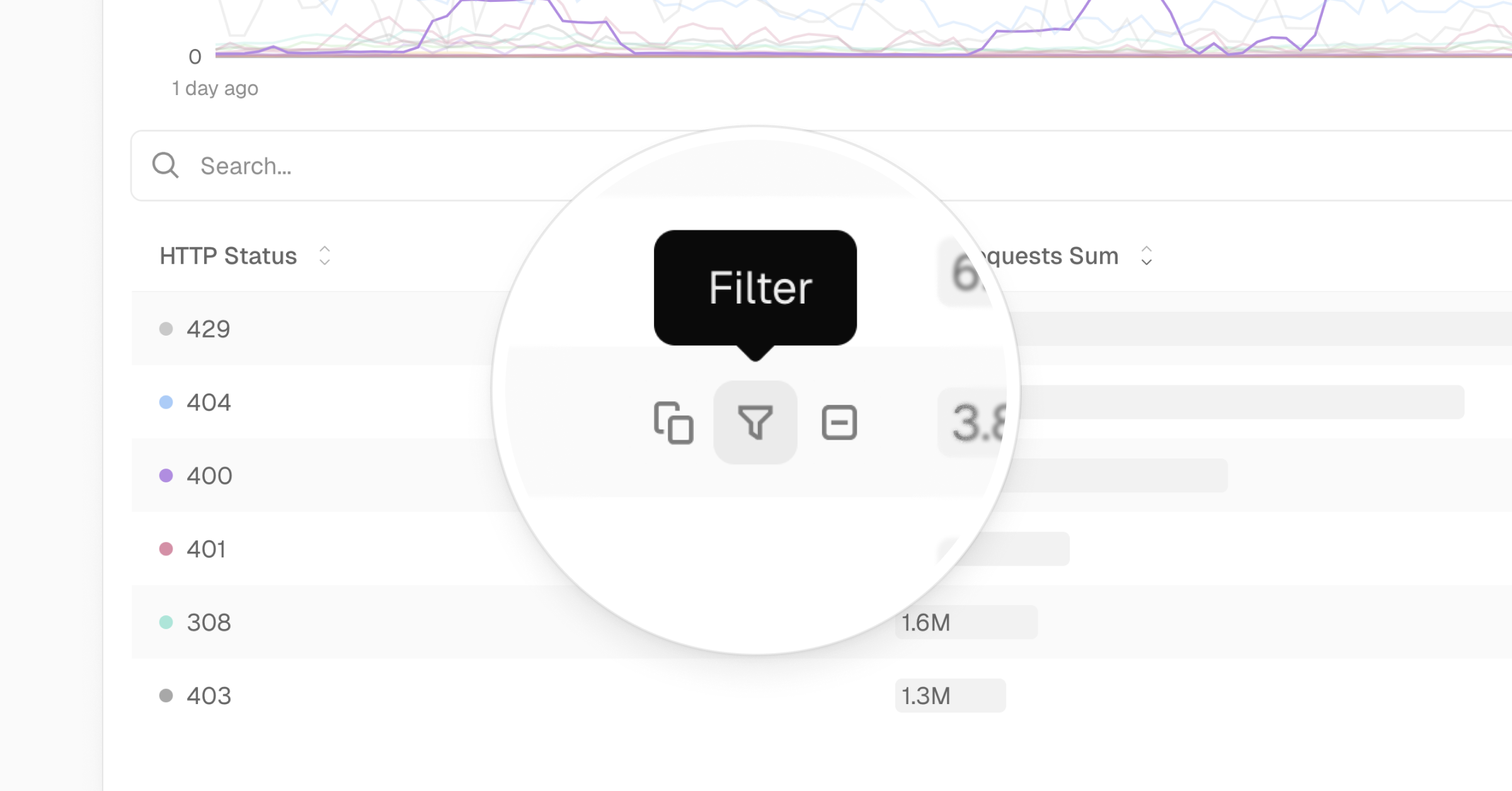

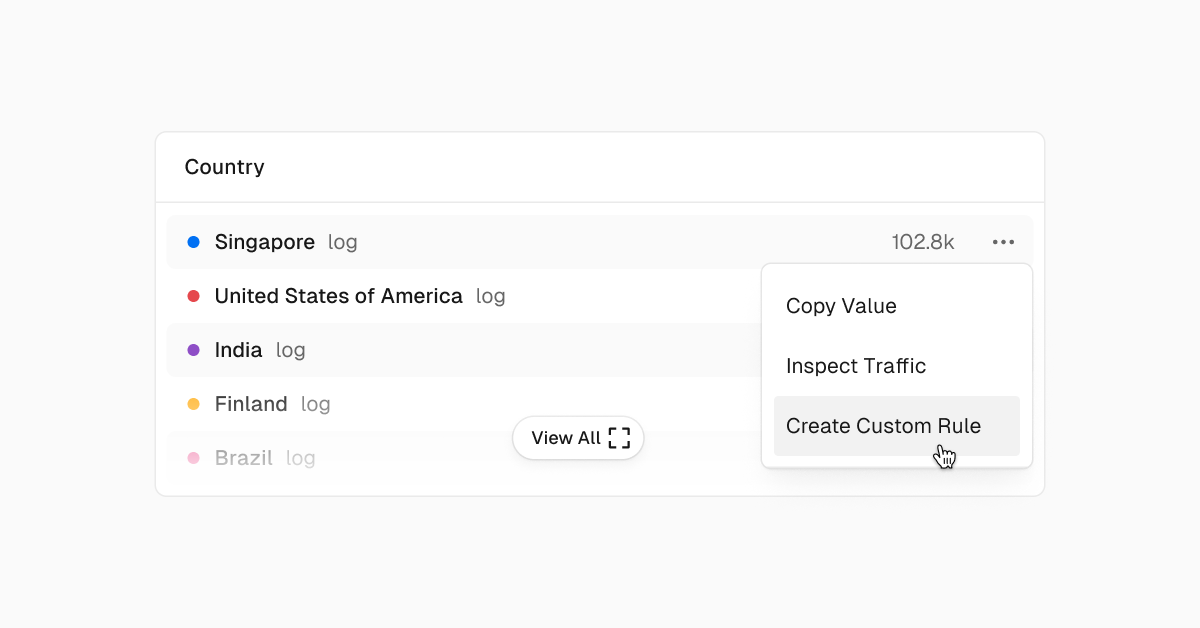

Copy, filter, or exclude any value in Observability queries with new one-click actions, making it faster to analyze incoming traffic.

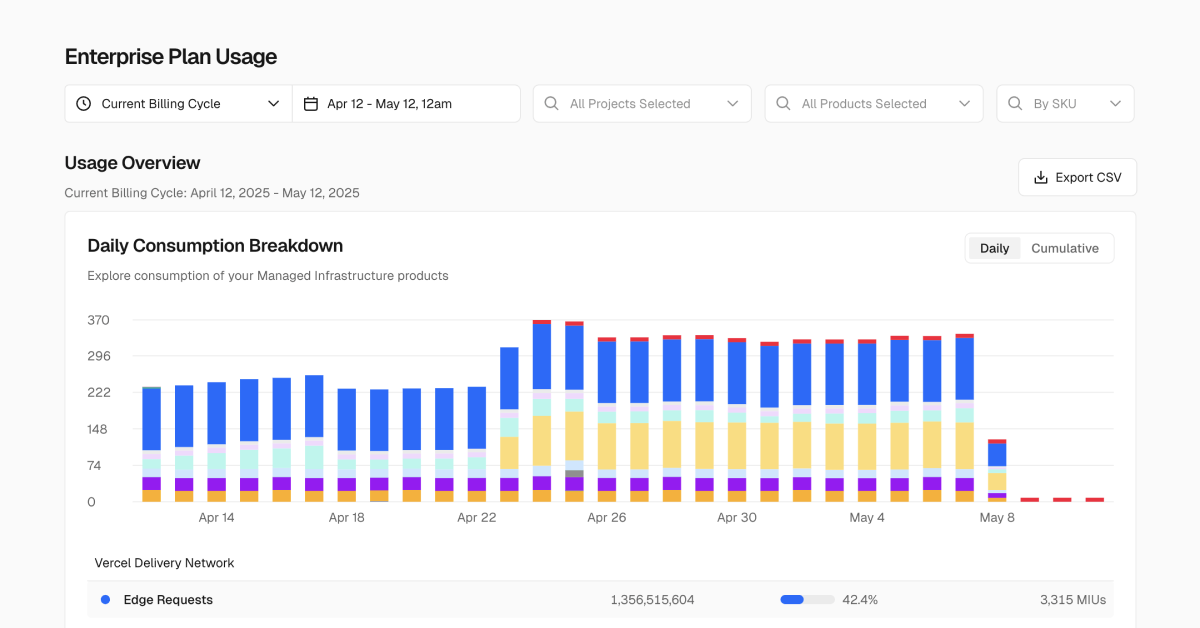

We’ve launched a new usage dashboard for Enterprise teams to analyze Vercel usage and costs with detailed breakdowns and export options.

Hobby and Pro teams on Vercel now have higher usage limits on Web Analytics, including reduced costs and smaller billable increments

Vercel’s CDN proxy read timeout has been increased to 120 seconds across all plans, enabling long-running AI workloads and reducing 504 gateway timeout errors. Available immediately at no cost, including on Hobby (free) plans.

Run MCP servers with Next.js or Node.js in your Vercel project, with first-class support for Anthropic's MCP SDK.

Find out which AI crawlers or search engines are scraping your content, then act on the findings with Vercel Firewall if needed.

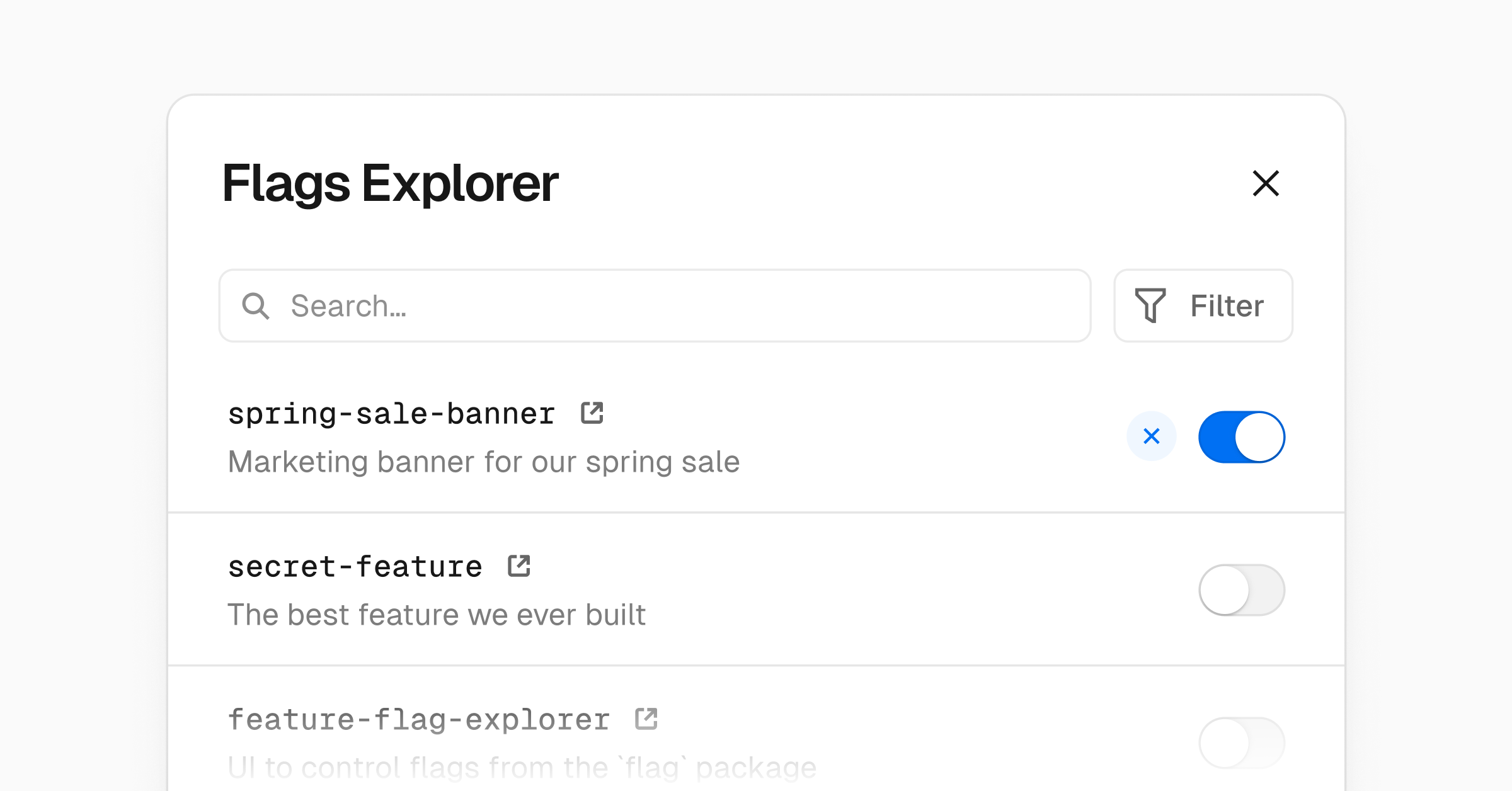

View and override feature flags in your browser with Flags Explorer – now generally available for all customers

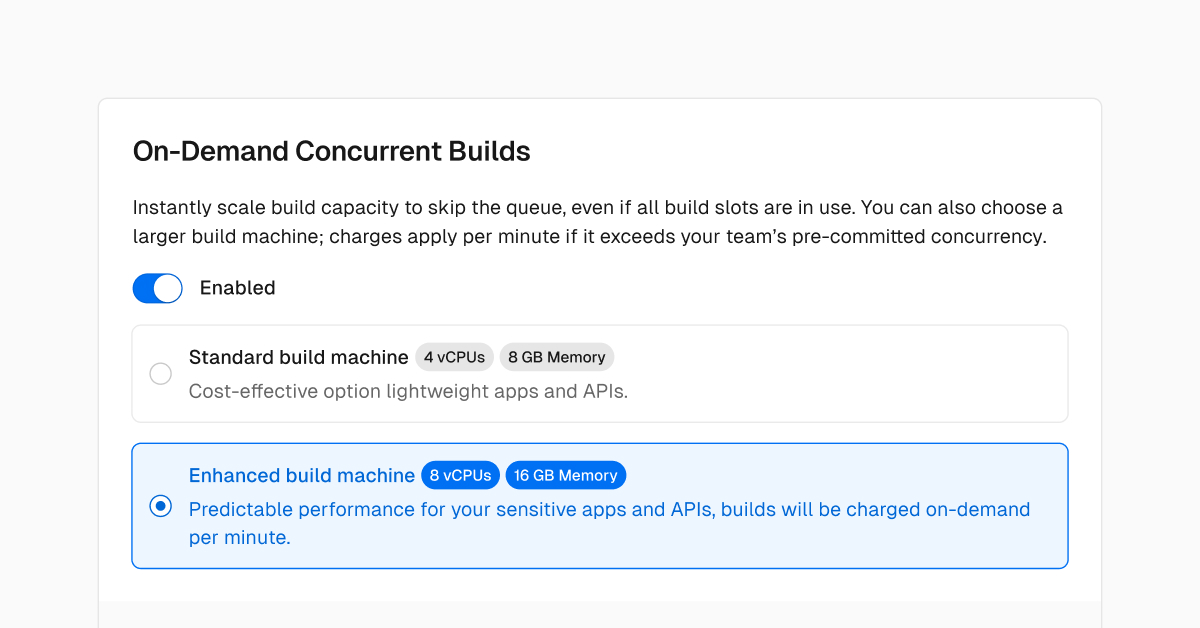

Enhanced Builds can now be enabled on demand per project for Pro and Enterprise teams. These builds offer double the compute. Customers already using Enhanced Builds are seeing build times drop by up to 25%, with no action required.

Introducing first-party integrations, the Flags Explorer, and improvements to the Flags SDK to improve feature flag workflow on Vercel.

A six-week program to help you scale your AI company, offering over $4M in credits from Vercel, v0, AWS, and leading AI platforms.

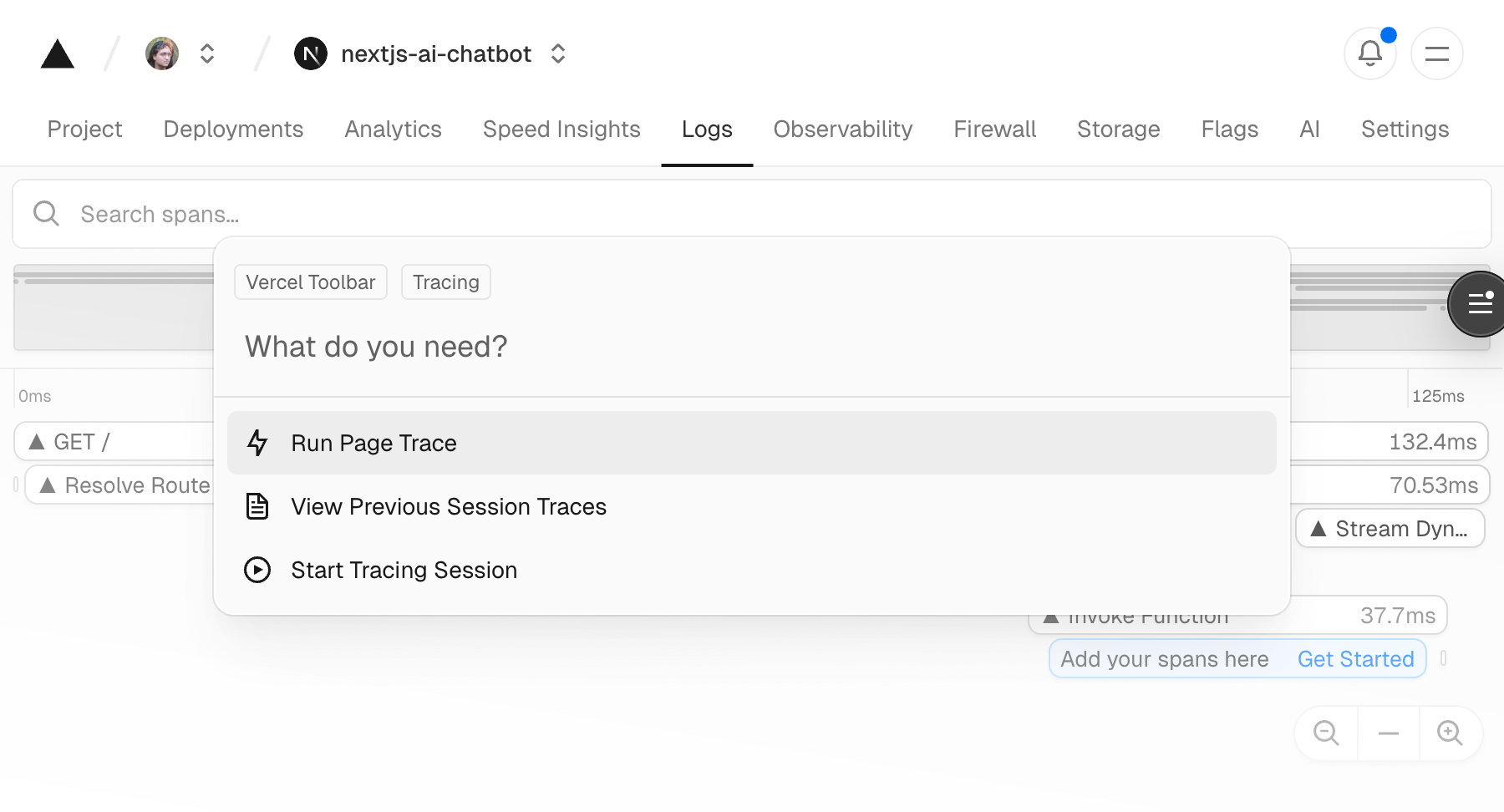

Vercel session tracing is now available on all plans, providing insight into steps Vercel's infrastructure took to serve a request alongside user code spans.

Vercel discovered and patched an information disclosure vulnerability in the Flags SDK (CVE-2025-46332). Users should upgrade to the patched version of the Flags SDK.

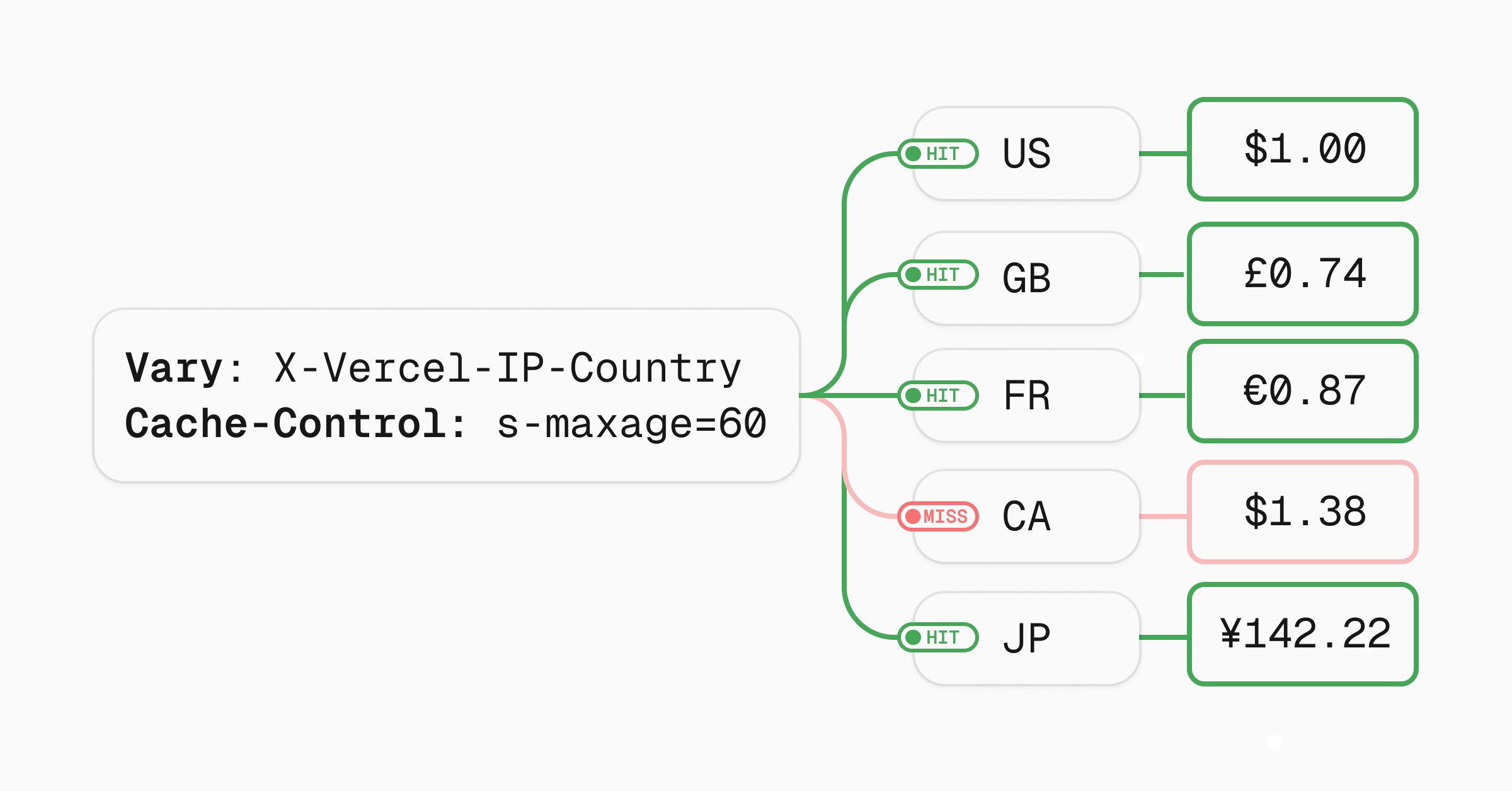

Vercel now supports the HTTP Vary header, enabling faster delivery of personalized, cached content based on location and language. Improve site performance and reduce compute automatically with Edge Network caching.
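
In HTTP caching, a response's `Vary` header names the request headers that select between cached variants of the same URL. The TypeScript sketch below shows one simplified way a cache could fold those headers into its key. It illustrates the general mechanism rather than Vercel's cache, `varyCacheKey` is a hypothetical helper, and real caches also normalize header values.

```typescript
// Builds a cache key from the URL plus the request headers named in the response's Vary header.
function varyCacheKey(
  url: string,
  varyHeader: string,
  requestHeaders: Record<string, string>,
): string {
  // Header names are case-insensitive, so compare them in lowercase.
  const names = varyHeader
    .split(",")
    .map((name) => name.trim().toLowerCase())
    .filter((name) => name.length > 0)
    .sort();

  const lowered: Record<string, string> = {};
  for (const name of Object.keys(requestHeaders)) {
    lowered[name.toLowerCase()] = requestHeaders[name];
  }

  // Headers not listed in Vary are ignored, so they never split the cache.
  const parts = names.map((name) => `${name}=${lowered[name] ?? ""}`);
  return [url, ...parts].join("|");
}
```

For a response with `Vary: Accept-Language`, a request to `/home` with `Accept-Language: de` maps to the key `/home|accept-language=de`, while a request with `Accept-Language: en` maps to a separate entry, so each language is cached independently.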

Understanding how v0 ensures everything you create is SEO-ready by default, without changing how you build.

Vercel customers can now create custom WAF rules directly from the chart displayed on the Firewall tab of the Vercel dashboard.

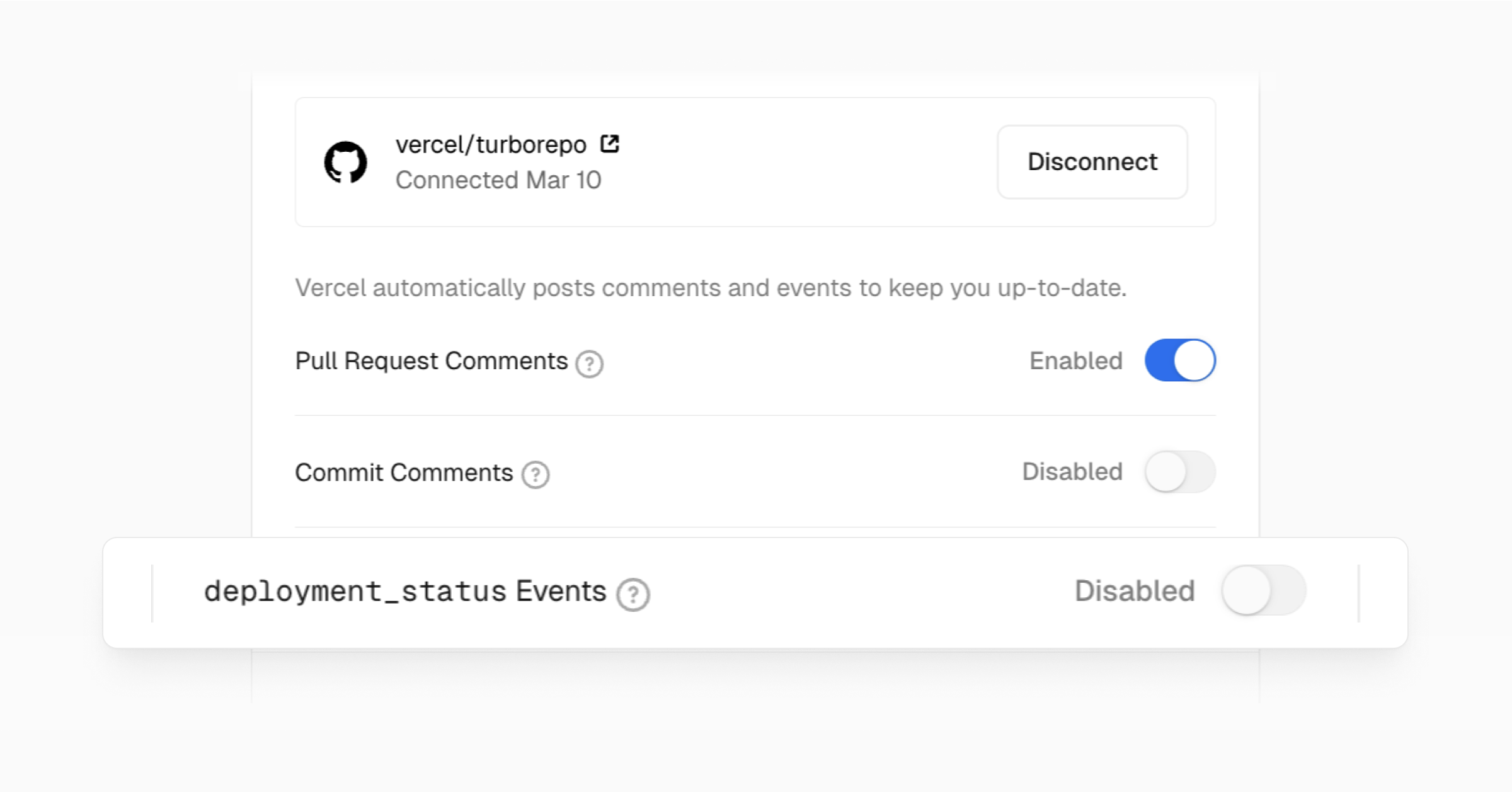

You can now disable webhook events that Vercel sends to GitHub Actions that create many notifications on GitHub pull requests.

The open web wins: A U.S. court ended Apple’s 27% fee on external payments, letting developers link freely and offer better, direct checkout experiences.

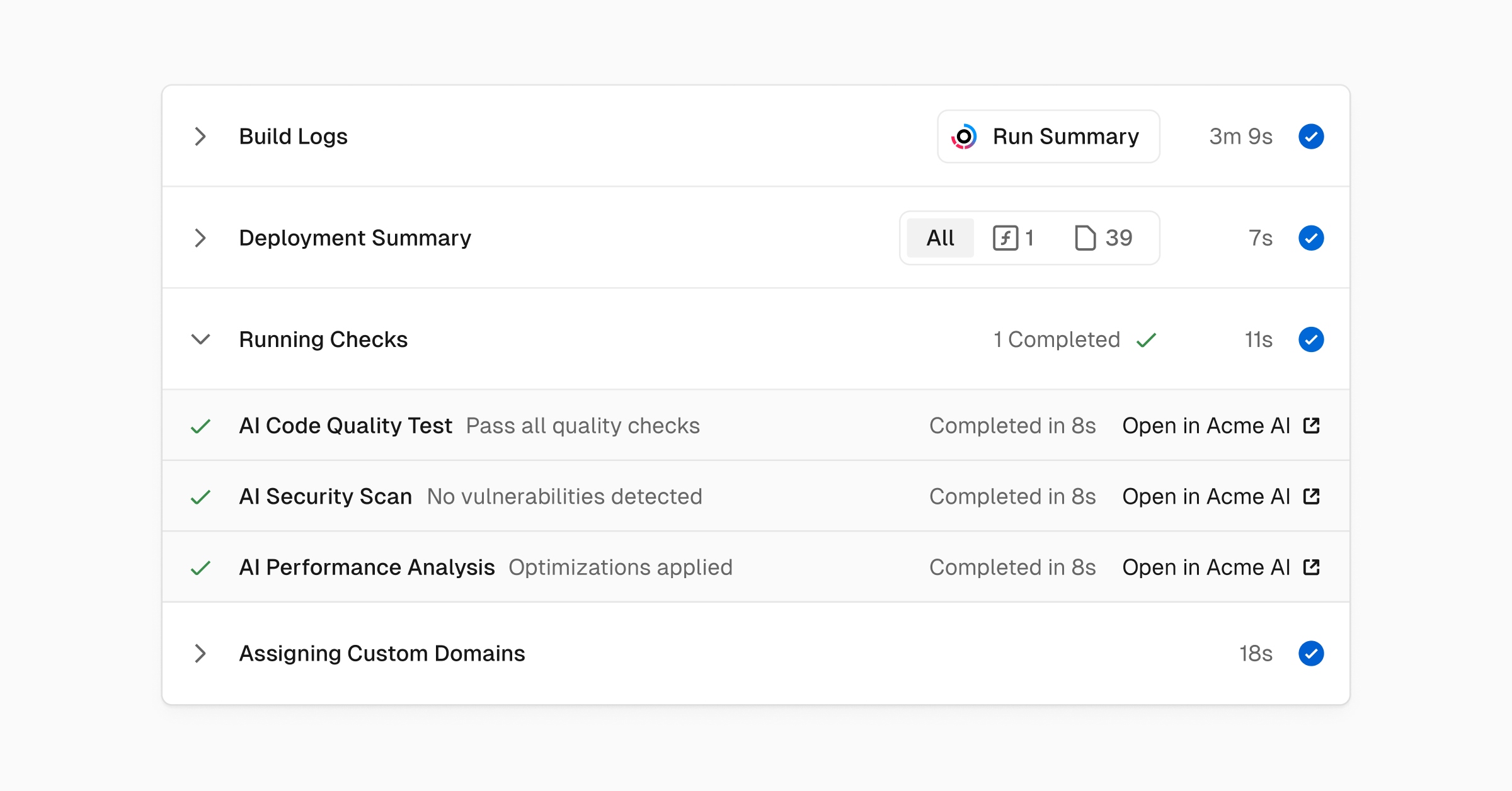

Vercel Marketplace now supports the Checks API, enabling providers to deliver automated post-deployment tests and surface results directly in the dashboard. Improve developer experience with seamless quality checks and actionable insights

Security researchers reviewing the Remix web framework have discovered two high-severity vulnerabilities in React Router. Vercel proactively deployed mitigation to the Vercel Firewall and Vercel customers are protected.

Pricing for on-demand concurrent builds has been reduced by more than 50%. Billable intervals have also been lowered from 10 minutes to 1 minute.
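
To see why a smaller billable interval matters, the TypeScript sketch below computes billed time under the assumption that usage is rounded up to the next whole interval. That rounding rule is an assumption for illustration, not a statement of Vercel's billing logic, and `billableMinutes` is a hypothetical helper.

```typescript
// Rounds a build's duration up to the next whole billing interval (assumed behavior).
function billableMinutes(durationMinutes: number, intervalMinutes: number): number {
  if (durationMinutes <= 0) return 0;
  return Math.ceil(durationMinutes / intervalMinutes) * intervalMinutes;
}
```

Under that assumption, a 12-minute build bills as 20 minutes with 10-minute intervals but as 12 minutes with 1-minute intervals, and a 3-minute build drops from 10 billed minutes to 3.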