Last updated: 2026/02/25 03:00

In this post, we show you how to build a comprehensive photo search system using the AWS Cloud Development Kit (AWS CDK) that integrates Amazon Rekognition for face and object detection, Amazon Neptune for relationship mapping, and Amazon Bedrock for AI-powered captioning.

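Helion is a high-level DSL that lets developers write high-performance ML kernels while delegating the complex work of optimization to an autotuning engine. The autotuner explores a vast, high-dimensional space of implementation choices to find the configuration that maximizes performance on the target hardware. Its drawback is long tuning times, which have been a source of user complaints. The newly developed LFBO pattern search algorithm applies machine learning techniques to make the autotuner more efficient, substantially reducing the number of candidate configurations it evaluates. On NVIDIA B200 kernels, it cuts autotuning time by 36.5% and improves kernel latency by 2.6% on average. AMD MI350 kernels see similar gains, and some kernels reach up to a 50% reduction in tuning time.
• Helion is a DSL for writing high-performance ML kernels that delegates optimization to an autotuning engine.
• The autotuner explores a high-dimensional space of implementation choices to find the configuration that maximizes performance.
• Autotuning takes a long time and draws frequent user complaints.
• The LFBO pattern search algorithm uses machine learning to make autotuning more efficient and reduces the number of candidate configurations evaluated.
• On NVIDIA B200 kernels, autotuning time drops by 36.5% and latency improves by 2.6%.
• On AMD MI350 kernels, autotuning time drops by 25.9% and latency improves by 1.7%.
• Some kernels reach up to a 50% reduction in tuning time.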
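To make the idea of pruning candidate configurations concrete, here is a minimal sketch of a plain pattern search over a discrete configuration space. It is not Helion's autotuner or the LFBO algorithm itself: the parameter names, the toy cost function, and the `keep` filter (standing in for a learned model that skips unpromising candidates) are all assumptions for illustration.

```python
def neighbors(space, config):
    """Yield configs that differ from `config` by one step along one axis."""
    for name, values in space.items():
        i = values.index(config[name])
        for j in (i - 1, i + 1):
            if 0 <= j < len(values):
                yield {**config, name: values[j]}


def pattern_search(space, cost, start, keep=None):
    """Greedy pattern search; returns (best config, best cost, evaluations).

    keep: optional filter(config) -> bool, a placeholder for a learned
          model that discards candidates before they are benchmarked.
    """
    best, best_cost, evaluated = dict(start), cost(start), 1
    while True:
        candidates = [c for c in neighbors(space, best) if keep is None or keep(c)]
        if not candidates:
            return best, best_cost, evaluated
        scored = [(cost(c), c) for c in candidates]
        evaluated += len(scored)
        top_cost, top = min(scored, key=lambda pair: pair[0])
        if top_cost >= best_cost:  # no neighbor improves on the incumbent
            return best, best_cost, evaluated
        best, best_cost = top, top_cost


# Hypothetical tuning space and a toy "latency" with its optimum at (64, 4).
space = {"block_size": [16, 32, 64, 128], "num_warps": [1, 2, 4, 8]}
toy_cost = lambda c: abs(c["block_size"] - 64) / 16 + abs(c["num_warps"] - 4)
start = {"block_size": 16, "num_warps": 1}

best, best_cost, plain_evals = pattern_search(space, toy_cost, start)
_, _, filtered_evals = pattern_search(
    space, toy_cost, start, keep=lambda c: c["block_size"] >= 32
)
```

In a real autotuner each call to `cost` is an expensive benchmark run, so every candidate the filter rejects saves tuning time, provided the filter rarely rejects configurations that would have won.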

In this post, we demonstrate how to train CodeFu-7B, a specialized 7-billion-parameter model for competitive programming, using Group Relative Policy Optimization (GRPO) with veRL within a distributed Ray cluster managed by SageMaker training jobs. veRL is a flexible and efficient training library for large language models (LLMs) that enables straightforward extension of diverse RL algorithms and seamless integration with existing LLM infrastructure. We walk through the complete implementation, covering data preparation, distributed training setup, and comprehensive observability, showcasing how this unified approach delivers both computational scale and developer experience for sophisticated RL training workloads.
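As a rough illustration of the "group relative" part of GRPO, the sketch below normalizes each sampled response's reward against the mean and standard deviation of its own group. It is not veRL code; the reward values, the use of the population standard deviation, and the `eps` term are assumptions made for the example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Score each reward relative to the other samples for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps avoids division by zero when every sample received the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled solutions to one programming problem
# (1.0 = all tests passed, 0.0 = failed).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Passing solutions end up with positive advantages and failing ones with negative advantages, so no separate value network is needed to provide a baseline.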

This post explores the implementation of Dottxt’s Outlines framework as a practical approach to implementing structured outputs using AWS Marketplace in Amazon SageMaker.

In this post, we are excited to announce the availability of Global CRIS for customers in Thailand, Malaysia, Singapore, Indonesia, and Taiwan. We walk through the technical implementation steps and cover quota management best practices to help you maximize the value of your AI inference deployments. We also provide guidance on best practices for production deployments.

We’re excited to announce the availability of Anthropic’s Claude Opus 4.6, Claude Sonnet 4.6, Claude Opus 4.5, Claude Sonnet 4.5, and Claude Haiku 4.5 through Amazon Bedrock global cross-Region inference for customers operating in the Middle East. In this post, we guide you through the capabilities of each Anthropic Claude model variant, the key advantages of global cross-Region inference including improved resilience, real-world use cases you can implement, and a code example to help you start building generative AI applications immediately.

Some solid advice from a couple of Frontend Masters courses made for a fast, secure, and ready to scale deployment system.

You can now access OpenAI's newest model GPT 5.3 Codex via Vercel's AI Gateway with no other provider accounts required.

A Blog post by NVIDIA on Hugging Face

An open-source TUI coding agent with observational memory that compresses context without losing it.

In this post, we examine how Bedrock Robotics tackles this challenge. By joining the AWS Physical AI Fellowship, the startup partnered with the AWS Generative AI Innovation Center to apply vision-language models that analyze construction video footage, extract operational details, and generate labeled training datasets at scale, to improve data preparation for autonomous construction equipment.

In this post, we explore how Sonrai, a life sciences AI company, partnered with AWS to build a robust MLOps framework using Amazon SageMaker AI that addresses these challenges while maintaining the traceability and reproducibility required in regulated environments.

In this blog post, we demonstrate how Hexagon collaborated with Amazon Web Services to scale their AI model production by pretraining state-of-the-art segmentation models, using the model training infrastructure of Amazon SageMaker HyperPod.

Hugging Face smolagents is an open source Python library designed to make it straightforward to build and run agents using a few lines of code. We will show you how to build an agentic AI solution by integrating Hugging Face smolagents with Amazon Web Services (AWS) managed services. You'll learn how to deploy a healthcare AI agent that demonstrates multi-model deployment options, vector-enhanced knowledge retrieval, and clinical decision support capabilities.

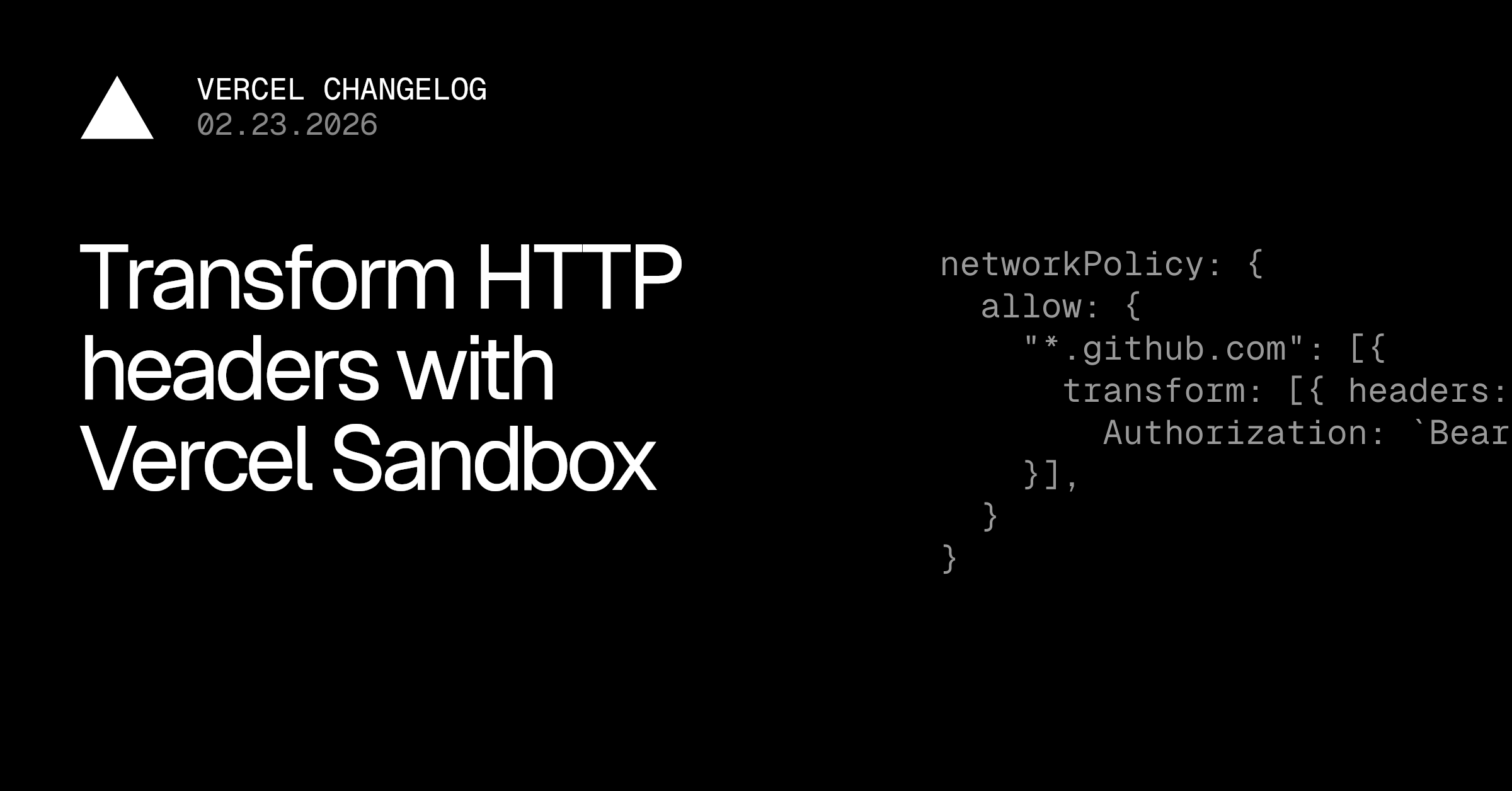

Sandbox network policies now support HTTP header injection to prevent secrets exfiltration by untrusted code

Chat SDK is now open source and available in public beta. It's a TypeScript library for building chat bots that work across Slack, Microsoft Teams, Google Chat, Discord, GitHub, and Linear — from a single codebase.

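This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI for automatic code generation and completion. Specifically, it suggests appropriate code based on the comments or code fragments a developer enters. It is designed to integrate easily into existing development environments and is recommended in particular for JavaScript and TypeScript projects. The expected result is higher development efficiency and fewer errors.
• Introduces an AI-powered automatic code generation tool
• Suggests code based on the comments a developer enters
• Integrates easily into JavaScript and TypeScript projects
• Expected to improve development efficiency and reduce errors
• Proposes a new development approach built on generative AI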

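This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI to generate and fix code automatically. Specifically, when a developer describes the intended functionality in natural language, the tool generates code from that description. It can also suggest improvements to existing code. The expected result is higher development efficiency and fewer errors. In addition, the tool supports many programming languages and can be used across a wide range of development environments.
• Introduces a new development tool built on AI technology
• Automatic code generation from natural-language input
• Improvement suggestions for existing code
• Expected gains in development efficiency and fewer errors
• Supports many programming languages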

A key part of Agent Builder is its memory system. In this article we cover our rationale for prioritizing a memory system, technical details of how we built it, learnings from building the memory system, what the memory system enables, and discuss future work.

You can't build reliable agents without understanding how they reason, and you can't validate improvements without systematic evaluation.

In 2025, Amazon SageMaker AI saw dramatic improvements to core infrastructure offerings along four dimensions: capacity, price performance, observability, and usability. In this series of posts, we discuss these various improvements and their benefits. In Part 1, we discuss capacity improvements with the launch of Flexible Training Plans. We also describe improvements to price performance for inference workloads. In Part 2, we discuss enhancements made to observability, model customization, and model hosting.

In 2025, Amazon SageMaker AI made several improvements designed to help you train, tune, and host generative AI workloads. In Part 1 of this series, we discussed Flexible Training Plans and price performance improvements made to inference components. In this post, we discuss enhancements made to observability, model customization, and model hosting. These improvements facilitate a whole new class of customer use cases to be hosted on SageMaker AI.

In this post, you’ll use a six-step checklist to build a new MCP server or validate and adjust an existing MCP server for Amazon Quick integration. The Amazon Quick User Guide describes the MCP client behavior and constraints. This post is a how-to guide covering the implementation details that third-party (3P) partners need to integrate with Amazon Quick through MCP.

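This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI for automatic code generation and completion. Specifically, it suggests appropriate code based on the comments or function names a developer enters. The tool supports many programming languages and is recommended in particular for JavaScript and Python. Developers can expect higher productivity and fewer errors. The user interface is also designed to be intuitive and easy to use.
• Introduces a new development tool built on AI technology
• Provides automatic code generation and completion
• Makes suggestions based on comments and function names the developer enters
• Supports many programming languages, with JavaScript and Python especially recommended
• Expected to raise productivity and reduce errors
• Intuitive, easy-to-use user interface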

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

By Jacob Talbot. Agent Builder gets better the more you use it because it remembers your feedback. Every correction you make, preference you share, and approach that works well is something that your agent can hold onto and apply the next time. Memory is one of the things that makes

In this post, we explain how you can use the Flyte Python SDK to orchestrate and scale AI/ML workflows. We explore how the Union.ai 2.0 system enables deployment of Flyte on Amazon Elastic Kubernetes Service (Amazon EKS), integrating seamlessly with AWS services like Amazon Simple Storage Service (Amazon S3), Amazon Aurora, AWS Identity and Access Management (IAM), and Amazon CloudWatch. We explore the solution through an AI workflow example, using the new Amazon S3 Vectors service.

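This article discusses new research exploring how AI affects trust online. Microsoft's report, "Media Integrity and Authentication: Status, Directions, and Futures," assesses the limits of current authentication methods and looks for ways to strengthen them. The authors conclude that no single solution can prevent digital deception, and they propose techniques that convey information such as a piece of media's origin, the tools used to create it, and whether it has been altered (provenance, watermarking, and digital fingerprinting, among others). The report lays out a path toward more trustworthy media and stresses the importance of recognizing higher-quality content indicators, especially as deepfakes proliferate. Microsoft has pioneered media provenance technology since 2019 and in 2021 co-founded C2PA to standardize media integrity.
• AI-generated deepfakes threaten trust in news, elections, and brands.
• Microsoft's report assesses the limits of current authentication methods and explores ways to strengthen them.
• It concludes that no single solution can prevent digital deception.
• It proposes techniques such as provenance, watermarking, and digital fingerprinting.
• It sets out a direction for "high-confidence authentication" to make media more trustworthy.
• As deepfakes proliferate, recognizing higher-quality content indicators matters.
• Microsoft has been developing media provenance technology since 2019.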

In this blog post, we will guide you through establishing data source connectivity between Amazon Quick Sight and Snowflake through secure key pair authentication.

3.1 Pro is designed for tasks where a simple answer isn’t enough.

Learn how we empower organizations to operate confidently, knowing their workloads run on a platform designed to meet stringent expectations.

Windsurf is the world's most advanced AI coding assistant for developers and enterprises. Windsurf Editor — the first AI-native IDE that keeps developers in flow.

The more effort you put into what you put in, the higher the quality you're going to get out.

You can now access Google's newest model, Gemini 3.1 Pro Preview via Vercel's AI Gateway with no other provider accounts required.

Build video generation into your apps with AI Gateway. Create product videos, dynamic content, and marketing assets at scale.

Generate AI videos with xAI Grok Imagine via the AI SDK. Text-to-video, image-to-video, and video editing with natural audio and dialogue.

Generate stylized AI videos with Alibaba Wan models via the Vercel AI Gateway. Text-to-video, image-to-video, and unique style transfer (R2V) to transform existing footage into anime, watercolor, and more.

Generate AI videos with Kling models via the AI Gateway. Text-to-video with audio, image-to-video with first/last frame control, and motion transfer. 7 models including v3.0, v2.6, and turbo variants.

Generate photorealistic AI videos with the Veo models via Vercel AI Gateway. Text-to-video and image-to-video with native audio generation. Up to 4K resolution with fast mode options.

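This article describes new development tools built on the latest AI technology. It highlights AI-based code generation and debugging assistance and presents concrete techniques that help developers work efficiently. It also touches on how these tools improve the development process and reduce errors. Implementation details and environment requirements are provided as well, with clear steps toward adoption.
• Introduces new development tools built on AI technology
• Highlights code generation and debugging assistance
• Contributes to a better development process and fewer errors
• Provides details on implementation and the required environment
• Lays out clear steps toward adoption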

CEO Sundar Pichai’s remarks at the opening ceremony of the AI Impact Summit 2026

Define test cases, run experiments, and track response quality for every change you make.

In this post, we demonstrate how to build unified intelligence systems using Amazon Bedrock AgentCore through our real-world implementation of the Customer Agent and Knowledge Engine (CAKE).

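This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI for automatic code generation and completion. Specifically, it suggests appropriate code based on the comments or code fragments a developer enters. It is designed to integrate easily into existing development environments and is recommended in particular for JavaScript and TypeScript projects. The expected result is higher development efficiency and fewer errors.
• Introduces a new development tool built on AI technology
• Automatic code generation and completion using generative AI
• Suggestions based on the comments and code a developer enters
• Easy integration into existing development environments
• Recommended for JavaScript and TypeScript projects
• Expected gains in development efficiency and fewer errors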

In this post, we present a comprehensive evaluation framework for agentic AI systems at Amazon. It addresses the complexity of these applications through two core components: a generic evaluation workflow that standardizes assessment procedures across diverse agent implementations, and an agent evaluation library that provides systematic measurements and metrics in Amazon Bedrock AgentCore Evaluations, along with evaluation approaches and metrics specific to Amazon use cases.

Discover how insurers use agentic AI to modernize claims, underwriting, and risk while improving efficiency and customer experience.

A Blog post by IBM Research on Hugging Face

Lyria 3 is now available in the Gemini app. Create custom, high-quality 30-second tracks from text and images.

Discover how agentic AI is transforming revenue growth management with faster decision intelligence grounded in governance and financial truth.

Today, we're expanding what you can do with LangSmith Agent Builder. It’s a big update built around a simple idea: working with an agent should feel like working with a teammate. We rebuilt Agent Builder around this idea. There is now an always available agent ("Chat") that you can

An overview of Google’s new global partnerships and funding announcements at the AI Impact Summit in India.

A look at the partnerships and investments Google announced at the AI Impact Summit 2026.

Learn how monday Service developed an eval-driven development framework for their customer-facing service agents.

A look at our 2026 Responsible AI Progress Report.

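This article proposes a synthetic data generation system that enables AI systems to visually trace routes on maps. Today's multimodal large language models (MLLMs) excel at recognizing objects in images but struggle to understand the geometric and topological relationships on a map. To address this, the authors introduce MapTrace, a new task, dataset, and synthetic data generation pipeline that aims to teach MLLMs the fundamental skill of tracing paths on maps. The authors have open-sourced 2 million question-answer pairs generated with the Gemini 2.5 Pro and Imagen-4 models to encourage further exploration by the research community.
• MLLMs struggle to trace routes on maps correctly.
• Data for learning the physical rules of maps is scarce.
• Manual path annotation is difficult, which makes collecting large datasets practically infeasible.
• The authors designed a synthetic data generation pipeline that automatically produces diverse, high-quality maps.
• The generated data follows the intended routes and avoids non-traversable regions.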

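This article describes a new development tool built on the latest AI technology. With this tool, AI provides real-time support as developers write code, enabling efficient coding. Specifically, it includes features in which the AI suggests code and detects errors. The user interface is intuitive and easy to use, designed so that developers can start using it right away. The tool also integrates easily with existing development environments, which lowers the barrier to adoption. Ultimately, it is expected to improve developer productivity.
• Introduces a new development tool built on AI technology
• Real-time code suggestions and error detection
• Intuitive, easy-to-use user interface
• Easy integration with existing development environments
• Expected to improve developer productivity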

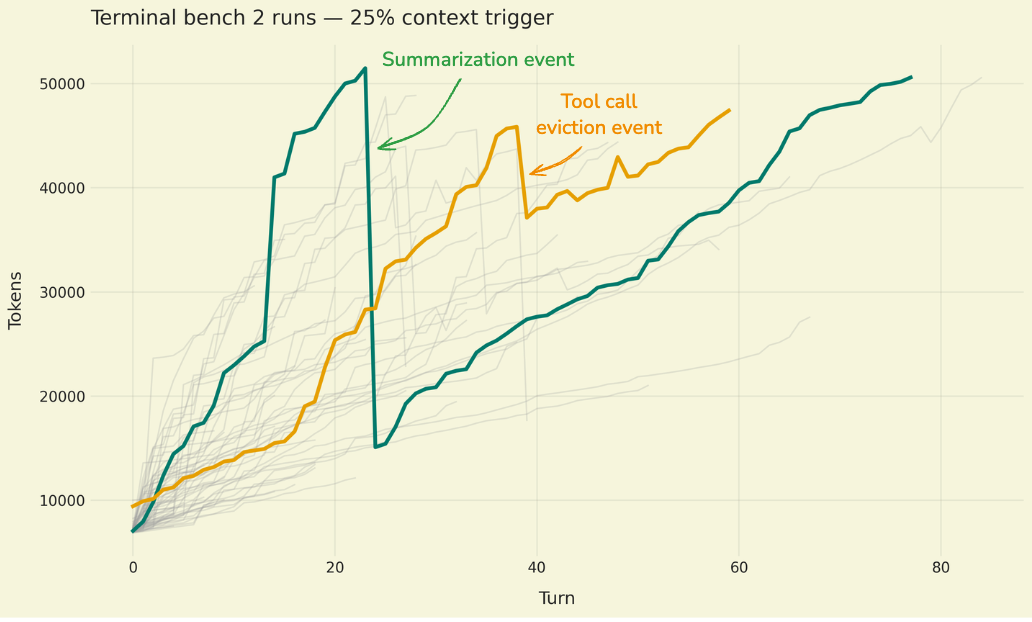

TLDR: Our coding agent went from Top 30 to Top 5 on Terminal Bench 2.0. We only changed the harness. Here’s our approach to harness engineering (teaser: self-verification & tracing help a lot). The Goal of Harness Engineering The goal of a harness is to mold the inherently spiky

Discover how Microsoft and partners are accelerating grid modernization with AI, unified data, and secure, scalable utility operations at DTECH 2026.

New Microsoft Cyber Pulse report outlines why organizations should observe, govern, and secure their AI transformation to move forward.

Google DeepMind announces new AI partnerships in India to advance scientific research, empower students with interactive Gemini-powered learning, and support national goals in agriculture and renewable energy.

You can now access the Recraft V4 image model via Vercel's AI Gateway with no other provider accounts required.

You can now access Anthropic's newest model Claude Sonnet 4.6 via Vercel's AI Gateway with no other provider accounts required.

Claude Sonnet 4.6 is now available in Windsurf with limited-time promotional pricing for self serve users: 2x credits without thinking and 3x credits with thinking.

The release of Anthropic Claude Sonnet 4.5 in the AWS GovCloud (US) Region introduces a straightforward on-ramp for AI-assisted development for workloads with regulatory compliance requirements. In this post, we explore how to combine Claude Sonnet 4.5 on Amazon Bedrock in AWS GovCloud (US) with Claude Code, an agentic coding assistant released by Anthropic. This […]

You can now access Alibaba's latest model, Qwen 3.5 Plus, via Vercel's AI Gateway with no other provider accounts required.

Learn how Mastra is optimized for modern AI systems, and how to implement a similar approach.

Today, we are announcing three new capabilities that address these requirements: proxy configuration, browser profiles, and browser extensions. Together, these features give you fine-grained control over how your AI agents interact with the web. This post will walk through each capability with configuration examples and practical use cases to help you get started.

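This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, with particularly strengthened features based on natural language processing. Specifically, when a developer gives instructions in natural language, the AI can generate code from them. The tool is designed to integrate easily into existing development environments, so users can get started without any special setup. This greatly improves development efficiency and also helps reduce errors. Another feature is that, thanks to the AI's ability to learn, accuracy improves the more the tool is used.
• Introduces a new development tool built on AI technology
• Generates code from natural-language instructions
• Easy integration into existing development environments
• Higher development efficiency and fewer errors
• Accuracy that improves through the AI's ability to learn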

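This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code and is especially useful for detecting errors and optimizing code. Specifically, the AI analyzes code in real time and suggests improvements. The user interface is intuitive and easy to use, and the tool is simple to adopt. Compatibility with other development environments has also been considered, so it can be used on a wide range of platforms. Developers can expect higher productivity as a result.
• Introduces a new development tool built on AI technology
• Analyzes code in real time and suggests improvements
• Intuitive, easy-to-use user interface
• Simple adoption process
• Compatibility with a wide range of platforms
• Expected to improve productivity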

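This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, with particularly strengthened features based on natural language processing. Specifically, when a developer gives instructions in natural language, the AI can generate code from them. The tool is designed to integrate easily into existing development environments, so users can get started without any special setup. AI-based code generation is also expected to greatly improve development efficiency.
• Introduces a new development tool built on AI technology
• Generates code from natural-language instructions
• Easy integration into existing development environments
• Expected to improve development efficiency
• Strengthened features based on natural language processing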

Every time LLMs get better, the same question comes back: "Do you still need an agent framework?" It's a fair question. The best way to build agents changes as the models get more performant and evolve, but fundamentally, the agent is a system around the model, so they will not

In this post, we show how to create an AI-powered recruitment system using Amazon Bedrock, Amazon Bedrock Knowledge Bases, AWS Lambda, and other AWS services to enhance job description creation, candidate communication, and interview preparation while maintaining human oversight.

In this post, we provide you with a comprehensive approach to achieve this. First, we introduce a context message strategy that maintains continuous communication between servers and clients during extended operations. Next, we develop an asynchronous task management framework that allows your AI agents to initiate long-running processes without blocking other operations. Finally, we demonstrate how to bring these strategies together with Amazon Bedrock AgentCore and Strands Agents to build production-ready AI agents that can handle complex, time-intensive operations reliably.
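The asynchronous task management idea can be sketched with the standard library alone: start a long-running job, hand back an ID immediately, and let the caller poll or await the result later. This is only an illustration under assumed names (`TaskRegistry`, `slow_report`); it does not use Amazon Bedrock AgentCore or Strands Agents APIs.

```python
import asyncio
import uuid


class TaskRegistry:
    """Tracks long-running coroutines so a caller can start work and poll later."""

    def __init__(self):
        self._tasks = {}

    def start(self, coro):
        """Schedule the coroutine and return an ID immediately, without awaiting it."""
        task_id = uuid.uuid4().hex
        self._tasks[task_id] = asyncio.create_task(coro)
        return task_id

    def status(self, task_id):
        task = self._tasks[task_id]
        if not task.done():
            return "running"
        if task.cancelled():
            return "cancelled"
        return "failed" if task.exception() else "succeeded"

    async def result(self, task_id):
        return await self._tasks[task_id]


async def slow_report(seconds):
    """Hypothetical long-running operation."""
    await asyncio.sleep(seconds)
    return "report ready"


async def demo():
    registry = TaskRegistry()
    task_id = registry.start(slow_report(0.05))
    first = registry.status(task_id)  # the caller is not blocked here
    value = await registry.result(task_id)
    return first, registry.status(task_id), value


outcome = asyncio.run(demo())
```

An agent tool built this way could return the task ID to the model right away and expose `status` as a second tool, so other operations continue while the job runs.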

Mexico-based Cemex is rolling out an AI-powered financial agent that is transforming how top executives make better informed decisions.

Interrupt - The Agent Conference by LangChain - is where builders come to learn what's actually working in production. This year, we're bringing together more than 1,000 developers, product leaders, researchers, and founders to share what's coming next for agents—and how to build it. Join us May 13-14

Learn about our AI-first, end-to-end, security platform that helps you protect every layer of the AI stack and secure with agentic AI.

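Gemini 3 Deep Think is an AI system aimed at advancing science, research, and engineering. It offers new approaches to solving complex problems through data analysis and simulation. In particular, it aims to give researchers and engineers fast, accurate information on the challenges they face. Gemini 3 is designed so that users can efficiently retrieve the information they need, and it is expected to accelerate scientific discovery. The system also integrates with a variety of data sources and supports real-time analysis.
• Gemini 3 Deep Think is an AI system aimed at advancing science, research, and engineering.
• It offers new approaches to solving complex problems through data analysis and simulation.
• It aims to give researchers and engineers fast, accurate information on the challenges they face.
• It is designed so that users can efficiently retrieve the information they need.
• It is expected to accelerate scientific discovery.
• It integrates with a variety of data sources and supports real-time analysis.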

We’re releasing a major upgrade to Gemini 3 Deep Think, our specialized reasoning mode.

Find out how Pantone built the Palette Generator using Azure Cosmos DB with multiagent architecture to respond to users dynamically.

You can now access MiniMax M2.5 through Vercel's AI Gateway with no other provider accounts required.

OpenAI's GPT-5.3-Codex-Spark, an ultra-fast model optimized for real-time coding, is now available in Windsurf's Arena Mode Fast and Hybrid battle groups.

Discover how agentic cloud operations and Azure Copilot bring intelligence and continuous optimization to modern cloud environments. Learn more.

Today we’re excited to announce that the NVIDIA Nemotron 3 Nano 30B model with 3B active parameters is now generally available in the Amazon SageMaker JumpStart model catalog. You can accelerate innovation and deliver tangible business value with Nemotron 3 Nano on Amazon Web Services (AWS) without having to manage model deployment complexities. You can power your generative AI applications with Nemotron capabilities using the managed deployment capabilities offered by SageMaker JumpStart.

This post shows you how to implement robust error handling strategies that can help improve application reliability and user experience when using Amazon Bedrock. We dive deep into strategies for keeping application performance optimized when these errors occur. Whether your AI application is fairly new or mature, this post offers practical guidelines for handling these errors.
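One common error handling strategy for throttling-style failures is retrying with exponential backoff and jitter. The sketch below shows the pattern in isolation; `ThrottledError` and `flaky` are hypothetical stand-ins, and no Amazon Bedrock calls are made.

```python
import random
import time


class ThrottledError(Exception):
    """Stand-in for a retryable error such as throttling (hypothetical)."""


def call_with_backoff(fn, max_attempts=5, base_delay=0.01, max_delay=1.0, sleep=time.sleep):
    """Call fn, retrying retryable errors with capped exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            # Full jitter: wait a random time up to the capped exponential delay.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)


calls = {"count": 0}


def flaky():
    """Fails twice, then succeeds, to exercise the retry path."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ThrottledError("rate exceeded")
    return "ok"


result = call_with_backoff(flaky)
```

Only errors that are safe to retry should be caught this way; validation or access errors are better surfaced immediately, since retrying them cannot succeed.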

This post shows you how to implement intelligent notification filtering using Amazon Bedrock and its generative AI capabilities. You'll learn model selection strategies, cost optimization techniques, and architectural patterns for deploying generative AI at IoT scale, based on Swann Communications' deployment across millions of devices.

LinqAlpha is a Boston-based multi-agent AI system built specifically for institutional investors. The system supports and streamlines agentic workflows across company screening, primer generation, stock price catalyst mapping, and now, pressure-testing investment ideas through a new AI agent called Devil’s Advocate. In this post, we share how LinqAlpha uses Amazon Bedrock to build and scale Devil’s Advocate.

You can now access Z.AI's latest model, GLM 5, via Vercel's AI Gateway with no other provider accounts required.

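This article introduces new algorithms for job scheduling under fluctuating machine availability. In cloud infrastructure, resources are not static: they change constantly because of hardware failures, maintenance, power limits, and similar factors. In particular, because high-priority tasks claim resources first, low-priority batch jobs are left with a fluctuating "residual" capacity. Non-preemptive jobs cannot be interrupted, so the scheduler must decide whether to start a long job now or wait for a safer window. The research provides constant-factor approximation algorithms for maximizing throughput under time-varying capacity and lays a theoretical foundation for building robust schedulers in fluctuating cloud environments. It examines results in both the offline and online settings.
• Proposes job scheduling algorithms that handle fluctuating machine availability
• Accounts for resources that are never static and change constantly
• Non-preemptive jobs cannot be interrupted, so the scheduler has to weigh risk
• Provides constant-factor approximation algorithms for maximizing throughput
• Examines the scheduling problem in both offline and online settings
• In the offline setting, simple strategies perform surprisingly well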

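This article describes a new development tool built on the latest AI technology. With this tool, AI provides real-time support as developers write code, enabling efficient coding. Specifically, it includes features in which the AI suggests code and detects errors. The user interface is intuitive and easy to use, designed so that developers can start using it right away. The tool is also highly compatible with existing development environments and easy to adopt. Ultimately, developers will be able to build high-quality software more quickly.
• Introduces a new development tool built on AI technology
• Real-time code suggestions and error detection
• Intuitive, easy-to-use user interface
• High compatibility with existing development environments
• Faster development of high-quality software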

Observational memory async buffering (default-on) with new structured streaming events, workspace mounts with CompositeFilesystem for unified multi-provider file access

In this Q&A, Michelle introduces M12 and considers what kinds of AI-powered startup solutions will drive the next wave of AI innovation.

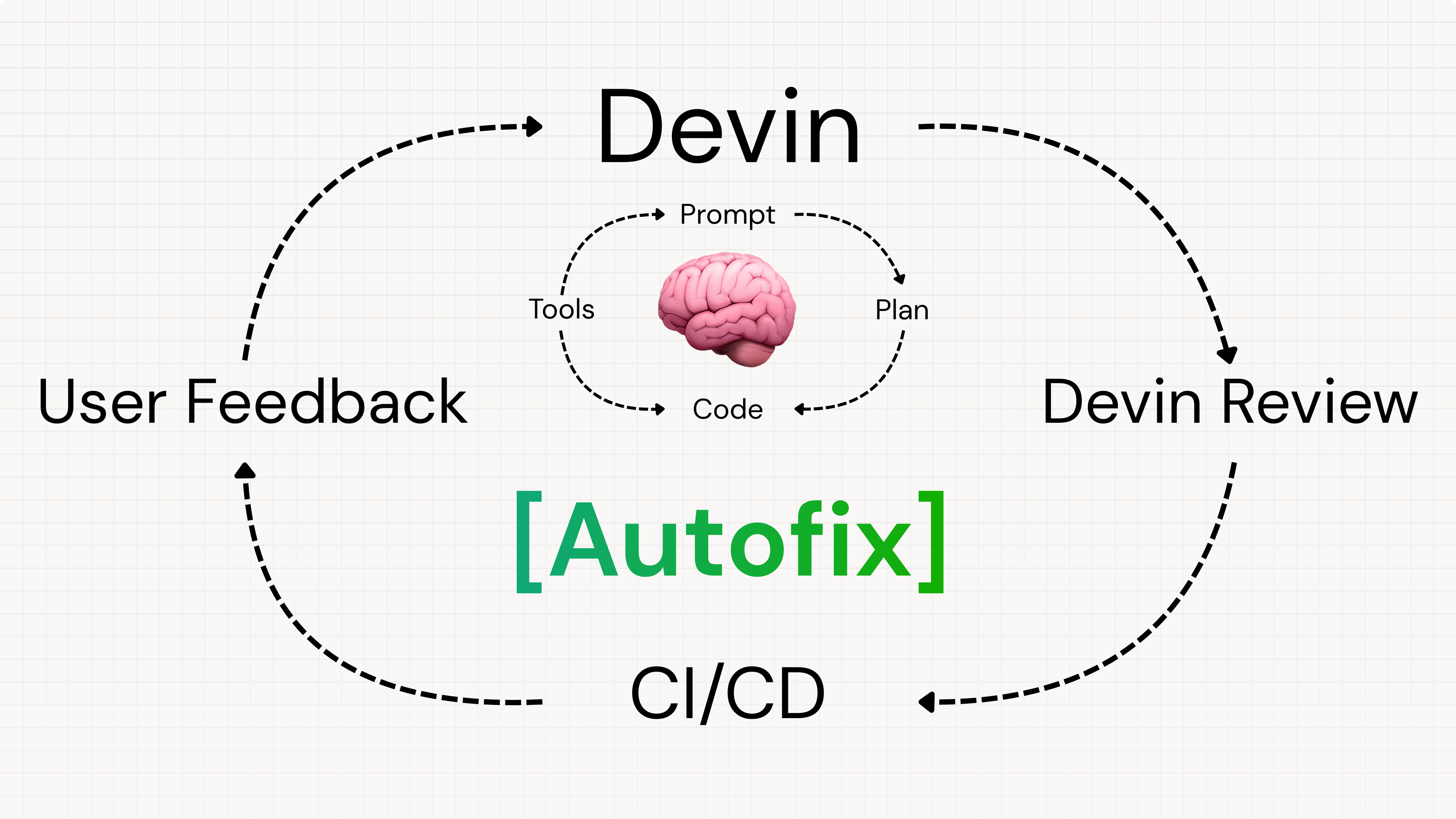

We built a feature that massively increased our internal token spend on Devin. But our PRs are now much more free of bugs and we can't go back.

In this post, we discuss how Amazon Nova in Amazon Bedrock can be used to implement an AI-powered image recognition solution that automates the detection and validation of module components, significantly reducing manual verification efforts and improving accuracy.

Iberdrola, one of the world’s largest utility companies, has embraced cutting-edge AI technology to revolutionize its IT operations in ServiceNow. Through its partnership with AWS, Iberdrola implemented different agentic architectures using Amazon Bedrock AgentCore, targeting three key areas: optimizing change request validation in the draft phase, enriching incident management with contextual intelligence, and simplifying change model selection using conversational AI. These innovations reduce bottlenecks, help teams accelerate ticket resolution, and deliver consistent and high-quality data handling throughout the organization.

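DialogLab is an open-source prototyping framework for authoring, simulating, and testing dynamic human-AI group conversations. It separates a conversation's social setup (participants, roles, subgroups) from its temporal progression and offers a three-stage workflow (author, test, verify) that simplifies complex dynamics. DialogLab provides a unified interface for defining agent personas, managing turn-taking rules, and orchestrating transitions between scripted narrative and improvisation. According to the evaluation, DialogLab efficiently supports diverse multi-party designs and makes it possible to design realistic, adaptable conversations.
• DialogLab is a framework for authoring, simulating, and testing dynamic human-AI group conversations.
• Separating a conversation's social setup from its temporal progression simplifies complex dynamics.
• A three-stage workflow (author, test, verify) supports efficient iteration.
• It manages agent personas, turn-taking rules, and transitions between improvised and scripted segments.
• The evaluation shows that it enables realistic, adaptable, and diverse multi-party designs.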

Amazon Nova Sonic delivers real-time, human-like voice conversations through the bidirectional streaming interface. In this post, you learn how Amazon Nova Sonic can solve some of the challenges faced by cascaded approaches, simplify building voice AI agents, and provide natural conversational capabilities. We also provide guidance on when to choose each approach to help you make informed decisions for your voice AI projects.

Thank you to Nuno Campos from Witan Labs, Tomas Beran and Mikayel Harutyunyan from E2B, Jonathan Wall from Runloop, and Ben Guo from Zo Computer for their review and comments. TL;DR: * More and more agents need a workspace: a computer where they can run code, install packages, and access

Explore Microsoft’s work with HTS technology to enhance power density, support high‑performance workloads, and reduce community impact. Learn more.

Safer Internet Day 2026 helps educators and families support AI awareness, digital citizenship, and safer online habits.

Runtime logs are now available via Vercel's MCP server, enabling AI agents to analyze performance, fix errors, and more.

Today, we're thrilled to announce that LangSmith, the agent engineering platform from LangChain, is available in Google Cloud Marketplace. Google Cloud customers can now procure LangSmith through their existing Google Cloud accounts, enabling seamless billing, simplified procurement, and the ability to draw down on existing Google Cloud commitments. LangSmith is

This blog post dives deeper into the implementation architecture for the Automated Reasoning checks rewriting chatbot.

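This article explains how Perch 2.0, Google DeepMind's bioacoustics foundation model, was trained on audio from birds and other terrestrial animals yet also performs well on marine acoustics tasks. Notably, Perch 2.0 works effectively for whale vocalization classification even though its training data contains no underwater audio. Google collaborates with external scientists on whale monitoring and conservation and released a multi-species whale model in 2024. The article presents an approach that uses transfer learning to build custom classifiers for newly discovered sounds and new data, which substantially reduces the compute and experimentation required. Ultimately, Perch 2.0 is expected to become an important tool for expanding insight into marine ecosystems.
• Perch 2.0 was trained on bird audio and can be applied to marine acoustics tasks.
• Transfer learning is used to build custom classifiers for newly discovered sounds and new data.
• Transfer learning reduces the compute and experimentation required.
• Perch 2.0 performs well on whale vocalization classification despite having no underwater audio in its training data.
• Google collaborates with external scientists on whale monitoring and conservation.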

Learn how agentic commerce reshapes discovery, decision‑making, and influence, helping CMOs compete in AI‑driven retail. Read more.

Learn how insurers are using agentic AI to scale adoption, improve efficiency, and modernize claims and operations.

In this post, we show how this integrated approach transforms enterprise LLM fine-tuning from a complex, resource-intensive challenge into a streamlined, scalable solution for achieving better model performance in domain-specific applications.

Working with the Generative AI Innovation Center, New Relic NOVA (New Relic Omnipresence Virtual Assistant) evolved from a knowledge assistant into a comprehensive productivity engine. We explore the technical architecture, development journey, and key lessons learned in building an enterprise-grade AI solution that delivers measurable productivity gains at scale.

In this post, you will learn how to deploy Fullstack AgentCore Solution Template (FAST) to your Amazon Web Services (AWS) account, understand its architecture, and see how to extend it for your requirements. You will learn how to build your own agent while FAST handles authentication, infrastructure as code (IaC), deployment pipelines, and service integration.

Gemini Deep Think is accelerating discovery in maths, physics, and computer science by acting as a powerful scientific companion for researchers.

This post walks through how agent-to-agent collaboration on Amazon Bedrock works in practice, using Amazon Nova 2 Lite for planning and Amazon Nova Act for browser interaction, to turn a fragile single-agent setup into a predictable multi-agent system.

Learn how to build a Frontier organization by taking a human-centered approach to AI transformation with insights from Judson Althoff, Microsoft CEO of Commercial Business.

Why competitive advantage in AI comes from the platform you deploy agents on, not the agents themselves.

Learn how we built an AI Engine Optimization system to track coding agents using Vercel Sandbox, AI Gateway, and Workflows for isolated execution.

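This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI for automatic code generation and completion. Specifically, it suggests appropriate code based on the comments or code fragments a developer enters. The tool supports many programming languages and is recommended in particular for JavaScript and Python. Developers can greatly improve their efficiency, with fewer errors and shorter development times expected.
• Introduces a new development tool built on AI technology
• Provides automatic code generation and completion
• Suggests code based on the comments a developer enters
• Supports many programming languages, with JavaScript and Python especially recommended
• Expected to improve efficiency, reduce errors, and shorten development time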

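This article describes a new development tool built on the latest AI technology. The tool assists developers as they write code, using generative AI to generate and fix code automatically. Specifically, when a developer describes the intended functionality in natural language, the tool generates code from that description. It can also suggest improvements to existing code. The expected result is higher development efficiency and fewer errors. In addition, the tool supports many programming languages and can be used across a wide range of development environments.
• Introduces an AI-powered automatic code generation tool
• Code generation from natural-language input
• Improvement suggestions for existing code
• Expected gains in development efficiency and fewer errors
• Supports many programming languages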

A human-inspired memory system that scores ~95% on LongMemEval — with a completely stable context window.

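This article explains how five SSD kernels were fused into a single Triton kernel to optimize the State-Space Dual (SSD) module of Mamba-2. The optimization yields speedups of 1.50x to 2.51x on NVIDIA A100 and H100 GPUs. Fusing the kernels reduces launch overhead, avoids redundant memory operations, and makes the kernel faster at every input size. The article also covers how the SSD kernels were fused, the remaining bottlenecks, benchmark results, and plans to release the kernel as open source. Mamba-2 is a model that scales to long sequences and is especially attractive for long contexts of 128K tokens or more.
• The SSD module of Mamba-2 was optimized for higher speed.
• Five SSD kernels were fused into a single Triton kernel.
• Speedups of 1.50x to 2.51x were achieved on NVIDIA A100 and H100 GPUs.
• Kernel fusion reduces launch overhead and makes memory operations more efficient.
• Mamba-2 scales to long sequences, particularly contexts of 128K tokens or more.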

Today, we're announcing structured outputs on Amazon Bedrock—a capability that fundamentally transforms how you can obtain validated JSON responses from foundation models through constrained decoding for schema compliance. In this post, we explore the challenges of traditional JSON generation and how structured outputs solves them. We cover the two core mechanisms—JSON Schema output format and strict tool use—along with implementation details, best practices, and practical code examples.
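To make "schema compliance" concrete, here is a minimal sketch of the kind of client-side check an application might run when a model's JSON is not guaranteed to match a schema. It uses only the Python standard library and does not call Amazon Bedrock. The schema, the `check` helper, and the sample responses are hypothetical and are not taken from the post, and the check covers only a small subset of JSON Schema (`required` and simple `type` keywords).

```python
import json

# Hypothetical schema for illustration only (not from the post).
SCHEMA = {
    "type": "object",
    "required": ["title", "year"],
    "properties": {
        "title": {"type": "string"},
        "year": {"type": "integer"},
    },
}

# Map a subset of JSON Schema type names to Python types.
TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def check(raw_text, schema):
    """Return a list of problems; an empty list means the text conforms."""
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]

    problems = []
    for key in schema.get("required", []):
        if key not in data:
            problems.append(f"missing required field: {key}")

    for key, spec in schema.get("properties", {}).items():
        if key not in data:
            continue
        value = data[key]
        expected = TYPES[spec["type"]]
        # bool is a subclass of int in Python, so exclude it for numeric types.
        bool_as_number = isinstance(value, bool) and spec["type"] in ("integer", "number")
        if bool_as_number or not isinstance(value, expected):
            problems.append(f"field {key!r} should be {spec['type']}")
    return problems


# A response with the wrong type for "year" is reported rather than silently accepted.
print(check('{"title": "Example", "year": "2026"}', SCHEMA))
```

With constrained decoding, as described in the post, the aim is for responses to conform to the schema at generation time, so a check like this becomes a safety net rather than the primary defense.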

In this post, we demonstrate how to use the CLI and the SDK to create and manage SageMaker HyperPod clusters in your AWS account. We walk through a practical example and dive deeper into the user workflow and parameter choices.

In this post, we explore the Amazon Nova rubric-based judge feature: what a rubric-based judge is, how the judge is trained, what metrics to consider, and how to calibrate the judge. We share notebook code for the Amazon Nova rubric-based LLM-as-a-judge methodology to evaluate and compare the outputs of two different LLMs using SageMaker training jobs.

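This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI for automatic code generation and completion. Specifically, it understands the intent of the code the developer enters and suggests appropriate code snippets. The article emphasizes that the user interface is intuitive and easy to use, with a gentle learning curve. It also cites easy integration into existing development environments, which lowers the barrier to adoption, as a benefit. • Introduces an AI-powered automatic code generation tool • Understands developer intent and suggests appropriate code snippets • Intuitive user interface • Easy integration into existing development environments • Gentle learning curve and easy to use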

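This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI for automatic code generation and completion. Specifically, the AI suggests appropriate code based on comments or function names the developer enters. The tool supports many programming languages, and JavaScript and Python are particularly recommended. As a result, developers can expect substantially higher coding efficiency, fewer errors, and shorter development time. • Introduces an AI-powered automatic code generation tool • Suggests code based on information entered by the developer • Supports many languages, including JavaScript and Python • Expected to improve coding efficiency • Benefits include fewer errors and shorter development time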

Associa collaborated with the AWS Generative AI Innovation Center to build a generative AI-powered document classification system aligning with Associa’s long-term vision of using generative AI to achieve operational efficiencies in document management. The solution automatically categorizes incoming documents with high accuracy, processes documents efficiently, and provides substantial cost savings while maintaining operational excellence. The document classification system, developed using the Generative AI Intelligent Document Processing (GenAI IDP) Accelerator, is designed to integrate seamlessly into existing workflows. It revolutionizes how employees interact with document management systems by reducing the time spent on manual classification tasks.

In this post, you will learn how to configure and use Amazon Nova Multimodal Embeddings for media asset search systems, product discovery experiences, and document retrieval applications.

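This article describes the design of a highly efficient inference system built on PyTorch. PyTorch is widely used in state-of-the-art research, particularly in recommendation systems and ranking, where rapid model deployment is required. The system, which supports Meta's critical machine learning workloads, manages Deep Learning Recommendation Models (DLRM) and newer modeling techniques such as DHEN and HSTU. Operating models efficiently in an inference environment requires a robust pipeline that converts trained models into optimized inference models. This makes it possible to meet high-throughput and strict latency requirements. • PyTorch is the mainstream framework for recommendation systems. • The inference system is designed for high efficiency and rapid model deployment. • It manages diverse ML architectures to support Meta's machine learning workloads. • A robust pipeline is needed to convert training models into inference models. • The inference model mirrors the forward logic of the training model and enables optimization.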

Delegate complex tasks end-to-end and execute reliably with Claude Opus 4.6, now available in Microsoft Foundry.

Learn how Google's NAI framework uses AI to make technology more adaptive, inclusive and helpful for everyone.

A Blog post by ServiceNow-AI on Hugging Face

Google Cloud built an industry-first AI tool to help U.S. Ski and Snowboard athletes.

Learn more about Google’s new ad that will run during football’s Big Game on February 8.

You can now access Anthropic's latest model, Claude Opus 4.6, via Vercel's AI Gateway with no other provider accounts required.

A six-week program to help you scale your AI company offering over $6M in credits from Vercel, v0, AWS, and leading AI platforms

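This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI to generate and revise code automatically. Specifically, when a developer describes the intended functionality in natural language, the tool generates code from that description. It can also suggest improvements to existing code and is expected to improve development efficiency substantially. The tool supports many programming languages, and JavaScript and Python are particularly recommended. • Introduces an AI-powered automatic code generation tool • Generates code from natural-language input • Suggests improvements to existing code • Supports multiple languages, including JavaScript and Python • Expected to improve development efficiency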

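This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI for automatic code generation and completion. Specifically, the AI suggests appropriate code based on comments or code fragments the developer enters. The tool is designed to integrate easily into existing development environments and is particularly recommended for JavaScript and TypeScript projects. This is expected to improve development efficiency and reduce errors. • Introduces an AI-powered automatic code generation tool • Suggests code based on comments entered by the developer • Easy to integrate into JavaScript and TypeScript projects • Expected to improve development efficiency and reduce errors • Proposes a new development approach using generative AI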

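Google Research has introduced Natively Adaptive Interfaces (NAI), which embed multimodal AI tools that adapt to each user's unique needs, and is redefining universal design. The approach is developed together with people with disabilities and emphasizes building accessibility in from the start of the development process. NAI replaces static navigation with dynamic, agent-driven modules, transforming digital architecture from a passive tool into an active collaborator. Through co-design with the disability community, it also aims to put their experience and expertise at the center of the solution. Multimodal AI tools offer a promising path to building accessible interfaces; for improving document readability in particular, a central orchestrator acts as a strategic reading manager. • Introduces Natively Adaptive Interfaces (NAI) and redefines universal design • Developed together with people with disabilities, with accessibility built in from the start • Replaces static navigation with dynamic, agent-driven modules • Emphasizes co-design with the disability community and reflects their experience • Multimodal AI tools contribute to building accessible interfaces • A central orchestrator plays a role in improving document readability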

File access, command execution, and reusable skills for agents that actually get things done.

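This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI for automatic code generation and completion. Specifically, the AI suggests appropriate code based on comments or code fragments the developer enters. The tool is designed to integrate easily into existing development environments and is particularly recommended for JavaScript and TypeScript projects. This is expected to improve development efficiency and reduce errors. • Introduces an AI-powered automatic code generation tool • Suggests code based on comments entered by the developer • Easy to integrate into JavaScript and TypeScript projects • Expected to improve development efficiency and reduce errors • Proposes a new development approach using generative AI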

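This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, with particular emphasis on features based on natural language processing. Specifically, when a developer gives instructions in natural language, the AI can generate code from them. The tool is designed to integrate easily into existing development environments, so users can start using it right away without special configuration. This improves development efficiency substantially and also helps reduce errors. • Introduces a new AI-powered development tool • Generates code from natural-language instructions • Easy integration into existing development environments • Improved development efficiency • Fewer errors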

Learn how Microsoft research uncovers backdoor risks in language models and introduces a practical scanner to detect tampering and strengthen AI security.

Google AI announcements from January

Building upon our earlier work of marketing campaign image generation using Amazon Nova foundation models, in this post, we demonstrate how to enhance image generation by learning from previous marketing campaigns. We explore how to integrate Amazon Bedrock, AWS Lambda, and Amazon OpenSearch Serverless to create an advanced image generation system that uses reference campaigns to maintain brand guidelines, deliver consistent content, and enhance the effectiveness and efficiency of new campaign creation.

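This article introduces Sequential Attention, a subset selection algorithm for making AI models more efficient. Feature selection is an important problem in machine learning and deep learning, and because nonlinear feature interactions are complex, an effective way to choose features is needed. Sequential Attention integrates selection into the model's training process, maintaining accuracy while keeping training cost to a minimum. It uses an attention mechanism to select the best elements step by step, overcoming the limits of conventional one-shot selection methods. Sequential Attention is now used in real-world scenarios to optimize the structure of deep learning models. • Proposes Sequential Attention, a subset selection algorithm aimed at making AI models more efficient • Feature selection is NP-hard, and nonlinear feature interactions are particularly complex • Sequential Attention integrates selection into the training process and keeps cost to a minimum • Uses an attention mechanism to select the best elements step by step • The method is used in practice to optimize the structure of deep learning models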

A Blog post by NVIDIA on Hugging Face

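This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI to generate and revise code automatically. Specifically, when a developer describes the intended functionality in natural language, the tool generates code from that description. It can also suggest improvements to existing code. This is expected to improve development efficiency and reduce errors. The tool also supports many programming languages and can be used across a wide range of development environments. • Introduces an AI-powered automatic code generation tool • Generates code from natural-language input • Suggests improvements to existing code • Expected to improve development efficiency and reduce errors • Supports many programming languages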

Parallel's Web Search and other tools are now available on Vercel AI Gateway, AI SDK, and Marketplace. Add web search to any model with domain filtering, date constraints, and agentic mode support.

Parallel is now available on the Vercel Marketplace with a native integration for Vercel projects. Developers can add Parallel’s web tools and AI agents to their apps in minutes, including Search, Extract, Tasks, FindAll, and Monitoring.

How Stably, a 6-person team, ships AI testing agents faster with Vercel, moving from weeks to hours. Their shift highlights how Vercel's platform eliminates infrastructure anxiety, boosting autonomous testing and enabling quick enterprise growth.

Agents can pause before tool calls. Workflows can suspend at steps. But when they're working together, where's the right place for a human-in-the-loop?

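This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI to generate and revise code automatically. Specifically, when a developer describes the intended functionality in natural language, the tool generates code from that description. It can also suggest improvements to existing code. This is expected to improve development efficiency and reduce errors. The tool also supports many programming languages and can be used across a wide range of development environments. • Introduces a new development tool built on AI technology • Generates code automatically from natural-language input • Suggests improvements to existing code • Expected to improve development efficiency and reduce errors • Supports many programming languages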

BGL is a leading provider of self-managed superannuation fund (SMSF) administration solutions that help individuals manage the complex compliance and reporting of their own or a client’s retirement savings, serving over 12,700 businesses across 15 countries. In this blog post, we explore how BGL built its production-ready AI agent using Claude Agent SDK and Amazon Bedrock AgentCore.

In this post, we demonstrate how to build a secure file upload solution by integrating Google Drive with Amazon Quick Suite custom connectors using Amazon API Gateway and AWS Lambda.

This post explores nine essential best practices for building enterprise AI agents using Amazon Bedrock AgentCore. Amazon Bedrock AgentCore is an agentic platform that provides the services you need to create, deploy, and manage AI agents at scale. In this post, we cover everything from initial scoping to organizational scaling, with practical guidance that you can apply immediately.

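This article announces the launch of a nationwide randomized study, in partnership with Included Health, to evaluate conversational AI in real-world virtual care. The study aims to go beyond simulations and retrospective data and collect rigorous prospective evidence on how AI performs in clinical settings. AI systems could greatly improve access to medical expertise and give physicians more time with patients, but developing these technologies responsibly requires a rigorous, evidence-based approach. The study builds on foundational research into the use of AI for diagnostic and management reasoning, personalized health insights, and navigating health information. The new study uses a nationwide randomized controlled trial design and is conducted with participants' consent. • Evaluates conversational AI in real-world virtual care through a nationwide randomized study. • AI systems could improve access to medical expertise and give physicians more time with patients. • The study builds on foundational research into the use of AI for diagnostic and management reasoning. • It uses a randomized controlled trial design and recruits participants nationwide. • It takes a responsible approach to evaluating the safety and usefulness of AI.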

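This article describes how Burkhard Ringlein of IBM Research and the vLLM team implemented Paged Attention, a performance-critical AI kernel, using Helion, a new PyTorch-based domain-specific language. Helion has extensive autotuning capabilities to improve performance portability and aims to deliver performance beyond Triton. vLLM provides an efficient backend for LLM inference that runs on NVIDIA, AMD, and Intel GPUs as well as custom accelerators. Helion's autotuner has more freedom to change algorithmic aspects and features more advanced search algorithms. The article also details how Paged Attention is implemented in Helion and the approach to parallelization. • Helion is a new language that makes it easier to develop high-performance, portable kernels. • vLLM is widely used for LLM inference and runs on NVIDIA, AMD, and Intel GPUs. • Triton is a Python-based domain-specific language that provides JIT compilation. • Helion's autotuner has a high degree of freedom to change algorithmic aspects. • The Paged Attention implementation uses Helion's "Q block" concept.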

On November 21, 2025, Amazon SageMaker introduced a built-in data agent within Amazon SageMaker Unified Studio that transforms large-scale data analysis. In this post, we demonstrate, through a detailed case study of an epidemiologist conducting clinical cohort analysis, how SageMaker Data Agent can help reduce weeks of data preparation into days, and days of analysis development into hours—ultimately accelerating the path from clinical questions to research conclusions.

Learn how startups can use Azure credits to accelerate development with GitHub, scale with AKS, and build AI‑powered features. Read more.

A Blog post by Hugging Face on Hugging Face

TRAE now integrates with Vercel for one-click deployments and access to hundreds of AI models through a single API key. Available in both SOLO and IDE modes.

Apollo Skills teaches AI agents to write production-quality GraphQL. Covers Apollo Client, Apollo Server, Connectors, schema design, and operations. Install: npx skills add apollographql/skills. Works with Claude Code, Cursor, Copilot. Open source at github.com/apollographql/skills

A Blog post by Photoroom on Hugging Face

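This article shows how to run a full fine-tune of the Llama 3.1-8B-Instruct model on NVIDIA DGX Spark to add "reasoning" capabilities to the LLM. Synthetic data can be used to strengthen reasoning on specific topics, and the run completes within one day on DGX Spark. The article details techniques for generating synthetic thinking traces and the data preparation steps using Synthetic-Data-Kit. Finally, it explains how to set up a custom prompt for generating responses with Chain of Thought. • Runs a full fine-tune of the Llama 3.1-8B-Instruct model on NVIDIA DGX Spark • Uses synthetic data to strengthen reasoning on specific topics • The run completes within one day on DGX Spark • Introduces data preparation with Synthetic-Data-Kit • Explains how to set up a custom prompt for Chain of Thought response generation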

Scientists are working to sequence the genome of every known species on Earth.

We’re expanding Game Arena with Poker and Werewolf, while Gemini 3 Pro and Flash top our chess leaderboard.

Financial services leaders are managing cloud concentration risk and meeting regulatory exit planning expectations while enabling AI-powered innovation.

Explore first-party and partner agents that streamline inventory to deliver—from procurement to customer satisfaction—with Dynamics 365.

In this post, we illustrate how Clarus Care, a healthcare contact center solutions provider, worked with the AWS Generative AI Innovation Center (GenAIIC) team to develop a generative AI-powered contact center prototype. This solution enables conversational interaction and multi-intent resolution through an automated voicebot and chat interface. It also incorporates a scalable service model to support growth, human transfer capabilities (when requested or for urgent cases), and an analytics pipeline for performance insights.

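This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, using generative AI for automatic code generation and completion. Specifically, the AI suggests appropriate code based on comments or function names the developer enters. The tool is designed to integrate easily into existing development environments and is particularly recommended for JavaScript and TypeScript projects. This is expected to improve development efficiency and reduce errors. • Introduces an AI-powered automatic code generation tool • Suggests code based on information entered by the developer • Easy to integrate into JavaScript and TypeScript projects • Expected to improve development efficiency and reduce errors • Proposes a new development approach using generative AI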

This post explores how you can use Amazon S3-based templates to simplify ModelOps workflows, walks through the key benefits compared to using Service Catalog approaches, and demonstrates how to create a custom ModelOps solution that integrates with GitHub and GitHub Actions, giving your team one-click provisioning of a fully functional ML environment.

In this post, we walk through how global cross-Region inference routes requests and where your data resides, then show you how to configure the required AWS Identity and Access Management (IAM) permissions and invoke Claude 4.5 models using the global inference profile Amazon Resource Name (ARN). We also cover how to request quota increases for your workload. By the end, you'll have a working implementation of global cross-Region inference in af-south-1.

AssistLoop is now available in the Vercel Marketplace, making it easy to add AI-powered customer support to Next.js apps deployed on Vercel.

Cubic joins the Vercel Agents Marketplace, offering teams an AI code reviewer with full codebase context, unified billing, and automated fixes.

AI agents need secure, isolated environments that spin up instantly. Vercel Sandbox is now generally available with filesystem snapshots, container support, and production reliability.

Arena Mode brings side-by-side model comparison directly into your IDE, plus Plan Mode and Megaplan for smarter task planning.

Learn how the Chrome Tooling team optimized token usage for AI assistance, and discover techniques for more efficient data usage with LLMs.

Read about the latest product updates, events, and content from the LangChain team

We’re excited to join Cursor, Cloudflare, Vercel, git-ai, OpenCode and others in support of [Agent Trace](https://agent-trace.dev/). As described in the spec, Agent Trace is an open, vendor-neutral spec for recording AI contributions alongside human authorship in version-controlled codebases.

Empower the web community and invite more to build cross-platform apps

The agent-based approach we present is applicable to any type of enterprise content, from product documentation and knowledge bases to marketing materials and technical specifications. To demonstrate these concepts in action, we walk through a practical example of reviewing blog content for technical accuracy. These patterns and techniques can be directly adapted to various content review needs by adjusting the agent configurations, tools, and verification sources.

A Blog post by NVIDIA on Hugging Face

Google AI Ultra subscribers in the U.S. can now try out Project Genie.

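This article describes accelerating machine learning inference on mobile devices using ExecuTorch and Arm SME2. In particular, SqueezeSAM, an interactive image segmentation model, powers Instagram's cutout feature, which lets users easily cut out objects in an image. SME2 is an advanced CPU instruction set introduced in the Armv9 architecture that accelerates matrix-oriented compute workloads. According to the experimental results, SME2 substantially improves SqueezeSAM's inference latency, with a 1.83x speedup for the INT8 model and a 3.9x speedup for the FP16 model. This lets interactive features in mobile apps run faster and gives developers flexible choices depending on precision and workflow. • Accelerates machine learning inference on mobile devices using ExecuTorch and Arm SME2. • The SqueezeSAM model powers Instagram's cutout feature. • SME2 is a CPU instruction set introduced in the Armv9 architecture that accelerates matrix computation. • Inference latency improves by 1.83x for the INT8 model and 3.9x for the FP16 model. • Interactive features in mobile apps can run faster. • Developers get flexible choices depending on precision.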
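The speedup figures in this summary (1.83x for INT8, 3.9x for FP16) can also be read as latency reductions. The sketch below does that arithmetic under one assumption that the summary does not state explicitly: that "N times faster" means old latency divided by new latency. `latency_reduction` is a hypothetical helper written for this illustration.

```python
def latency_reduction(speedup):
    """Fraction of latency removed, assuming speedup = old_latency / new_latency."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1.0 - 1.0 / speedup


# Speedup factors quoted in the summary above.
for name, factor in (("INT8", 1.83), ("FP16", 3.9)):
    print(f"{name}: {factor}x speedup -> {latency_reduction(factor):.1%} lower latency")
```

Under that reading, the INT8 result corresponds to roughly 45% less latency and the FP16 result to roughly 74% less.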

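This article describes a new development tool built on the latest AI technology. The tool gives developers AI assistance while they write code, with particular emphasis on features based on natural language processing. Specifically, when a developer gives instructions in natural language, the AI can generate code from them. The article also emphasizes that the tool integrates into existing development environments, which makes adoption easy. AI-driven code generation is expected to improve development efficiency substantially. • Introduces a new development tool built on AI technology • Generates code from natural-language instructions • Easy to integrate into existing development environments • Expected to improve development efficiency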

Cognizant has partnered with Cognition to deploy Devin and Windsurf across its engineering teams and customer base.

By Chester Curme and Mason Daugherty. As the addressable task length of AI agents continues to grow, effective context management becomes critical to prevent context rot and to manage LLMs’ finite memory constraints. The Deep Agents SDK is LangChain’s open source, batteries-included agent harness. It provides an easy path

Discover how aligning AI transformation with sustainability can boost efficiency, resilience, and long‑term competitiveness at Davos 2026. Learn more.

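This article derives the first quantitative principles for scaling AI agent systems and, through a controlled evaluation of 180 agent configurations, shows that multi-agent coordination substantially improves performance on parallelizable tasks but degrades it on sequential tasks. It also introduces a predictive model that identifies the optimal architecture for 87% of unseen tasks. Agents are systems capable of reasoning, planning, and acting, and the industry is shifting from single-shot question answering to sustained multi-step interactions. Traditional static benchmarks measure a model's knowledge, whereas agentic tasks require sustained interaction with an external environment, information gathering under partial observability, and adaptive refinement of strategy based on environmental feedback. • Derived quantitative principles for scaling AI agent systems. • Multi-agent coordination improves performance on parallelizable tasks but degrades it on sequential tasks. • Agentic tasks require sustained interaction, handling of partial observability, and strategy adaptation based on environmental feedback. • Evaluated five agent architectures (single-agent, independent, centralized, decentralized, hybrid). • Introduced a predictive model that identifies the optimal architecture for 87% of unseen tasks.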