You can now access OpenAI's newest model, GPT 5.3 Codex, via Vercel's AI Gateway with no other provider accounts required.

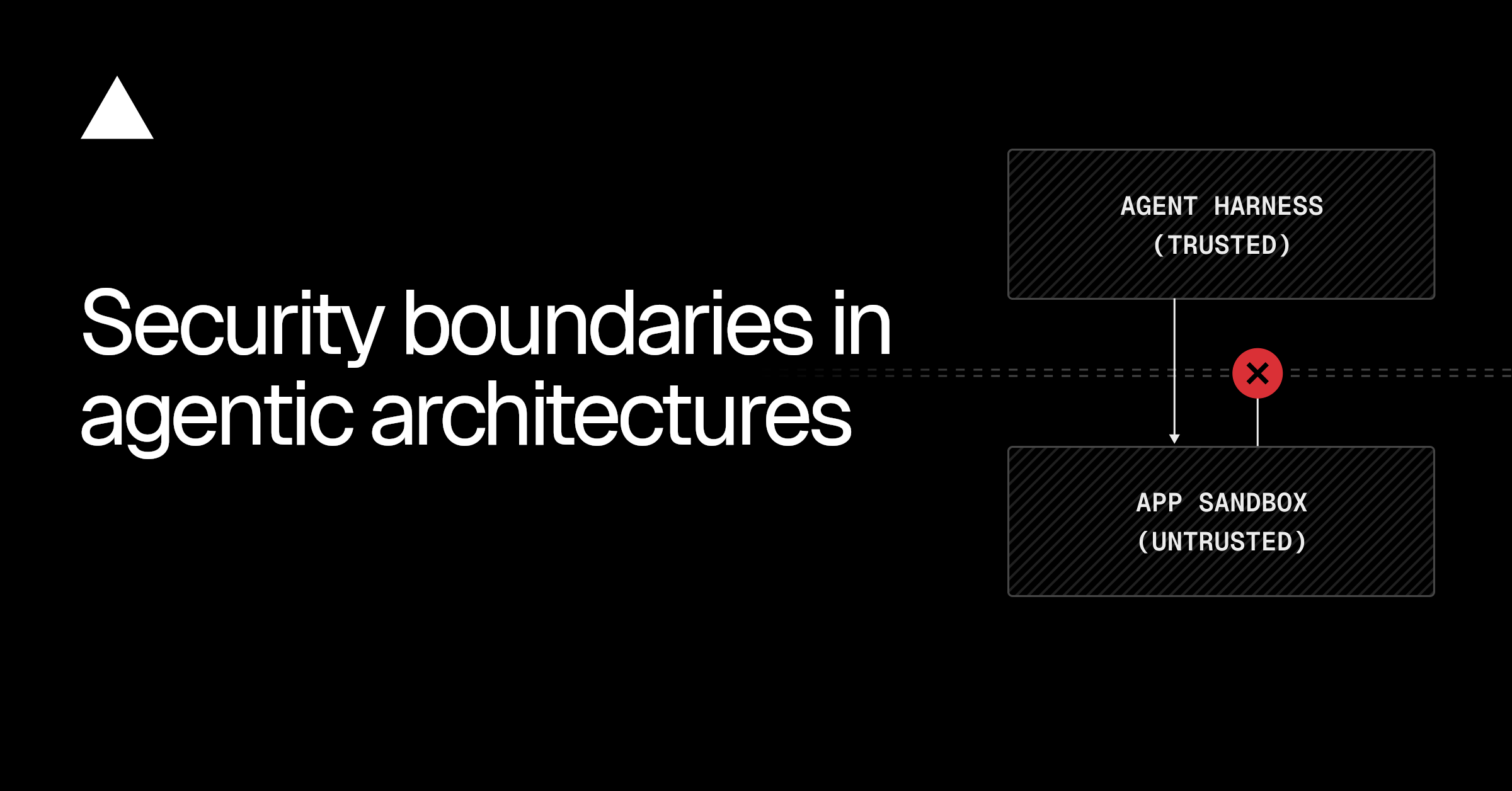

A framework for drawing security boundaries in agentic architectures. Most agents run with zero isolation between the agent and the code it generates. Learn where to draw the boundaries, from secret injection to full application sandboxing.

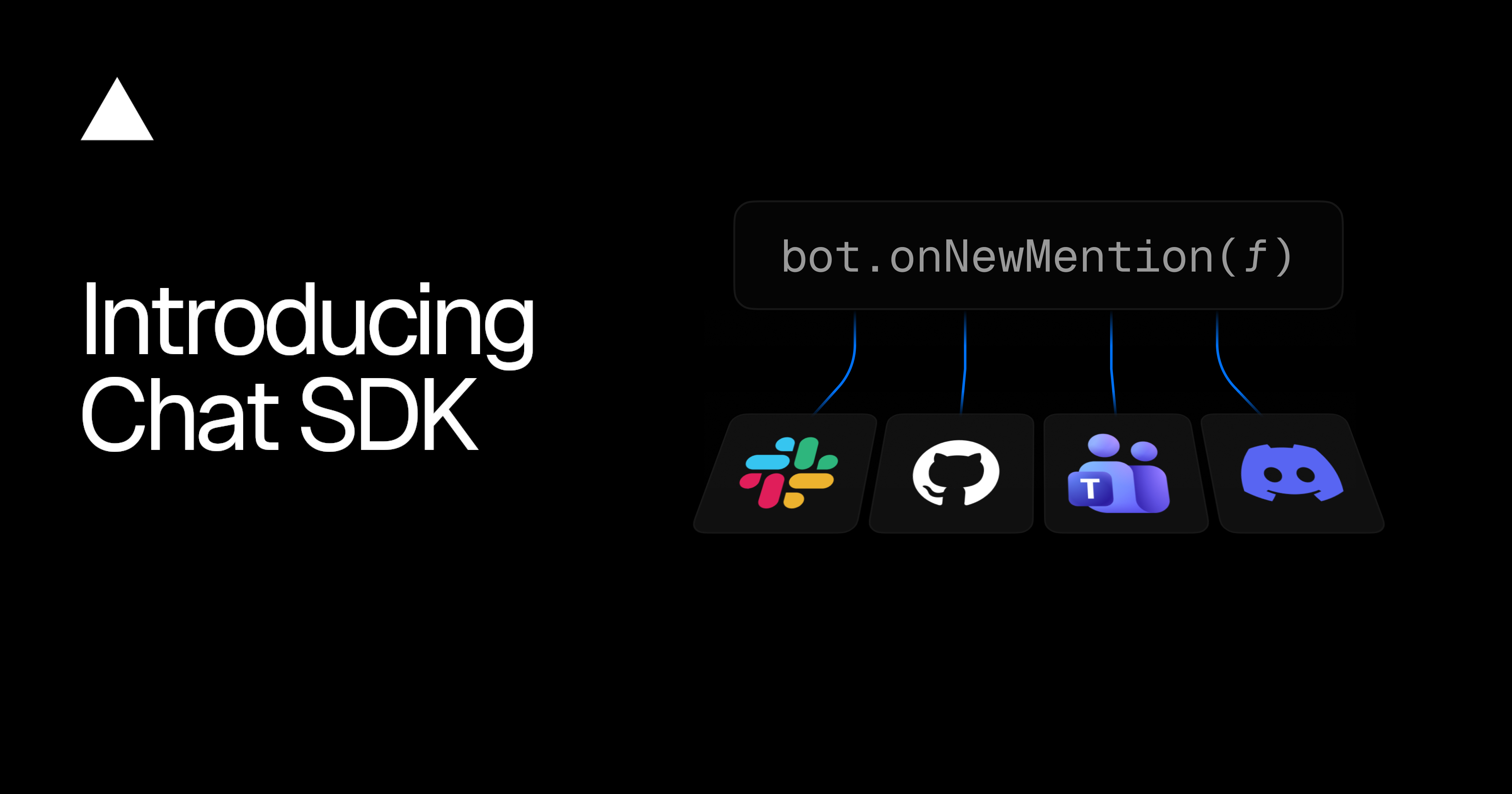

Chat SDK is now open source and available in public beta. It's a TypeScript library for building chat bots that work across Slack, Microsoft Teams, Google Chat, Discord, GitHub, and Linear — from a single codebase.

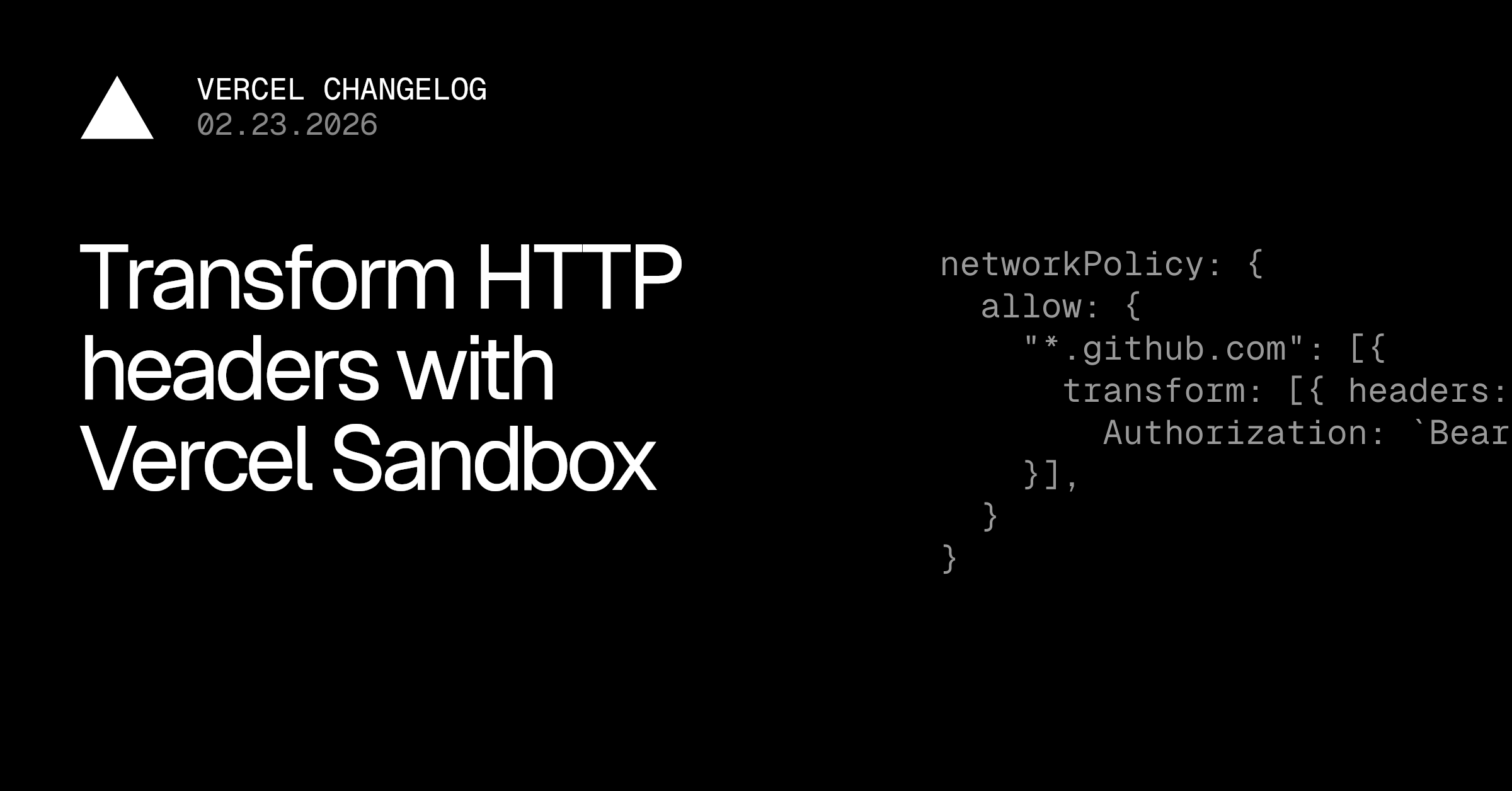

Sandbox network policies now support HTTP header injection to prevent untrusted code from exfiltrating secrets.

Support for the deprecated "now.json" configuration file will be removed on March 31, 2026. Move to "vercel.json" instead.

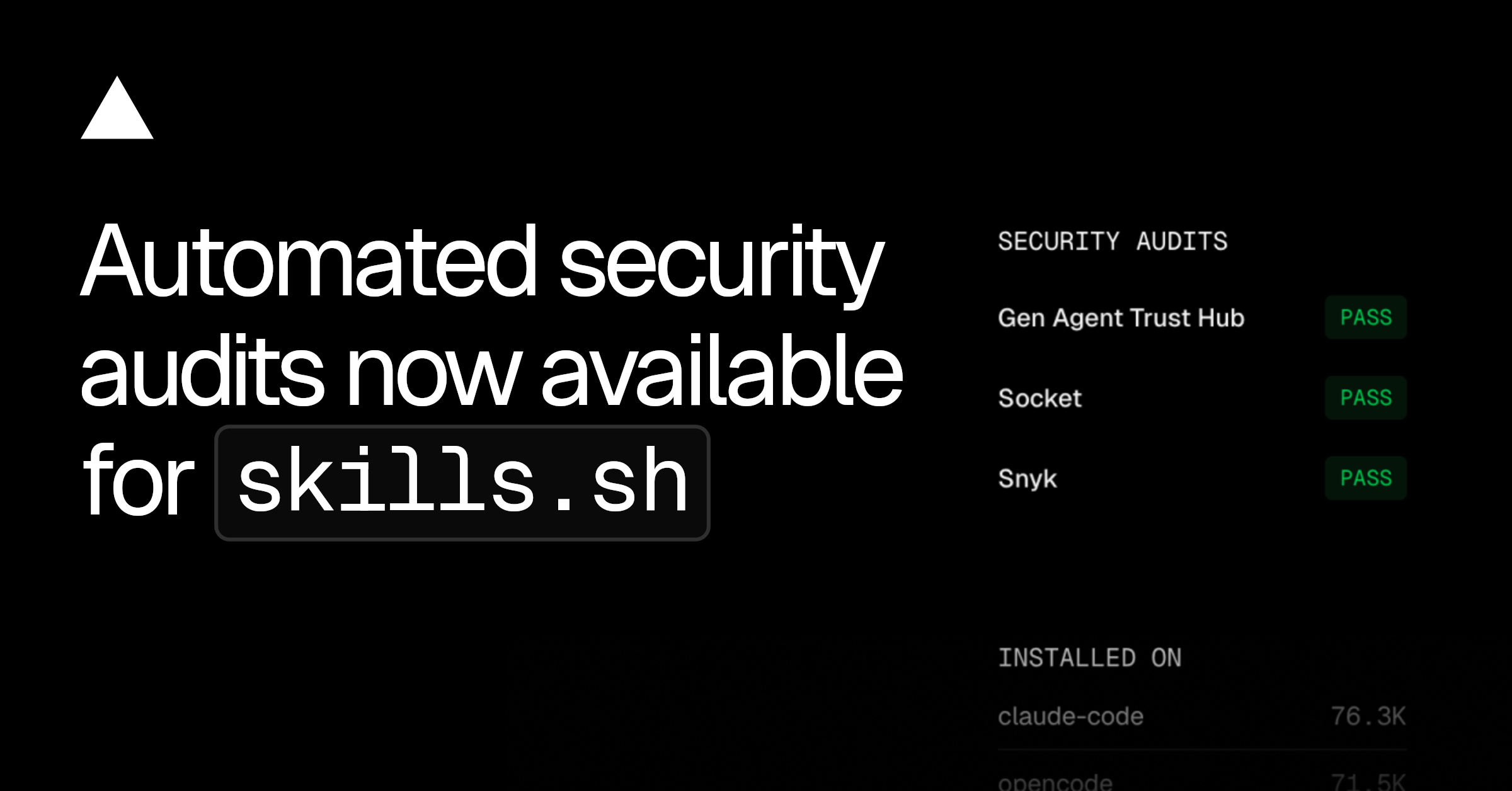

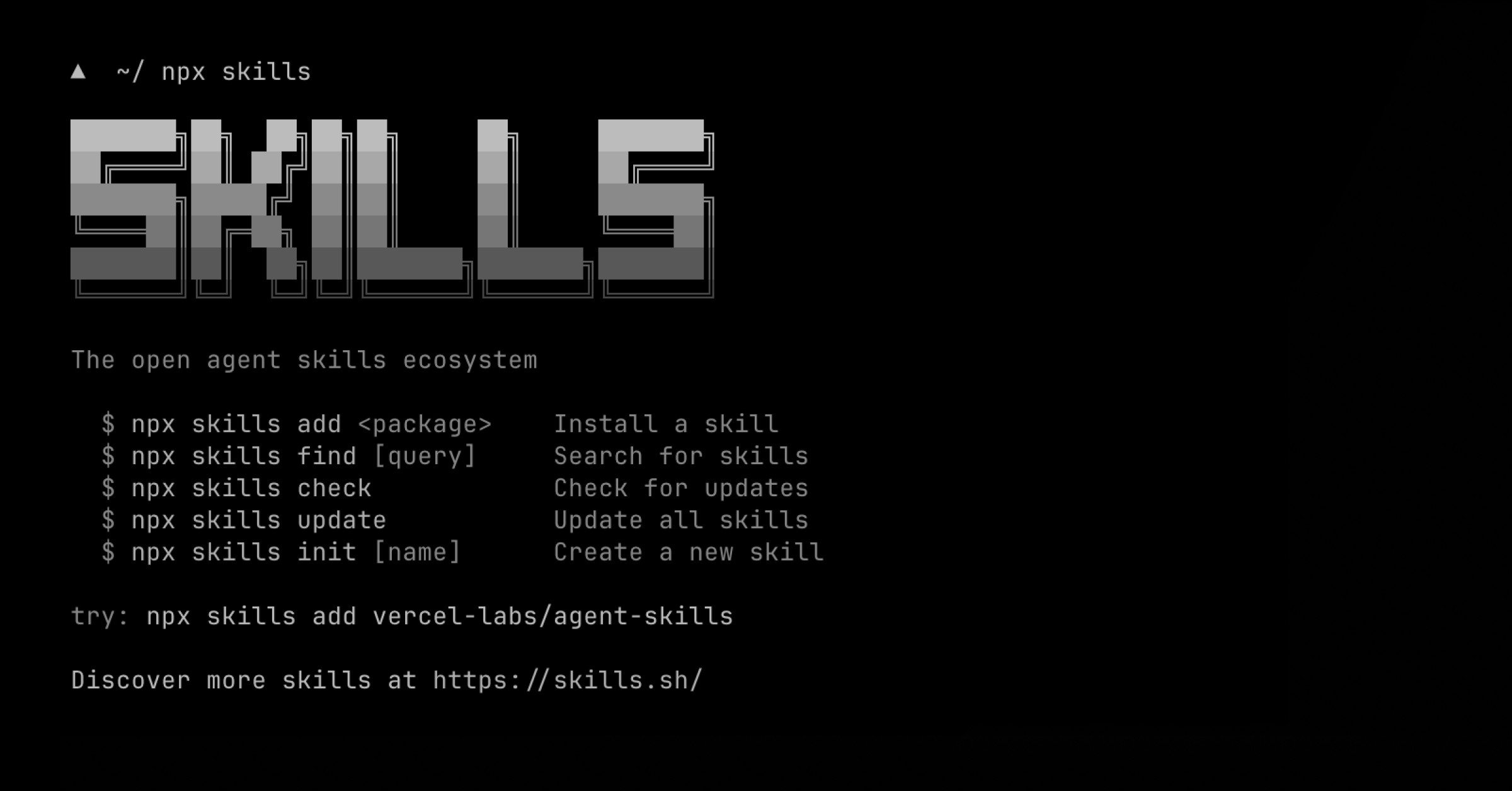

Andrew Qu reflects on Skills Night SF: how a weekend project became 69,000 community-created skills, the security partnerships protecting them, and what eight partner demos revealed about agents, context, and the future of development.

Andrew Qu reflects on Skills Night SF: how a weekend project became 62,000 community-created skills, the security partnerships protecting them, and what eight partner demos revealed about agents, context, and the future of development.

Generate photorealistic AI videos with the Veo models via Vercel AI Gateway. Text-to-video and image-to-video with native audio generation. Up to 4K resolution with fast mode options.

Generate AI videos with Kling models via the AI Gateway. Text-to-video with audio, image-to-video with first/last frame control, and motion transfer. 7 models including v3.0, v2.6, and turbo variants.

Generate AI videos with xAI Grok Imagine via the AI SDK. Text-to-video, image-to-video, and video editing with natural audio and dialogue.

Build video generation into your apps with AI Gateway. Create product videos, dynamic content, and marketing assets at scale.

Streamdown 2.3 focuses on design polish and developer experience. Tables, code blocks, and Mermaid diagrams have been redesigned.

Vercel now supports programmatic access to billing usage and cost data through the API and CLI, and we're introducing a new native integration that connects Vercel teams to Vantage accounts.

Vercel Blob now supports private storage for user content or sensitive files. Private blobs require authentication for all read operations, ensuring your data cannot be accessed via public URLs and is only available to authenticated requests.

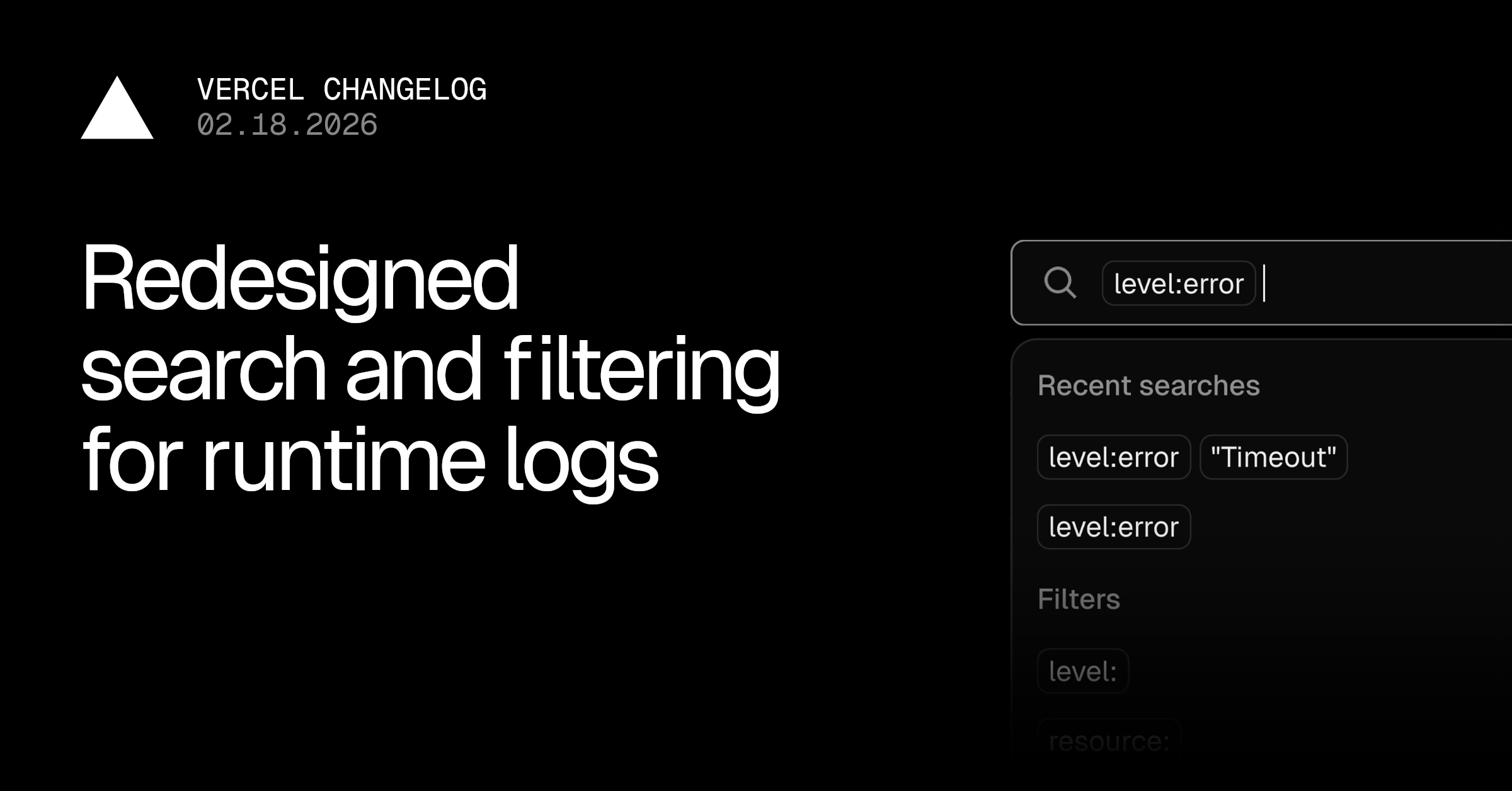

The Logs search bar has been redesigned with visual filter pills, smarter suggestions from your log data, and instant query validation.

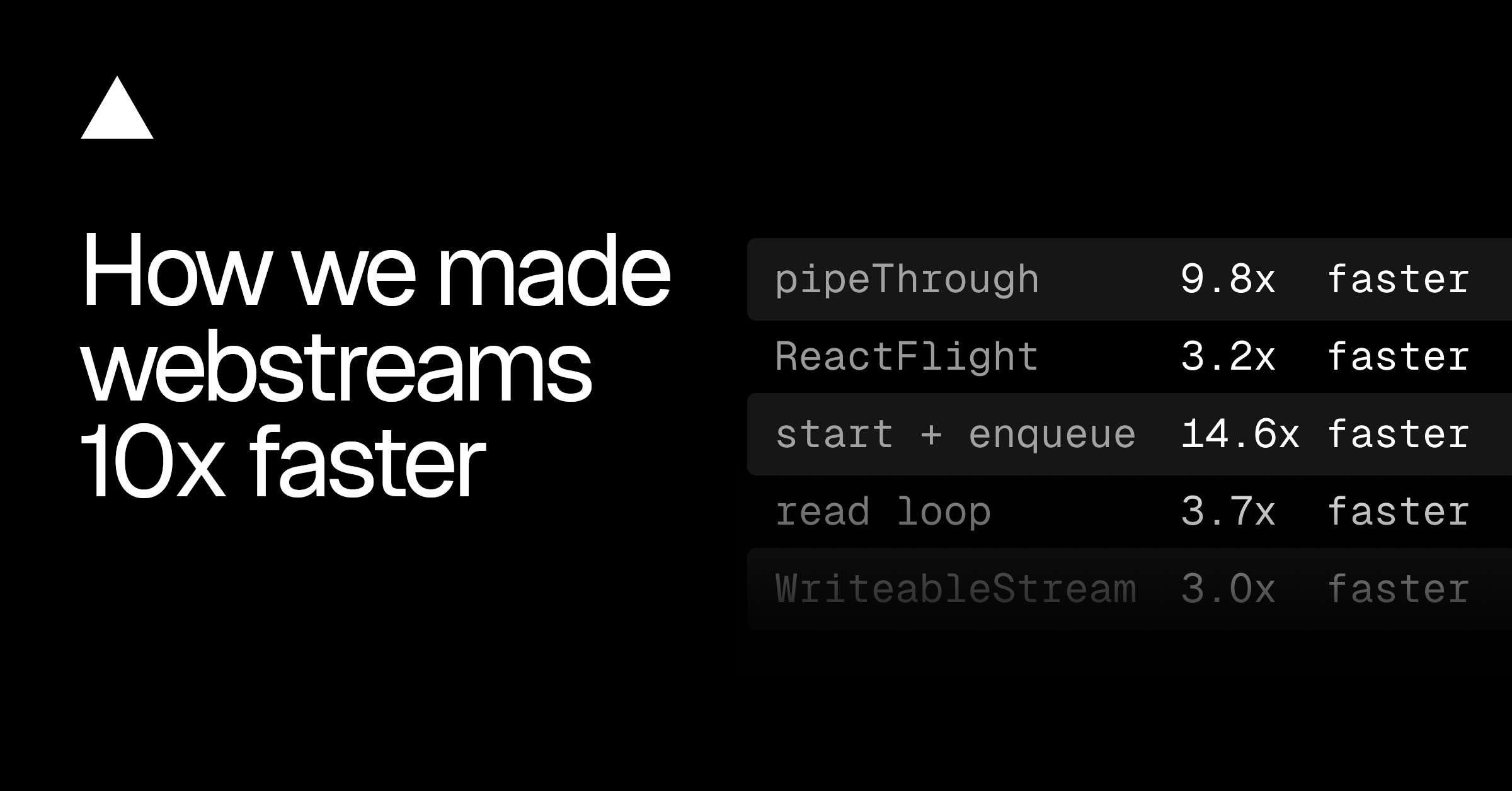

WebStreams had too much overhead on the server. We built a faster implementation. See how we achieved 10-14x gains in Next.js rendering benchmarks.

Skills on skills.sh now have automated security audits to help developers use skills with confidence.

Get automatic code fix suggestions from Vercel Agent when builds fail—directly in GitHub Pull Request reviews or the Vercel Dashboard, based on your code and build logs.

You can now access Anthropic's newest model, Claude Sonnet 4.6, via Vercel's AI Gateway with no other provider accounts required.

Snapshots created with Vercel Sandbox can now use a configurable expiration policy instead of the default 30 days, allowing users to keep snapshots indefinitely.

Runtime Logs exports now stream directly to your browser, export actual requests instead of raw rows, and match exactly what's on your screen or search.

You can now access Alibaba's latest model, Qwen 3.5 Plus, via Vercel's AI Gateway with no other provider accounts required.

The stale-if-error directive is now supported in the Cache-Control header for all responses. It allows the cache to serve a stale response when an error is encountered instead of returning a hard error to the client.
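As a rough illustration of the directive, the sketch below builds a `Cache-Control` value that pairs a freshness lifetime with a `stale-if-error` window. The `CachePolicy` type and `buildCacheControl` helper are hypothetical names invented for this example, and the durations are arbitrary; which freshness directive fits your setup (for example `max-age` versus `s-maxage`) is an assumption to check against your own configuration.

```typescript
// Hypothetical helper for illustration; not part of any Vercel API.
interface CachePolicy {
  maxAgeSeconds: number; // how long the response counts as fresh
  staleIfErrorSeconds: number; // how long a stale copy may be served on error
}

function buildCacheControl(policy: CachePolicy): string {
  const { maxAgeSeconds, staleIfErrorSeconds } = policy;
  for (const value of [maxAgeSeconds, staleIfErrorSeconds]) {
    // Cache-Control durations are whole, non-negative seconds.
    if (!Number.isInteger(value) || value < 0) {
      throw new RangeError("durations must be non-negative integers");
    }
  }
  return `max-age=${maxAgeSeconds}, stale-if-error=${staleIfErrorSeconds}`;
}

// Fresh for one minute; a stale copy may be served for up to a day on error.
const cacheControl = buildCacheControl({
  maxAgeSeconds: 60,
  staleIfErrorSeconds: 86400,
});
```

The resulting string would be set as the `Cache-Control` response header by whatever produces the response.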

Any new deployment containing a version of next-mdx-remote that is vulnerable to CVE-2026-0969 will now automatically fail to deploy on Vercel.

You can now access MiniMax M2.5 through Vercel's AI Gateway with no other provider accounts required.

Browserbase is now available on the Vercel Marketplace, allowing teams to run browser automation for AI agents without managing infrastructure.

You can now access Z.AI's latest model, GLM 5, via Vercel's AI Gateway with no other provider accounts required.

Learn how Vercel scales community support with AI agents. We automated logistics to reclaim human focus, empowering our team to solve complex problems.

Create and manage feature flags in the Vercel Dashboard with targeting rules, user segments, and environment controls. Works seamlessly with the Flags SDK for Next.js and Svelte.

Vercel sandboxes now allow you to restrict internet access to keep exfiltration risks to a minimum.

The vercel logs CLI command has been improved to enable more powerful querying capabilities, and optimized for use by agents.

Vercel now supports Sign in with Apple, enabling faster access for users with Apple accounts and devices.

PostHog now integrates directly with Vercel to help teams manage feature rollouts and run experiments without redeploying code. This integration makes it easier for Vercel users to:

Runtime logs are now available via Vercel's MCP server, enabling AI agents to analyze performance, fix errors, and more.

Learn how skills.sh automates safety reviews, fraud detection, and data normalization using AI. A technical look at running an open leaderboard at scale.

Learn how we built an AI Engine Optimization system to track coding agents using Vercel Sandbox, AI Gateway, and Workflows for isolated execution.

Vercel automatically detects and revokes exposed credentials. Learn about new token formats, new automated secret scanning, and partnership in GitHub's secret scanning program.

Why competitive advantage in AI comes from the platform you deploy agents on, not the agents themselves.

File downloads from Vercel Sandbox are now easier, with new download and read methods added to the Sandbox SDK.

Sanity is now available as a content management system integration on the Vercel Marketplace. Install and configure directly from your Vercel dashboard.

Geist Pixel is a bitmap-inspired typeface built on the same foundations as Geist and Geist Mono, reinterpreted through a strict pixel grid. It’s precise, intentional, and unapologetically digital.

How Stably, a 6-person team, ships AI testing agents faster with Vercel, moving from weeks to hours. Their shift highlights how Vercel's platform eliminates infrastructure anxiety, boosting autonomous testing and enabling quick enterprise growth.

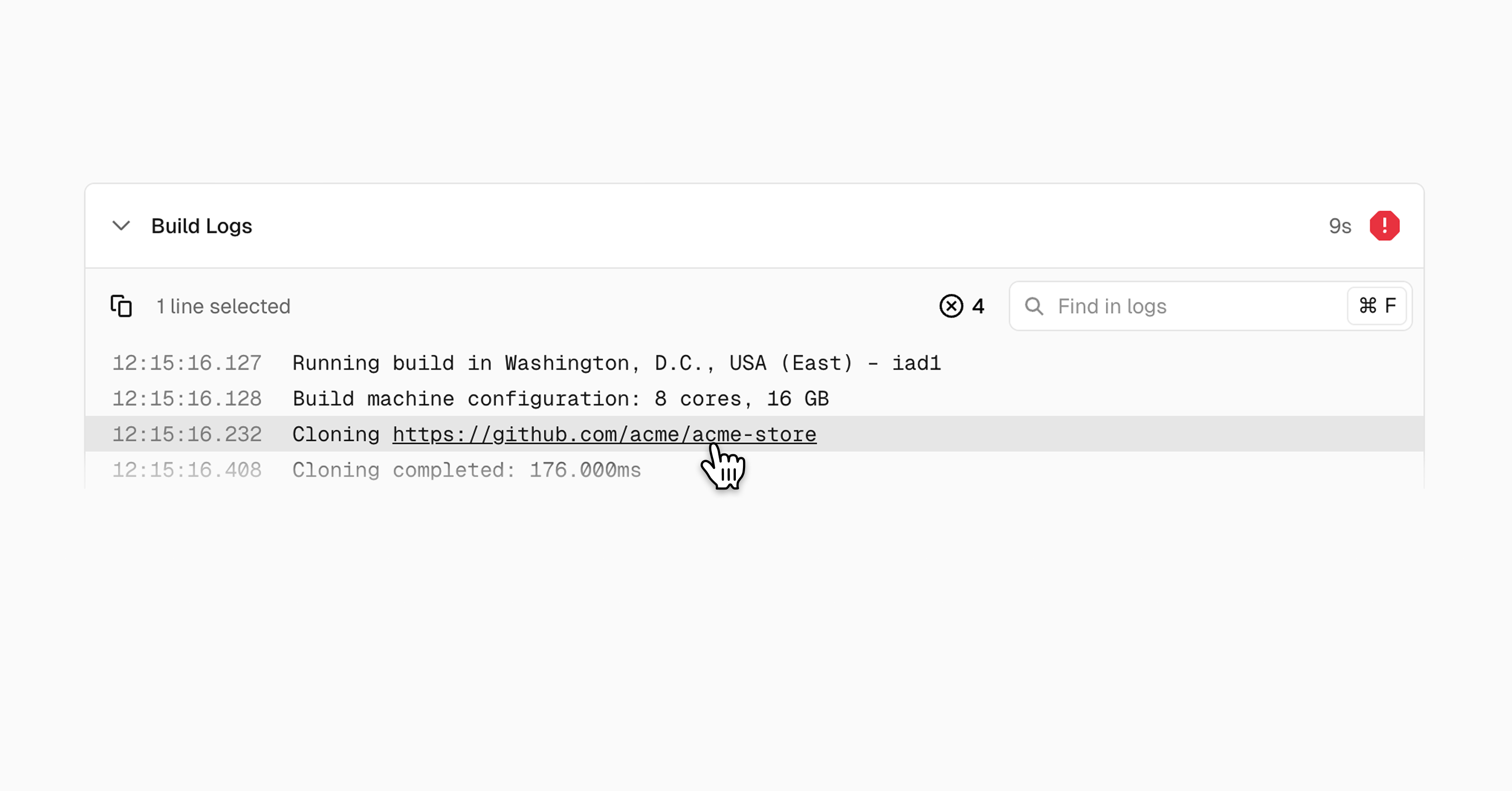

Click URLs directly in build logs to navigate to internal and external resources instantly. No more manual copying and pasting required.

Parallel is now available on the Vercel Marketplace with a native integration for Vercel projects. Developers can add Parallel’s web tools and AI agents to their apps in minutes, including Search, Extract, Tasks, FindAll, and Monitoring.

Parallel's Web Search and other tools are now available on Vercel AI Gateway, AI SDK, and Marketplace. Add web search to any model with domain filtering, date constraints, and agentic mode support.

Vercel now detects and deploys Koa, an expressive HTTP middleware framework to make web applications and APIs more enjoyable to write.

Workflow 4.1 Beta now stores every state change as an event and reconstructs workflow state by replaying them, so failures can be recovered.
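The general idea of rebuilding state from an event log can be sketched as a fold over events. The event shapes, state fields, and function names below are hypothetical and chosen only for illustration; they are not the Workflow event schema or API.

```typescript
// Hypothetical event and state shapes; not the Workflow schema.
type WorkflowEvent =
  | { kind: "started"; input: string }
  | { kind: "step_completed"; step: string; output: string }
  | { kind: "failed"; step: string; error: string };

interface WorkflowState {
  status: "pending" | "running" | "failed";
  input: string | null;
  completedSteps: Record<string, string>;
  lastError: string | null;
}

const initialState: WorkflowState = {
  status: "pending",
  input: null,
  completedSteps: {},
  lastError: null,
};

// Pure transition: the same event applied to the same state gives the same result.
function applyEvent(state: WorkflowState, event: WorkflowEvent): WorkflowState {
  switch (event.kind) {
    case "started":
      return { ...state, status: "running", input: event.input };
    case "step_completed":
      return {
        ...state,
        status: "running",
        lastError: null,
        completedSteps: { ...state.completedSteps, [event.step]: event.output },
      };
    case "failed":
      return { ...state, status: "failed", lastError: event.error };
  }
}

// Rebuild the current state by replaying the whole log from the beginning.
function replay(events: readonly WorkflowEvent[]): WorkflowState {
  return events.reduce(applyEvent, initialState);
}

const eventLog: WorkflowEvent[] = [
  { kind: "started", input: "order-1" },
  { kind: "step_completed", step: "charge", output: "ok" },
  { kind: "failed", step: "ship", error: "timeout" },
];
const recovered = replay(eventLog);
```

After a failure, a runner built this way could inspect `recovered.completedSteps` to skip work that already finished and retry only the failed step.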

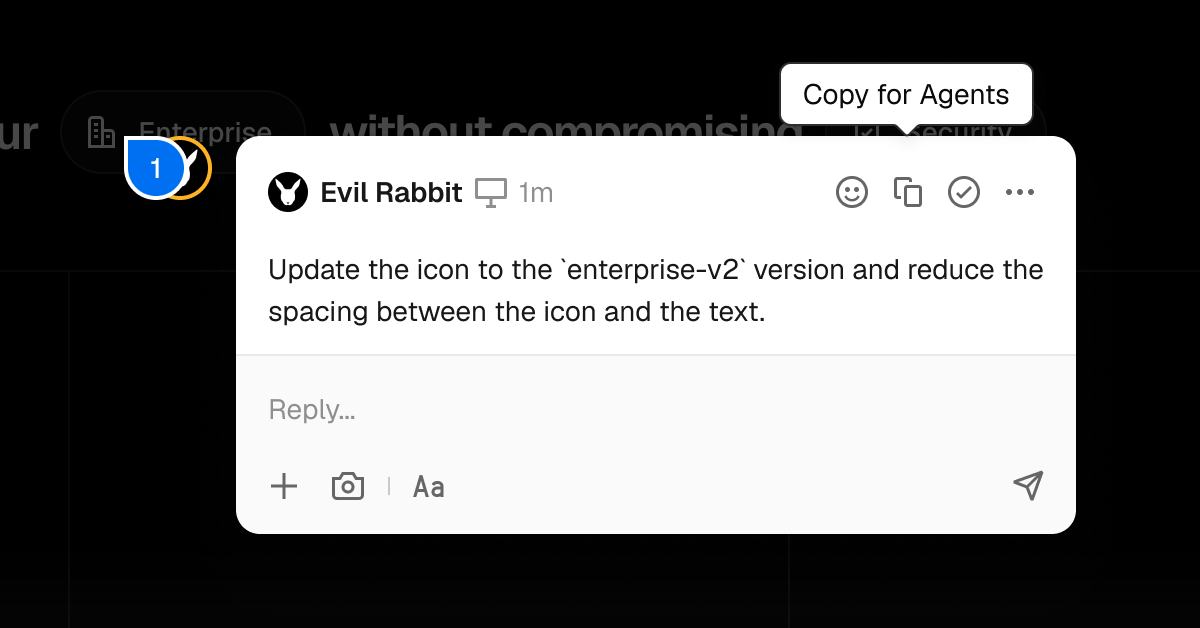

Copy visual context from Vercel Toolbar comments directly to agents. Streamlines feedback-to-code workflow with deployment details and component data.

Turbo build machines are now the default for new Pro Vercel projects, delivering faster builds with 30 vCPUs and 60 GB of memory, up to 3x faster for long builds.

TRAE now integrates with Vercel for one-click deployments and access to hundreds of AI models through a single API key. Available in both SOLO and IDE modes.

Learn how Vercel uses HTTP content negotiation to serve markdown to agents and HTML to humans from the same URL, reducing response sizes by 90% while keeping both versions synchronized.
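A minimal sketch of this kind of negotiation, assuming the agent signals its preference with `text/markdown` in the `Accept` header (the exact media type and rules Vercel uses are not stated here). The function names are hypothetical, and wildcard entries such as `*/*` are deliberately ignored so that browsers fall through to HTML.

```typescript
type Representation = "markdown" | "html";

// Parse an Accept header into media types with their q-values (default 1).
function parseAccept(accept: string): { type: string; q: number }[] {
  return accept
    .split(",")
    .map((part) => {
      const [type, ...params] = part.trim().split(";");
      let q = 1;
      for (const param of params) {
        const [key, value] = param.trim().split("=");
        if (key === "q" && value !== undefined) {
          const parsed = Number.parseFloat(value);
          if (!Number.isNaN(parsed)) q = parsed;
        }
      }
      return { type: (type ?? "").trim().toLowerCase(), q };
    })
    .filter((entry) => entry.type.length > 0);
}

// Serve markdown only when it is explicitly accepted and ranked at least as high as HTML.
function chooseRepresentation(accept: string | null): Representation {
  if (!accept) return "html";
  const entries = parseAccept(accept);
  const qFor = (mediaType: string): number =>
    entries.find((entry) => entry.type === mediaType)?.q ?? 0;
  const markdownQ = qFor("text/markdown");
  const htmlQ = qFor("text/html");
  return markdownQ > 0 && markdownQ >= htmlQ ? "markdown" : "html";
}
```

When one URL serves several representations, the response should normally include `Vary: Accept` so that caches keep the markdown and HTML variants apart.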

Vercel is opening its open source software bug bounty program to the public so researchers can find vulnerabilities and make OSS safer.

The new v0 brings production-ready AI coding to enterprises with git workflows, security, and real integrations. Ship faster with agents and teams.

Python versions 3.13 and 3.14 are now available for use in Vercel Builds and Vercel Functions. Python 3.14 is the new default version for new projects and deployments.

AI agents need secure, isolated environments that spin up instantly. Vercel Sandbox is now generally available with filesystem snapshots, container support, and production reliability.

Cubic joins the Vercel Agents Marketplace, offering teams an AI code reviewer with full codebase context, unified billing, and automated fixes.

Vercel Sandbox is now GA, providing isolated Linux VMs for safely executing untrusted code. Run AI agent output, user uploads, and third-party code without exposing production systems.

AssistLoop is now available in the Vercel Marketplace, making it easy to add AI-powered customer support to Next.js apps deployed on Vercel.

Stripe built a production GTM value calculator in a single flight using v0, boosting adoption 288% and cutting value analysis time by 80%.

Next.js now supports a custom deploymentId in next.config.js, enabling Skew Protection for vercel deploy --prebuilt workflows. Set your own deployment ID using a git SHA or timestamp to maintain version consistency across prebuilt deployments.
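One way to derive such an ID is sketched below. The helper name, the `GIT_SHA` variable, the 12-character truncation, and the `ts-` prefix are all assumptions made for this example, as is the exact placement of `deploymentId` in the config shown in the trailing comment; check the Next.js documentation for the supported format.

```typescript
// Hypothetical helper; GIT_SHA is an assumed variable name set by your CI.
function resolveDeploymentId(
  env: Record<string, string | undefined>,
  now: Date = new Date(),
): string {
  const sha = env["GIT_SHA"]?.trim();
  // Prefer the commit SHA so rebuilding the same commit reuses the same ID.
  if (sha) return sha.slice(0, 12);
  // Otherwise fall back to a timestamp, which is unique per build.
  return `ts-${now.getTime()}`;
}

// Assumed usage in next.config.js (placement not confirmed here):
//   module.exports = { deploymentId: resolveDeploymentId(process.env) };
```

A SHA-based ID stays stable across rebuilds of the same commit, while the timestamp fallback changes on every build, so the two behave differently if you rebuild without code changes.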

With our updated Slack integration, investigations now appear directly in Slack alert messages as a threaded response. This eliminates the need to click into the Vercel dashboard and gives you context to triage the alert directly in Slack.

Cached responses can now assign a comma separated list of cache tags using the Vercel-Cache-Tag header. We recommend using the Vercel-Cache-Tag header for caching with external backends or for frameworks without native cache tag support for ISR.
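As a small sketch, the helper below turns a list of tags into the comma-separated `Vercel-Cache-Tag` header value. The function name and the tag values are hypothetical; trimming, de-duplicating, and rejecting tags that contain commas are choices made for this example rather than documented requirements.

```typescript
// Hypothetical helper for illustration; only the header name comes from the announcement.
function cacheTagHeader(tags: readonly string[]): Record<string, string> {
  const cleaned = Array.from(
    new Set(tags.map((tag) => tag.trim()).filter((tag) => tag.length > 0)),
  );
  for (const tag of cleaned) {
    // A comma inside a tag would be read as a separator between two tags.
    if (tag.includes(",")) {
      throw new Error(`cache tag must not contain a comma: ${tag}`);
    }
  }
  return { "Vercel-Cache-Tag": cleaned.join(",") };
}

const tagHeaders = cacheTagHeader(["product-42", " catalog ", "product-42"]);
```

The returned object can be merged into the headers of a response coming from an external backend before it is cached.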

Sensay went from zero to an MVP launch in six weeks for Web Summit. With Vercel preview deployments, feature flags, and rollbacks, the team shipped fast without a DevOps team.

A compressed 8KB docs index in AGENTS.md achieved 100% on Next.js 16 API evals. Skills maxed at 79%. Here's what we learned and how to set it up.

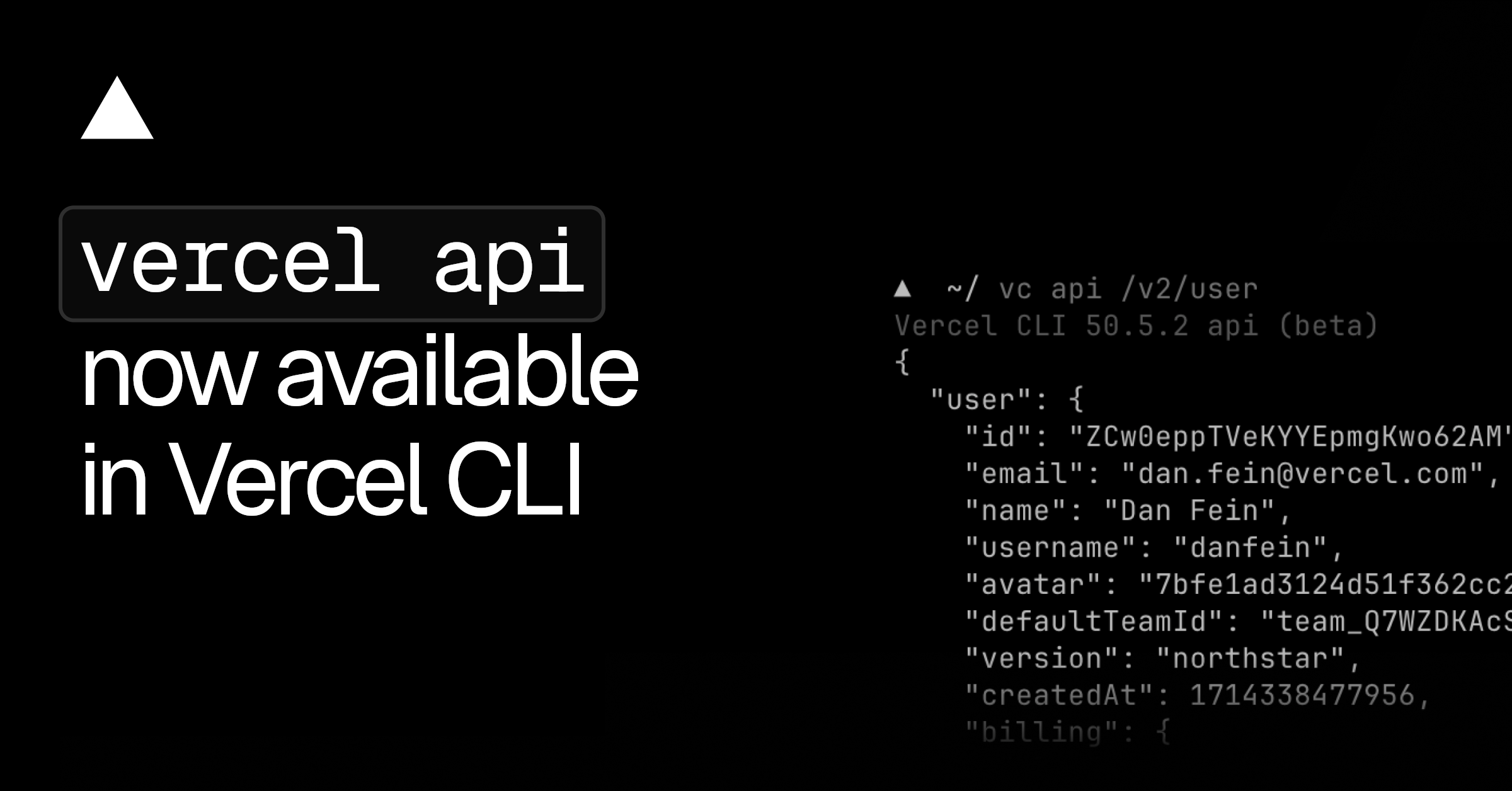

The vercel api command makes an authenticated HTTP request to the Vercel API and prints the response.

You can now access Arcee AI's Trinity Large Preview model via AI Gateway with no other provider accounts required.

You can now access Moonshot AI's Kimi K2.5 model via Vercel's AI Gateway with no other provider accounts required.

A plainspoken Skills FAQ with a ready-to-use guide: what skill packages are, how agents load them, what skills-ai.dev is, how Skills compare to MCP, plus security and alternatives.

You can use your Claude Code Max subscription through Vercel's AI Gateway. This lets you leverage your existing subscription while gaining centralized observability, usage tracking, and monitoring capabilities for all your Claude Code requests.

We are issuing mitigations for CVE-2026-23864, addressing multiple vulnerabilities affecting React Server Components.

Two denial-of-service vulnerabilities were discovered in self-hosted Next.js applications that can cause server crashes through memory exhaustion under specific configurations.

Skills v1.1.1 introduces interactive skill discovery with npx skills find, full open source release on GitHub, and enhanced support for 27 coding agents with automated workflows.